Silent, but salient: gestures in simultaneous interpreting

Abstract

Salience is regarded as one of the key concepts in cognitive studies of language and communication; however, there is limited research on how prominence plays out in multimodal discourse. The present study investigates how salience manifests itself in the gestures of simultaneous interpreters. To distinguish between salient and non-salient gestures produced by the same participant, two groups of observable parameters were chosen: basic and auxiliary. An empirical study was carried out based on simultaneous interpreting of the audio of a TED talk (English → Russian). The video recordings were integrated into ELAN files and annotated for salient gestures, the functions they realized, and the elementary discourse units (EDUs) they co-occurred with. It was hypothesized, first, that salient gestures would be observed less frequently than non-salient gestures; second, that prominence in gestures would serve the function of representing various aspects of a situation more often than other functions; and third, that salient gestures would co-occur more often with EDUs containing verb phrases than with EDUs containing noun phrases. The hypotheses were partially confirmed by quantitative and qualitative analyses, which demonstrated that about one gesture in three was salient; that the representational function ranked second after the pragmatic functions; and that there was no significant difference between the numbers of salient gestures used with verbal and nominal EDUs, although salient gestures tended to co-occur with verbs of physical action and negation, as well as with nouns accompanied by attributes denoting a high degree of a quality.

Keywords: Salience, Simultaneous interpretation, Gesture, Adapter, Pragmatic gesture, Representational gesture, Deictic gesture, Beat, Elementary discourse unit

1. Introduction: Salience in gestures as a challenge for multimodal research

Over recent decades, extensive research on attention has opened new ways of investigating the cognitive processes that underlie a wide variety of linguistic phenomena. Salience, which results from the ability of our “attentive brain” to attribute prominence to entities around us, is widely used in semantics and discourse studies to explain how we mentally construe a scene and foreground objects, events, and properties by various linguistic means (Talmy, 1978; Langacker, 2000; Oakley, 2009).

Focusing, profiling, foregrounding, and other terms linked to prominence are regarded as key concepts in analyzing linguistic phenomena (from word-building to anaphoric binding in texts). However, despite a surge in cognitive multimodal studies in recent years, salience remains a surprisingly under-researched area for the non-verbal means that co-occur with speech, namely gestures. Only a limited number of works are dedicated to prominence in manual movements per se: most often, the salience of gestures is analyzed as an auxiliary feature of some other kinetic, linguistic, and/or cognitive phenomena manifested in both speech and gesture, such as metaphoric and metonymic mappings, iconicity, indexicality, dialogical patterns, mimetic schemas, bilingualism, creativity, etc. (McNeill, 2005; Sweetser, 2007; Cienki, Müller, 2008; Cienki, Mittelberg, 2013; Müller, 2016; Grishina, 2017; O’Connor, Cienki, 2022).

The issues discussed in this literature vary widely: for instance, the relationship between eye gaze and the gestures of interlocutors in multi-party dialogue (Gullberg, Holmqvist, 1999, 2002; Oben, Brône, 2015; Fedorova, Zherdev, 2019); the interplay of metaphoricity and salience in verbal expressions and gestures (Müller, Tag, 2010); variability in the salient features of gestures used by future teachers in the classroom (Tellier, Stam, Ghio, 2021); gestures of bilingual speakers (Cavicchio, Kita, 2013); salient gestures with emphatic stress (Wagner, Malisz, Kopp, 2014); recognition of salience through modelling speech-gesture co-reference resolution (Eisenstein, Barzelay, Davis, 2007); and pointing gestures and their role in foregrounding entities (Grishina, 2012).

Despite the variety of topics connected with salience in gestures, the questions of whether and how spontaneous co-speech gestures can be differentiated as salient and non-salient, and what role they play in foregrounding entities in speech, remain open. Another question is whether salience in gestures is observed in all types of communicative activities, irrespective of their forms (e.g., a dialogue or a monologue), locations (e.g., on stage or in more private off-stage surroundings), and other circumstances (e.g., professional goals, intentions, direct or mediated interactions, etc.). For instance, if we analyze less typical interactions, such as simultaneous interpretation (SI), will salient gestures be used by a speaker who is not involved in direct face-to-face communication and finds themselves “off-stage” (i.e., in an interpreter’s booth)?

Hence, the main purpose of the present study is to investigate, using simultaneous interpretation from English (L2) into Russian (L1), how salience plays out in the gestures of interpreters engaged in this rather specific communicative activity. Choosing gesture salience in simultaneous interpreting as the topic of investigation entailed a number of methodological challenges, or “tensions”, which we took into consideration before and while analyzing the data.

(A) The first challenge is the potential lack of consistency in determining what salience is in relation to gestures. Depending on the researcher’s point of view, the salient characteristics of gestures can be regarded in different ways. From the internal perspective of the speaker (i.e., the person producing the gestures), such features as exertion, control of movement, speed, and velocity should be accounted for and analyzed with motion capture equipment (cf. the kinesiological system for gestural analysis introduced in (Boutet, 2010; Boutet et al., 2016) and described in (Cienki, 2021)). From the external viewpoint of the listener, one should track eye movements to see whether the speaker’s gestures fall within the listener’s central vision zone. However, as shown in (Gullberg, Holmqvist, 1999, 2002; Beattie et al., 2010; Fedorova, Zherdev, 2019), listeners look at only about 7 % of a speaker’s gestures, focusing mostly on the speaker’s face. Moreover, because of peripheral vision, the zone of gaze fixation alone cannot be a reliable indicator of a gesture’s prominence for the listener. A third point of view, more widespread in gesture studies, is the external perspective of the researcher, who analyzes gestural features by concentrating mainly on visually observable parameters: hand shape, palm orientation, direction and manner of motion, and location, central or peripheral (McNeill, 1992; Bressem, 2013). Taken together, this indicates that the choice of parameters for analyzing salience in gestures is determined by the choice of perspective.

(B) The second challenge lies in the speech-dependent nature of gestures: most of the qualities that researchers attribute to gestures are acquired in combination with certain linguistic expressions (emblems being the exception). So, if we rely only on the semantic and functional properties that gestures derive from their co-occurrence with verbal elements, we risk a vicious circle: by regarding gestures as salient because they are synchronized with salient expressions, we may unjustifiably transfer the quality of prominence from words to gestures. Moreover, as numerous studies have demonstrated, gestures are highly variable, non-conventionalized entities (McNeill, 1992) that reflect the individual gestural styles (or profiles) of speakers (Iriskhanova, Cienki, 2018). This implies that a gesture that is salient for one person can be non-salient for another (see the examples below). Thus, salience should be regarded as a relative quality and analyzed with a view to the overall gestural style of the speaker engaged in a given type of activity.

(C) This brings us to the third “tension” in analyzing the salience of gestures. Interlocutors are usually unaware of the body movements that accompany their spontaneous speech, and it is often difficult to decide whether they produced a gesture with the intention of foregrounding something. Even with emphatic gestures, which are inherently salient, researchers can face difficulties because of their multifunctional nature (Cienki, 2021). The pragmatic intention to emphasize a fragment of speech, usually associated with emphatic gestures, can be combined with other pragmatic functions (e.g., warning) or with the function of representation, when a gesture foregrounds a property of an object (e.g., a gesture of banging on the table that imitates another person’s action aimed at drawing somebody else’s attention).

(D) Finally, gestures are sensitive both to the overall context of communicative activities and to micro-changes in various aspects of the communicative situation, such as the cognitive and emotional states and the local intentions of the speakers. In this respect, the immediate context of SI should be taken into account, bearing in mind that little is still known about the gestural behavior of interpreters and how it correlates with the specifics of this type of professional activity. SI is usually characterized by cognitive overload due to the complexity of the mental processes involved, time pressure, and high demands on memory (Gile, 1997; Seeber, 2013; Stachowiak-Szymczak, 2019). Furthermore, SI is a secondary, mediated interaction that normally keeps the interpreter off-stage, in the shadow of the “main speaker”. Although, as we showed elsewhere (Cienki, Iriskhanova, 2020), the gestures of simultaneous interpreters play an important part in off-loading cognition, it is natural to assume that salient gestures would be scarce or absent altogether: an interpreter has to switch quickly from one language to another and, unlike teachers or lecturers, has no audience watching them to “impress”.

In sum, to analyze prominence in gestures, it is important to determine what salience is from a certain viewpoint and in relation to the specifics of SI, what observable features point to a prominent character of a gesture, and how these formal features relate to the functional features of gestures and to the characteristics of speech they co-occur with.

So, we put forward the following research questions:

1) Do simultaneous interpreters use salient gestures?

2) If they do, how frequent are such gestures, and what role do they play?

3) Can any regular patterns be observed concerning the linguistic expressions they are timed with during interpretation?

2. Parameters of gesture salience in the present study: Facing the challenges

Before answering these questions, it is crucial to determine what parameters should be regarded as pointing to the salience of manual movements. To break the vicious circle we mentioned in challenge (B), we focused on the formal criteria, expanding on the ideas introduced in Müller and Tag (2010) and Cienki and Mittelberg (2013). Müller and Tag (2010) show that gestures allow speakers to foreground metaphoricity to various degrees using verbal and gestural modes. The researchers conducted microanalyses to demonstrate the “embodied experience of metaphor” (Müller, Tag, 2010: 1) through gestures that are performed in such a way that a listener cannot overlook them. These gestures are produced in a large way, within the focal attentional space between the interlocutors. They are sometimes accompanied by another gesture that additionally highlights a metaphoric expression, and if they move out of the focal space, they are followed by the listener’s gaze. Following this line of thought, Cienki and Mittelberg (2013) look into creativity in gestures and argue that spontaneous gestures are non-creative if they are “low in dynamicity, […] if they use a limited amount of space, […] and if they only involve movements of the hands and possibly the forearms” (Cienki, Mittelberg, 2013: 240). Thus, the scholars regard creativity and salience as opposed to non-creativity and backgrounding, indicating that functionally creative (=salient) gestures serve to elaborate on an idea, to synthesize it, and to comment on the speaker’s attitude towards an object.

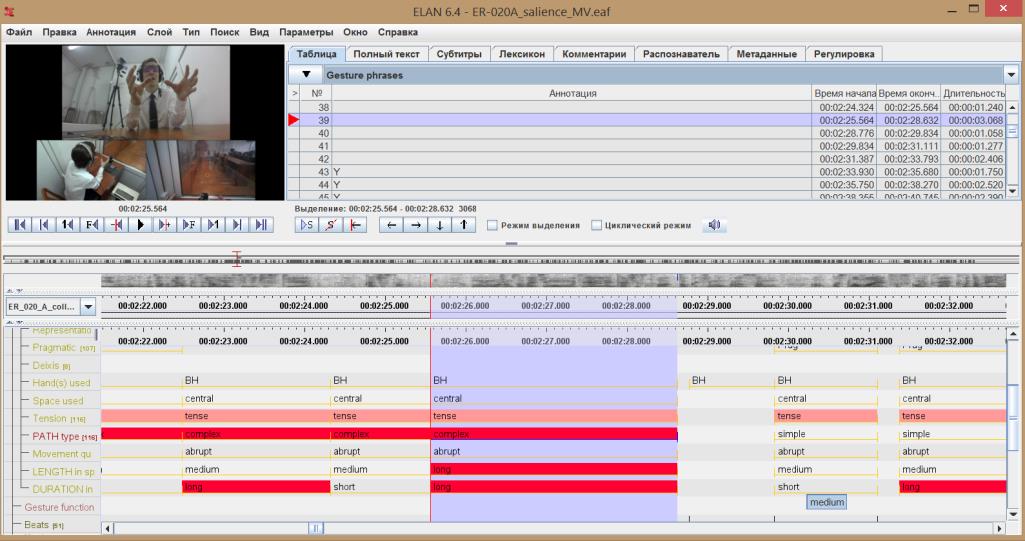

Following up on Müller and Tag (2010) and Cienki and Mittelberg (2013), we chose formal parameters of gestures for deciding on their salience or non-salience. The parameters were applied in coding the video material (see section 3) and were divided into basic and auxiliary. The basic parameters were tension (tense vs. lax), path type (complex vs. simple), length in space (long vs. short and medium), and duration in time (long vs. short). Each basic feature was treated as a manifestation of a gesture’s salience on its own, so a gesture counted as salient even if only one of them was observed (a tense, complex, spatially long, or temporally long movement). The auxiliary parameters, namely the hands used (both-handed vs. single-handed), the space used (peripheral vs. central), and movement quality (abrupt vs. smooth), were regarded as peripheral: they pointed to the salience of a gesture only when coupled with at least one basic parameter.

This approach allowed us to meet some of the methodological challenges mentioned in section 1, as it ensured consistent decisions about the prominence of gestures from the external perspective of the researcher engaged in visual analysis (challenge (A)). Taking the formal criteria as the basis for the initial stage of the research, we identified salient gestures independently of the characteristics of the speech (both semantic and pragmatic), thus overcoming the “verbal bias” in defining the prominence of non-verbal kinetic entities (challenges (B) and (C)). We moved on to analyzing linguistic expressions at a later stage, to determine the functional characteristics of salient gestures and to answer the questions about their role in the overall context of SI and their co-occurrence with linguistic expressions in micro-contexts (challenge (D)).

3. Method of collecting and analyzing data from SI

3.1. Participants, procedure, and material

The research draws on video data elicited from eight simultaneous interpreters, native speakers of Russian, with an average of about 3 years of experience in SI. They were asked to interpret a ten-minute audio fragment of a TED talk from English into Russian (https://www.ted.com). The talk is devoted to the extinction of species and, according to the participants, is of medium task complexity. In addition to numbers, it contains about 20 specific terms on biodiversity, which were provided to the interpreters beforehand. The circumstances of data collection were kept as close to the natural context of SI as possible. After giving informed consent and completing the LEAP questionnaire on language exposure (Marian, Blumenfeld, Kaushanskaya, 2007), each interpreter was invited to a booth used for teaching SI at the university. Apart from the usual SI equipment, the booth was fitted with video cameras providing three perspectives: a frontal close-up view, a view from behind, and the interpreter’s view of the surrounding objects from an eye-tracker camera. In the present study of salient gestures, only the first two angles were taken into consideration. Importantly, the participants were asked not to hold anything in their hands, a compromise between our wish to create as natural a context as possible for the interpreters and our aim of obtaining as many gestures as possible. After the session, the interpreters completed questionnaires about their experience in SI, their handedness, and their knowledge of the topic of the source text.

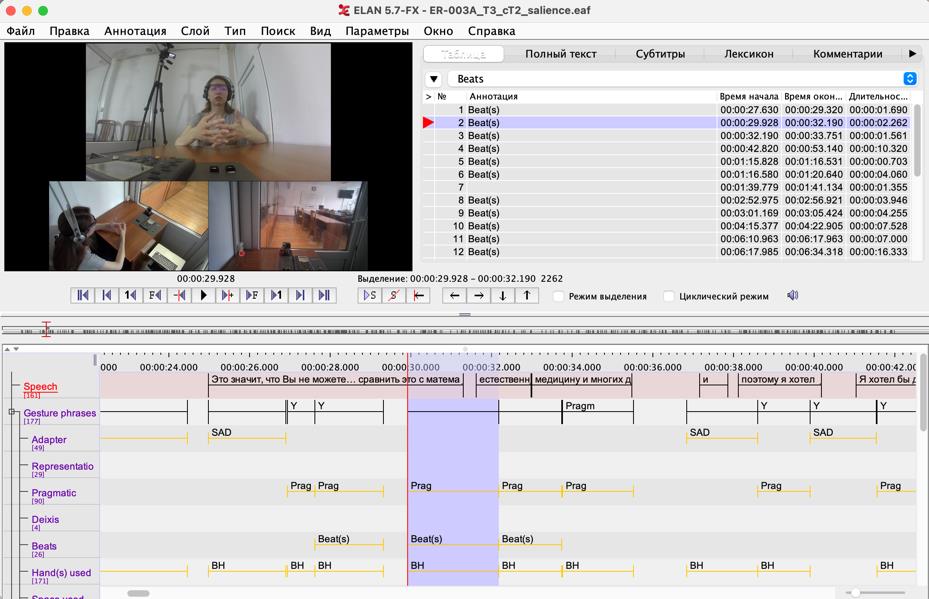

As a result, we obtained a set of video recordings with a total duration of 85 minutes. The audio of the interpreters’ speech was synchronized with the recordings from the three cameras, and together they were incorporated into ELAN (https://archive.mpi.nl/tla/elan), a software tool developed by the Max Planck Institute for Psycholinguistics, The Language Archive, Nijmegen, The Netherlands. This allowed precise temporal alignment of the transcribed target text (in L1) with the annotations presented across several tiers and with the triple video (Figure 1):

Figure 1. A screenshot of an ELAN file with the triple videos and the annotation tiers

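How time-aligned annotations can be read back out of an ELAN file is illustrated by the minimal sketch below. It assumes the standard ELAN `.eaf` XML layout (a `TIME_ORDER` of `TIME_SLOT` elements, and `TIER` elements holding `ALIGNABLE_ANNOTATION`s that point to slot ids); it is not the authors’ pipeline, and the function name `tier_annotations` is hypothetical. It returns one tier as (start, end, value) triples in milliseconds.

```python
import xml.etree.ElementTree as ET


def tier_annotations(root, tier_id):
    """Return sorted (start_ms, end_ms, value) triples for one ELAN tier.

    `root` is the parsed <ANNOTATION_DOCUMENT> element of an .eaf file.
    Only time-aligned annotations (ALIGNABLE_ANNOTATION) are read;
    symbolic REF_ANNOTATIONs on dependent tiers are ignored.
    """
    # TIME_ORDER maps slot ids such as "ts1" to times in milliseconds;
    # unaligned slots carry no TIME_VALUE attribute and are skipped.
    slots = {
        ts.get("TIME_SLOT_ID"): int(ts.get("TIME_VALUE"))
        for ts in root.iter("TIME_SLOT")
        if ts.get("TIME_VALUE") is not None
    }
    tier = next((t for t in root.iter("TIER") if t.get("TIER_ID") == tier_id), None)
    if tier is None:
        raise KeyError(f"no tier named {tier_id!r}")

    rows = []
    for ann in tier.iter("ALIGNABLE_ANNOTATION"):
        start = slots.get(ann.get("TIME_SLOT_REF1"))
        end = slots.get(ann.get("TIME_SLOT_REF2"))
        if start is None or end is None:
            continue  # a boundary is not anchored to the timeline
        rows.append((start, end, ann.findtext("ANNOTATION_VALUE", default="")))
    return sorted(rows)
```

A typical call would be `tier_annotations(ET.parse("interpreter01.eaf").getroot(), "Salience")`, where both the file name and the tier name are placeholders for whatever a given annotation template uses.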

3.2. Data annotation and analysis

The annotation was carried out by a team of four experienced coders. The analyses involved three stages corresponding to the research questions: at the first stage, the interpreters’ gestures were analyzed for salience on the basis of their formal features, irrespective of the linguistic expressions they co-occurred with. At the second stage, we zoomed in on the salient gestures to investigate their functional properties, and at the third stage we analyzed the co-occurrence of salient gestures with noun phrases and verb phrases. We proceeded from the assumptions that (a) due to the secondary, mediated, and off-stage nature of SI, salient gestures would be observed less frequently than non-salient gestures; (b) prominence in gestures would serve the function of representing various aspects of a situation more often than other functions; (c) as salient gestures contribute to foregrounding dynamic aspects of a scene, they would co-occur more often with elementary discourse units (EDUs) containing verb phrases than with those containing noun phrases.

To test these hypotheses, multimodal analyses were performed on both kinetic and linguistic units: gestures and the elementary discourse units that the manual gestures co-occurred with. We used the term gesture in a broad sense (cf. Kendon, 2004), annotating all kinds of gestures, including adapters, which, as we showed in our previous studies (Cienki, Iriskhanova, 2020), play an important part in SI. By elementary discourse unit (EDU) we understand a basic segment of talk corresponding to a short clause or part of a clause (2–4 words), usually delimited by short pauses and other prosodic means (Kibrik, Podlesskaya, 2009). In this study, the EDUs that temporally coincided with a salient gesture were divided into verbal and nominal EDUs depending on the presence or absence of a verb (этим я занимался по время своей карьеры (I was doing it during my career) vs. это специальное нагрузочное тестирование (this [is] a special strength test)).
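The co-occurrence step can be sketched as interval overlap between gesture annotations and EDU annotations. This is a minimal illustration under stated assumptions: the text does not say how a gesture spanning two EDUs was handled, so here each salient gesture is (hypothetically) assigned to the EDU with which it shares the most time, and gestures that overlap no EDU are left uncounted. The verbal or nominal label is assumed to be already coded by hand on each EDU; the function names are illustrative.

```python
from collections import Counter


def overlap_ms(a, b):
    """Shared duration of two (start_ms, end_ms) intervals; 0 if disjoint."""
    return max(0, min(a[1], b[1]) - max(a[0], b[0]))


def edu_type_counts(gestures, edus):
    """Count salient gestures by the type of EDU they co-occur with.

    gestures: (start_ms, end_ms, salience_label) triples
    edus:     (start_ms, end_ms, edu_type) triples, e.g. "verbal" / "nominal"
    Assumption: a gesture overlapping several EDUs goes to the EDU with the
    largest temporal overlap; gestures overlapping no EDU are skipped.
    """
    counts = Counter()
    for g_start, g_end, label in gestures:
        if label != "salient":
            continue
        best_overlap, best_type = 0, None
        for e_start, e_end, edu_type in edus:
            shared = overlap_ms((g_start, g_end), (e_start, e_end))
            if shared > best_overlap:
                best_overlap, best_type = shared, edu_type
        if best_type is not None:
            counts[best_type] += 1
    return counts
```

Assigning each gesture to exactly one EDU keeps the verbal and nominal counts summing to the number of salient gestures that overlap speech, which is the kind of split reported in section 4.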

Gestures were coded for salience and functions. To code salience, we followed the formal parameters described in section 2: tension, path type, length in space, and duration in time as the obligatory parameters, and handedness, the space used, and movement quality as the peripheral ones. If a gesture demonstrated at least one of the basic features (tension, complexity of path, length in space, or long duration), it was coded as salient. The peripheral parameters were treated as auxiliary in determining salience because (a) the use of both hands and the central position were often determined by the default position for gesturing: the interpreters were seated at a table in the booth and produced gestures over the table in front of them; (b) abruptness and smoothness of movement turned out to be difficult to differentiate, which could lead to subjective decisions, especially with micro-movements of the fingers.
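The coding rule above can be written down as a small decision function. This is a sketch, assuming each parameter has already been coded by the annotators as present or absent; the class and field names are hypothetical and do not reproduce the labels of the actual ELAN template.

```python
from dataclasses import dataclass


@dataclass
class GestureCoding:
    # basic (obligatory) parameters: any single one suffices for salience
    tense: bool = False
    complex_path: bool = False
    long_in_space: bool = False
    long_in_time: bool = False
    # auxiliary (peripheral) parameters: never sufficient on their own
    both_hands: bool = False
    peripheral_space: bool = False
    abrupt: bool = False


def is_salient(g: GestureCoding) -> bool:
    """A gesture is salient if at least one basic parameter is present."""
    return g.tense or g.complex_path or g.long_in_space or g.long_in_time


def supporting_auxiliary_cues(g: GestureCoding) -> list:
    """Auxiliary cues count as evidence only when coupled with a basic one."""
    if not is_salient(g):
        return []
    cues = {"both hands": g.both_hands, "peripheral space": g.peripheral_space, "abrupt": g.abrupt}
    return [name for name, present in cues.items() if present]
```

For instance, a two-handed, abrupt but lax, simple, short movement is not salient under this rule, because none of its cues is a basic one.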

As to functions, we relied on the typology of gestures in Müller (1998) and Cienki (2013), dividing gestures into the following categories: adapters (self-adapters SAD and object-adapters OAD), like rubbing fingers or touching the eye-tracker glasses; representational gestures that illustrate certain qualities of entities, like size, manner of movement, form, etc.; pragmatic gestures that show a speaker’s stance or intention (addressing the audience, expressing agreement, emphasizing, etc.); beats that mark the rhythm of the speech; deictic gestures that point at some entities, either concrete or abstract.

The annotation tiers in the ELAN files are presented in Figure 2, with obligatory salience subcategories being highlighted in red:

Figure 2. The annotation tiers as presented in an ELAN file with basic (obligatory) and peripheral (auxiliary) subcategories for gesture salience


As Figure 2 shows, gestures can vary with respect to how salience manifests itself through basic and peripheral parameters. First, they differ in how many basic features of prominence a single gesture exhibits. For example, a participant accompanies the nominal EDU очень многие политики (quite a lot of politicians) with a two-handed gesture consisting of a sequence of palm-up-open-hand (PUOH) gestures produced with shaking movements. The gesture is characterized by three basic parameters of salience: tenseness, complexity, and long duration. In contrast, another gesture by the same speaker shows only one basic parameter of salience, complexity of path, realized through a short sequence of lax cyclic movements of the left hand. This gesture co-occurs with the verbal EDU когда об этом говорил виц[е-президент] (when Vice[-President] spoke about it) (Figures 3a, b):

Figure 3a. A gesture with 3 basic parameters of salience: очень многие политики (quite a lot of politicians)


Figure 3b. A gesture with 1 basic parameter of salience: когда об этом говорил виц[е-президент] (when Vice[-President] spoke about it)


Second, gestures differ in what kind of salience is manifested during their production. There are different types of salience for the gestures we analyzed, depending on which of the basic parameters of prominence were observed: (a) spatial salience for the gestures that took up more space than other gestures by the same person, but were lax, had a simple path, and were brief as compared to longer gestures, such as holds; (b) manner-of-movement salience for the gestures which displayed tenseness, complexity of path, and / or long duration in time; (c) mixed-type salience that is a combination of the first two.
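The three types follow directly from which basic parameters are present, so the classification can be sketched as below. The sketch assumes each basic parameter has been coded as present or absent, and the function name is hypothetical; as in the definition of type (b), long duration is grouped with the manner-of-movement cues rather than with space.

```python
def salience_type(long_in_space, tense, complex_path, long_in_time):
    """Classify a coded gesture into one of the three salience types, or None."""
    manner = tense or complex_path or long_in_time  # manner-of-movement cues
    if long_in_space and manner:
        return "mixed"
    if long_in_space:
        return "spatial"
    if manner:
        return "manner-of-movement"
    return None  # no basic parameter present: the gesture is non-salient
```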

It is also important to point out that, concerning such parameters as length in space, or duration in time, the decisions about them were made on the basis of the overall individual style of gesticulation (“gesture-lect”) of an interpreter: if, compared to other gestures produced by the same interpreter, a certain movement stood out as being longer in space and/or time, we counted such a gesture as prominent (Figures 4a, b):

Figure 4a. A salient gesture of a participant with smaller manual movements


Figure 4b. A salient gesture of a participant with bigger manual movements

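In the study, the speaker-relative judgment of length was made by the coders, not by a formula. Purely as a hypothetical illustration of the idea of a “gesture-lect” baseline, the sketch below flags a gesture as long in time when its duration exceeds the third quartile of that interpreter’s own gesture durations. The quartile cutoff, the function name, and the toy durations are assumptions, not the study’s procedure.

```python
from collections import defaultdict
from statistics import quantiles


def flag_long_gestures(gestures):
    """Flag gestures that are long relative to their own speaker's style.

    gestures: list of (interpreter_id, duration_ms) pairs.
    Returns one bool per input gesture, in input order. The per-speaker
    cutoff is the third quartile of that speaker's durations (an assumed,
    illustrative threshold).
    """
    by_speaker = defaultdict(list)
    for speaker, duration in gestures:
        by_speaker[speaker].append(duration)

    thresholds = {}
    for speaker, durations in by_speaker.items():
        if len(durations) < 2:
            thresholds[speaker] = float("inf")  # too few gestures to define a style
        else:
            thresholds[speaker] = quantiles(durations, n=4)[2]  # third quartile
    return [duration > thresholds[speaker] for speaker, duration in gestures]
```

With invented durations of 200, 300, 400, 500, and 1400 ms for interpreter A and 1000 to 1800 ms for interpreter B, a 1400 ms gesture is flagged for A but not for B, which mirrors the contrast between Figures 4a and 4b.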

4. Salient gestures in SI: Results and discussion

The data set contains 1244 gestures: salient gestures make up 34 % (N = 422) and non-salient gestures 66 % (N = 822) of the total number of gestures produced by the interpreters. The overwhelming majority of salient gestures showed either manner-of-movement salience (77 %; N = 327) or mixed-type salience (19 %; N = 82). These results suggest, first, that about a third of all gestures produced across the data are salient, which confirms the first hypothesis (section 3.2) that salient gestures would be used less frequently than non-salient ones. Second, in almost all salient gestures (all except the 3 % with purely spatial salience), prominence involves the manner of movement (tension, complexity of path, and/or duration), and in most cases (the 77 % with manner-of-movement salience) it is achieved within a small space rather than through lax, “large” movements. This could be due to the circumstances of SI: cognitive overload, time pressure, the isolated (off-stage) position of the speaker, and the restricted space of the booth make the production of salient gestures less likely and permit less “investment” in them in terms of space.
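The percentages reported above can be re-derived from the raw counts. The number of gestures with purely spatial salience is not given directly, so it is taken here as the remainder 422 − 327 − 82 = 13. A short check:

```python
def pct(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


total, salient, non_salient = 1244, 422, 822
manner, mixed = 327, 82
spatial = salient - manner - mixed  # remainder: purely spatial salience

shares = {
    "salient / all": pct(salient, total),
    "non-salient / all": pct(non_salient, total),
    "manner-of-movement / salient": pct(manner, salient),
    "mixed / salient": pct(mixed, salient),
    "spatial / salient": pct(spatial, salient),
}
for label, value in shares.items():
    print(f"{label}: {value} %")
```

This gives 33.9 %, 66.1 %, 77.5 %, 19.4 %, and 3.1 %, consistent with the rounded figures in the text.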

To answer the research question about the role of salient gestures in SI, we investigated their functional properties, relying on the typology proposed in Müller (1998) and Cienki (2013) and presented in section 3.2. A quantitative analysis of the salient gestures revealed the proportion of each function among all the functional roles displayed by these gestures, and the findings were compared with those for the non-salient gestures. By counting instances of realized functions rather than gestures, we were able to quantify multifunctional gestures, i.e., those combining several functions. For example, if a gesture had two functions (e.g., representing an action and showing it to the imagined audience, which is regarded as pragmatic), we counted it as two instances: one representational and one pragmatic. The percentage distribution of functions for salient and non-salient gestures is shown in Figures 5a, b:

Figure 5a. Functions realized by the salient gestures in SI (N = 489 function instances)


Figure 5b. Functions realized by the non-salient gestures in SI (N = 884 function instances)

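Counting function instances rather than gestures is why the totals in Figures 5a, b (489 and 884) exceed the numbers of salient and non-salient gestures (422 and 822). A minimal sketch of that counting scheme, using invented toy labels (the function name is hypothetical):

```python
from collections import Counter


def function_distribution(gesture_functions):
    """Count function instances and their percentage shares.

    `gesture_functions` holds one set of function labels per gesture. A
    gesture with k functions contributes k instances, so the number of
    instances can exceed the number of gestures.
    """
    counts = Counter()
    for functions in gesture_functions:
        counts.update(functions)
    total = sum(counts.values())
    shares = {label: 100 * n / total for label, n in counts.items()} if total else {}
    return counts, shares
```

Applied to the reported counts, the same share computation gives 117 / 489 ≈ 23.9 % representational instances among salient gestures and 339 / 884 ≈ 38.3 % adapter instances among non-salient ones.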

Figures 5a, b point to similarities and differences in the distribution of functions realized by the prominent and the background gestures of the simultaneous interpreters. Contrary to our second hypothesis (section 3.2), the prevalent function is pragmatic, irrespective of salience. The groups differ in adapters (0.4 %, N = 2 function instances for salient gestures vs. 38.3 %, N = 339 for non-salient gestures) and in representational gestures (23.9 %, N = 117 vs. 2.6 %, N = 23). The abundance of pragmatic gestures, both salient and non-salient, is linked to the important role they play in overcoming difficulties in SI: they may help the interpreter to visualize the speaker (in our case, a lecturer) and to blend the interpreter’s perspective with that of the person whose talk is being interpreted. The difference in adapters could be explained by their nature: as psychological studies have indicated, this type of manual movement serves to off-load cognition and emotions. The difference in representational gestures seems to have deeper cognitive implications related to the iconicity principles outlined in Givón (1985) and Haiman (1985): representational gestures are considered to carry more conceptual content than other types of gestures. According to the quantity principle of iconicity, a larger chunk of information tends to be given a larger chunk of code. Applied to gesture salience, this means that multimodal miming in SI probably requires more resources to be invested. On the one hand, gestures that convey information about an entity more explicitly are more often produced within a larger space, with a more complex path, or over a longer time (i.e., form mimes meaning within the gestural modality). On the other hand, gestures that semantically correspond to the speech tend to be more salient than gestures that do not (i.e., form in one modality mimes meaning in another modality).

The quantitative analysis was supplemented by a two-way ANOVA to test whether salience, gesture function, and their interaction significantly affect the frequency of gestures, with a view to the individual gesture styles of the interpreters. The salience factor did not reach statistical significance at the 0.01 level adopted here (F(A) = 5.623, p = .02), whereas the functional factor revealed a significant difference in gesture use (F(B) = 10.194, p < .01), and the interaction between salience and functional properties of gestures was also significant (F(A×B) = 4.794, p < .01). The latter means that the two effects may depend on one another: how often gestures with a given function occur differs between salient and non-salient gestures. However, it should be underlined that further analyses will be needed to confirm these findings and to obtain robust quantitative results.
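The structure of such a test can be illustrated with a from-scratch balanced two-way ANOVA with replication. This is a sketch under assumptions the text does not state: it supposes one frequency count per interpreter (the replicates) in every salience × function cell, so the data form an array of shape (2, number of functions, number of interpreters). Because each interpreter contributes to every cell, a repeated-measures or mixed-effects model would be a reasonable alternative; the sketch uses the simpler between-subjects formulas, and the function name is hypothetical.

```python
import numpy as np
from scipy.stats import f as f_dist


def two_way_anova(y):
    """Balanced two-way ANOVA with replication (fixed effects).

    y: array of shape (a, b, n), i.e. a levels of factor A (e.g. salient /
    non-salient), b levels of factor B (e.g. gesture functions), and n
    replicates per cell (assumed here: one count per interpreter).
    Returns SS, df, F, and p for A, B, and the A x B interaction.
    """
    y = np.asarray(y, dtype=float)
    a, b, n = y.shape
    grand = y.mean()
    cell = y.mean(axis=2)          # (a, b) cell means
    mean_a = y.mean(axis=(1, 2))   # (a,) marginal means of factor A
    mean_b = y.mean(axis=(0, 2))   # (b,) marginal means of factor B

    ss = {
        "A": b * n * np.sum((mean_a - grand) ** 2),
        "B": a * n * np.sum((mean_b - grand) ** 2),
        "AxB": n * np.sum((cell - mean_a[:, None] - mean_b[None, :] + grand) ** 2),
        "error": np.sum((y - cell[:, :, None]) ** 2),
    }
    df = {"A": a - 1, "B": b - 1, "AxB": (a - 1) * (b - 1), "error": a * b * (n - 1)}
    ms_error = ss["error"] / df["error"]

    table = {"error": {"SS": ss["error"], "df": df["error"]}}
    for effect in ("A", "B", "AxB"):
        f_stat = (ss[effect] / df[effect]) / ms_error
        table[effect] = {
            "SS": ss[effect],
            "df": df[effect],
            "F": f_stat,
            "p": f_dist.sf(f_stat, df[effect], df["error"]),
        }
    return table
```

In a balanced design the four sums of squares add up exactly to the total sum of squares around the grand mean, which is a convenient sanity check on any implementation.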

The third research question concerned whether there are regularities in the use of salient gestures with certain properties of linguistic expressions. To answer it, we investigated the co-occurrence of salient gestures with verbal and nominal EDUs and obtained the following quantitative results: of the 422 salient gestures, 231 (55 %) were used with verbal EDUs and 191 (45 %) with nominal EDUs. Although these proportions spoke in favor of our third hypothesis (section 3.2), the t-test showed no significant difference between the number of salient gestures used with verbal EDUs and the number used with nominal EDUs (t = -0.49, p = .63). At the same time, the Pearson correlation test demonstrated a strong positive correlation between the two groups (r = 0.9485, p = .000328), meaning that the higher the number of salient gestures used with nominal EDUs, the higher the number of those co-occurring with verbal EDUs, and vice versa.
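The two tests can be reproduced with SciPy’s `ttest_ind` and `pearsonr`. This is a sketch, not the authors’ script: the text does not state which t-test was used or what the observations were, so the example assumes one count per interpreter for each EDU type and an independent-samples test (`scipy.stats.ttest_rel` would be the paired alternative). The helper `pearson_p_from_r` is hypothetical; it recovers the two-sided p-value of r from t = r√(n−2)/√(1−r²) with n−2 degrees of freedom. With r = 0.9485 and n = 8 this gives p ≈ 0.00033, matching the reported value, which suggests (though the text does not say so) that the correlation was computed over eight per-interpreter counts.

```python
import math

from scipy import stats


def compare_edu_counts(verbal, nominal):
    """Independent-samples t-test and Pearson correlation on paired count lists."""
    t_res = stats.ttest_ind(verbal, nominal)
    r, p_r = stats.pearsonr(verbal, nominal)
    return {"t": float(t_res.statistic), "p_t": float(t_res.pvalue),
            "r": float(r), "p_r": float(p_r)}


def pearson_p_from_r(r, n):
    """Two-sided p-value for H0: rho = 0, via t = r*sqrt(n-2)/sqrt(1-r^2)."""
    t = r * math.sqrt(n - 2) / math.sqrt(1 - r * r)
    return 2 * stats.t.sf(abs(t), n - 2)
```

A call such as `compare_edu_counts([12, 30, 25, 18, 40, 22, 15, 28], [10, 26, 20, 15, 33, 20, 11, 24])` uses invented counts only to show the shape of the input. The two tests answer different questions: the t-test compares the mean numbers of gestures per EDU type, whereas the correlation asks whether interpreters who gesture more with one type also gesture more with the other, so a non-significant t and a strong r are not contradictory.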

On the basis of the qualitative data, we made some observations about the functions of salient gestures and their co-occurrence with verbal and nominal EDUs. The analysis showed that representational gestures (especially acting-out gestures) are often used with verbal EDUs containing verbs of physical action, including those with metaphorical meanings (перекусить машину – to bite through a car, переломать кость – to crush a bone, когда приходят и уходят различные виды – when various species come and go). Salient pragmatic gestures are synchronized with EDUs that appeal to the audience’s background knowledge or opinion (Ну, вы знаете тирекса – Well, you know T. rex) or express negation (не были найдены – were not found). Salient representational and pragmatic gestures often co-occur with nominal EDUs that refer to some extraordinary feature of an object (e.g., очень высокий уровень вымирания – a very high rate of extinction, each of these components, очень толстая птица – a very fat bird).

5. Conclusions

The aim of the study was to investigate how the salience of gestures manifests itself in a non-prototypical communicative activity, namely simultaneous interpretation. Given the secondary, off-stage, and mediated character of SI, one could expect interpreters to make limited use of salient gestures. At the same time, when we think about something and conceptualize it by linguistic means, we always foreground some aspects of a situation. As language and gesture are tightly linked, it was reasonable to suggest that simultaneous interpreters would use at least some salient gestures contributing to prominence in the discourse of SI. We hypothesized that, although salient gestures would be observed less frequently than non-salient ones, they would play a significant role in the iconic representation of entities and their properties. We also assumed that salient gestures, owing to their dynamic nature, would co-occur more often with EDUs containing verb phrases than with EDUs containing noun phrases.

The quantitative and qualitative analyses of SI elicited from eight interpreters (English as L2 → Russian as L1) confirmed the first assumption: non-salient gestures outnumber salient ones. However, almost a third of the gestures produced by the interpreters display such basic parameters of prominence as visible tension, complexity of the path, length in space, and long duration in time (each parameter was identified by comparison with the other gestures produced by the same interpreter). These parameters were often combined, with salience in the manner of movement (tenseness, complexity, and long duration) prevailing over the spatial parameter, which may be explained by the temporal and spatial constraints of SI.

As to the second hypothesis, the study showed that salient gestures most frequently perform pragmatic functions: addressing the imagined audience, expressing attitude, or emphasizing a point. The second hypothesis was therefore not confirmed; what nevertheless speaks in favour of representational gestures is that they accounted for almost 24 % of the salient gestures, compared with 2.6 % of the non-salient gestures. A two-way ANOVA showed that, although the difference in gesture frequencies between the salient and non-salient groups was not statistically significant, there was a statistically significant difference between gestures with different functions.
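The section reports a two-way ANOVA but not the layout of the data it was run on. As a minimal sketch, assuming a frequency table with gesture functions as rows, the salient and non-salient groups as columns, and a single count per cell (that is, a two-way ANOVA without replication), the F statistics for both factors could be computed as below. The function name and the counts in the usage example are hypothetical and do not reproduce the study's data.

```python
"""Two-way ANOVA without replication (one value per cell), pure Python.

Rows are one factor (here: gesture function), columns the other
(here: salient vs non-salient). The example counts are HYPOTHETICAL.
"""


def two_way_anova_no_replication(table):
    """Return F statistics and degrees of freedom for the row and column factors."""
    r = len(table)
    c = len(table[0]) if table else 0
    if r < 2 or c < 2 or any(len(row) != c for row in table):
        raise ValueError("need a rectangular table with at least 2 rows and 2 columns")

    grand_mean = sum(sum(row) for row in table) / (r * c)
    row_means = [sum(row) / c for row in table]
    col_means = [sum(row[j] for row in table) / r for j in range(c)]

    # Partition the total sum of squares into row, column, and residual parts.
    ss_rows = c * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = r * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in table for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    df_rows, df_cols = r - 1, c - 1
    df_error = df_rows * df_cols
    ms_error = ss_error / df_error
    if ms_error <= 0:
        raise ValueError("residual variance is zero; F is undefined")

    return {
        "F_rows": (ss_rows / df_rows) / ms_error,
        "F_cols": (ss_cols / df_cols) / ms_error,
        "df_rows": df_rows,
        "df_cols": df_cols,
        "df_error": df_error,
    }


if __name__ == "__main__":
    # HYPOTHETICAL counts: [salient, non-salient] per gesture function.
    counts = {
        "pragmatic": [40, 90],
        "representational": [20, 5],
        "deictic": [10, 25],
        "beat": [8, 30],
        "adapter": [6, 50],
    }
    result = two_way_anova_no_replication(list(counts.values()))
    print(f"function: F({result['df_rows']}, {result['df_error']}) = {result['F_rows']:.3f}")
    print(f"salience: F({result['df_cols']}, {result['df_error']}) = {result['F_cols']:.3f}")
```

The p-values would then be read off the F distribution with the corresponding degrees of freedom, for instance with `scipy.stats.f.sf(F, df_factor, df_error)`. With only one observation per cell, the residual term also absorbs any salience × function interaction, which is one reason such a design can miss an effect of salience.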

The third hypothesis, that salient gestures would co-occur more often with verbal EDUs than with nominal EDUs, was not statistically confirmed, although gestures with verbs amounted to 55 % of the salient gestures. The qualitative analysis allowed for preliminary observations about some semantic and pragmatic characteristics of the verbal and nominal EDUs accompanied by salient gestures. In particular, salient gestures seem to be “attracted” by verbs of physical action, in both their direct and metaphorical meanings, by verbs appealing to the audience’s background knowledge, and by negated verbs. With nominal EDUs, salient gestures co-occur with expressions denoting extraordinary features of objects and events (size, speed, etc.).
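Whether a 55 % share of verbal EDUs departs from an even split depends on the number of salient gestures, which this section does not restate, and the test behind the reported result is not named here. One simple option, assumed for illustration, is an exact two-sided binomial test against a 50/50 split; the sketch below implements it in plain Python, and the count of 100 salient gestures in the example is hypothetical.

```python
from math import comb


def binomial_two_sided_p(k, n, p0=0.5):
    """Exact two-sided binomial test of k successes in n trials against p0.

    The p-value sums the probabilities of all outcomes that are no more
    likely than the observed outcome k.
    """
    if not 0 <= k <= n:
        raise ValueError("k must lie between 0 and n")
    if not 0 < p0 < 1:
        raise ValueError("p0 must lie strictly between 0 and 1")
    # Probability of every possible count 0..n under the null hypothesis.
    pmf = [comb(n, i) * p0**i * (1 - p0) ** (n - i) for i in range(n + 1)]
    observed = pmf[k]
    # A small relative tolerance keeps exact ties despite floating-point noise.
    p_value = sum(q for q in pmf if q <= observed * (1 + 1e-7))
    return min(1.0, p_value)


if __name__ == "__main__":
    # HYPOTHETICAL: 55 of 100 salient gestures co-occur with verbal EDUs.
    print(f"p = {binomial_two_sided_p(55, 100):.4f}")
```

With the hypothetical 55 out of 100, the p-value is roughly 0.37, so a split of this size would be compatible with chance at that sample size.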

Despite the limitation of a small number of participants, the overall number of cases was large enough to yield interesting findings about the use of salient gestures in non-typical secondary interactions such as simultaneous interpreting. The frequency and functional variety of prominent gestures suggest that in SI salience is cross-linguistic and cross-modal, i.e., it is realized across languages and through the different means available to the speaker. This also supports the idea that salience is determined by the ways objects and events are construed in SI discourse, as well as by the ways an interpreter conceptualizes the context of the source text and, importantly, the speaker’s communicative behaviors. Research on salient gestures in SI will continue beyond this paper: for instance, the findings suggest that the interrelations between cross-modal salience in the source and the target discourse are worth investigating.

Acknowledgements

The research was carried out at Moscow State Linguistic University and supported by the Russian Science Foundation (Grant No. 19-18-00357).

References

Beattie, G., Webster, K. and Ross, J. (2010). The fixation and processing of the iconic gestures that accompany talk, Journal of Language and Social Psychology, 29 (2), 1-30. https://doi.org/10.1177/0261927X09359589 (In English)

Boutet, D. (2010). Structuration physiologique de la gestuelle: Modèle et tests [Physiological structuring of gesture: Model and tests], Lidil, 42, 77-96. https://doi.org/10.4000/lidil.3070 (In French)

Boutet, D., Morgenstern, A. and Cienki, A. (2016). Grammatical aspect and gesture in French: A kinesiological approach, Russian Journal of Linguistics, 20 (3), 132-151. (In English)

Bressem, J. (2013). A linguistic perspective on the notation of form features in gestures, in Müller, C., Cienki, A., Fricke, E., Ladewig, S., McNeill, D. and Teßendorf, S. (eds.), Body – Language – Communication: An international handbook on multimodality in human interaction, Vol. 1, Mouton de Gruyter, Berlin, Germany, 1079-1098. (In English)

Cavicchio, F. and Kita, S. (2013). Bilinguals switch gesture production parameters when they switch languages, Proceedings of the Tilburg Gesture Research Meeting (TIGeR 2013), Tilburg University, Netherlands, 305-309. (In English)

Cienki, A. (2013). Cognitive linguistics: Spoken language and gesture as expressions of conceptualization, in Müller, C., Cienki, A., Fricke, E., Ladewig, S., McNeill, D. and Teßendorf, S. (eds.), Body – Language – Communication: An international handbook on multimodality in human interaction, Vol. 1, Mouton de Gruyter, Berlin, Germany, 182-201. https://doi.org/10.1515/9783110261318.182 (In English)

Cienki, A. (2021). From the finger lift to the palm-up open hand when presenting a point: A methodological exploration of forms and functions, Languages and Modalities, 1 (1), 17-30. https://doi.org/10.3897/lamo.1.68914 (In English)

Cienki, A. and Iriskhanova, O. K. (2020). Patterns of multimodal behavior under cognitive load: An analysis of simultaneous interpretation from L2 to L1, Voprosy Kognitivnoy Lingvistiki, 1, 5-11. https://doi.org/10.20916/1812-3228-2020-1-5-11 (In English)

Cienki, A. and Mittelberg, I. (2013). Creativity in the forms and functions of spontaneous gesture with speech, in Veale, T., Feyaerts, K. and Forceville, C. (eds.), The Agile Mind: A Multi-disciplinary Study of a Multi-faceted Phenomenon, Mouton de Gruyter, Berlin, Germany, 231-252. (In English)

Cienki, A. and Müller, C. (2008). Metaphor, gesture and thought, in Gibbs, R. W. Jr. (ed.), The Cambridge Handbook of Metaphor and Thought, Cambridge University Press, Cambridge, UK, 484-501. (In English)

Eisenstein, J., Barzilay, R. and Davis, R. (2007). Turning lectures into comic books using linguistically salient gestures, Proceedings of the Twenty-Second AAAI Conference on Artificial Intelligence, Vancouver, British Columbia, Canada, 877-882. (In English)

Fedorova, O. V. and Zherdev, I. Ju. (2019). Follow the hands of the interlocutor! (On strategies for the distribution of visual attention), Experimental Psychology, 12 (1), 98-118. https://doi.org/10.17759/exppsy.2019120108 (In English)

Gile, D. (1997). Conference interpreting as a cognitive management problem, in Danks, J. H., Fountain, S. B., McBeath, M. K. and Shreve, G. M. (eds.), Cognitive Processes in Translation and Interpreting, Sage Publications, Thousand Oaks, USA, 196-214. (In English)

Givón, T. (1985). Iconicity, isomorphism, and non-arbitrary coding in syntax, in Haiman, J. (ed.), Iconicity in Syntax, Benjamins, Amsterdam, The Netherlands, 187-219. (In English)

Grishina, E. A. (2017). Russkaya zhestikulyaciya s lingvisticheskoj tochki zreniya (korpusnye issledovaniya) [Russian gesticulation from a linguistic point of view: Corpus studies], Yazyki slavyanskoj kul'tury, Izdatel'skij Dom YASK, Moscow, Russia. (In Russian)

Gullberg, M. and Holmqvist, K. (1999). Keeping an eye on gestures: Visual perception of gestures in face-to-face communication, Pragmatics & Cognition, 7 (1), 35-63. https://doi.org/10.1075/pc.7.1.04gul (In English)

Gullberg, M. and Holmqvist, K. (2002). Visual attention towards gestures in face-to-face interaction vs. on screen, in Wachsmuth, I. and Sowa, T. (eds.), Gesture and Sign Language Based Human-Computer Interaction, Springer-Verlag, Berlin, Germany, 206-214. (In English)

Haiman, J. (1985). Natural Syntax, Cambridge University Press, Cambridge, UK. (In English)

Iriskhanova, O. K. and Cienki, A. (2018). The semiotics of gestures in cognitive linguistics: Contributions and challenges, Voprosy Kognitivnoy Lingvistiki, 4, 25-36. https://doi.org/10.20916/1812-3228-2018-4-25-36 (In English)

Kendon, A. (2004). Gesture: Visible action as utterance, Cambridge University Press, Cambridge, UK. (In English)

Kibrik, A. A. and Podlesskaya, V. I. (eds.) (2009). Rasskazy o snovideniyakh: korpusnoye issledovaniye ustnogo russkogo diskursa [Night dream stories: A corpus study of Russian spoken discourse], Yazyki slavyanskoj kul'tury, Moscow, Russia. (In Russian)

Langacker, R. (2000). Grammar and Conceptualization, Mouton de Gruyter, Berlin, Germany. (In English)

Marian, V., Blumenfeld, H. K. and Kaushanskaya, M. (2007). The Language Experience and Proficiency Questionnaire (LEAP-Q): Assessing language profiles in bilinguals and multilinguals, Journal of Speech, Language, and Hearing Research, 50 (4), 940-967. https://doi.org/10.1044/1092-4388(2007/067) (In English)

McNeill, D. (1992). Hand and mind: What gestures reveal about thought, University of Chicago Press, Chicago, USA. (In English)

McNeill, D. (2005). Gesture and thought, University of Chicago Press, Chicago, USA. (In English)

Müller, C. (1998). Redebegleitende Gesten. Kulturgeschichte – Theorie – Sprachvergleich [Speech-accompanying gestures. Cultural history – theory – language comparison], Berlin Verlag, Berlin, Germany. (In German)

Müller, C. (2016). From mimesis to meaning: A systematics of gestural mimesis for concrete and abstract referential gestures, in Zlatev, J., Sonesson, G. and Konderak, P. (eds.), Meaning, mind and communication: Explorations in cognitive semiotics, Peter Lang, Frankfurt am Main, Germany. (In English)

Müller, C. and Tag, S. (2010). The dynamics of metaphor: Foregrounding and activating metaphoricity in conversational interaction, Cognitive Semiotics, 10 (6), 85-120. https://doi.org/10.1515/cogsem.2010.6.spring2010.85 (In English)

O’Connor, M. and Cienki, A. (2022). The materiality of lines: The kinaesthetics of bodily movement uniting dance and prehistoric cave art, Frontiers in Communication, 7. https://doi.org/10.3389/fcomm.2022.956967 (In English)

Oakley, T. (2009). From attention to meaning: Explorations in semiotics, linguistics, and rhetoric, Peter Lang, Bern, Switzerland. (In English)

Oben, B. and Brône, G. (2015). What you see is what you do: On the relationship between gaze and gesture in multimodal alignment, Language and Cognition, 7 (4), 546-562. https://doi.org/10.1017/langcog.2015.22 (In English)

Seeber, K. G. (2013). Cognitive load in simultaneous interpreting, Target, 25 (1), 18-32. https://doi.org/10.1075/target.25.1.03see (In English)

Stachowiak-Szymczak, K. (2019). Eye movements and gestures in simultaneous and consecutive interpreting, Springer, Basel, Switzerland. (In English)

Sweetser, E. (2007). Looking at space to study mental spaces: Co-speech gesture as a crucial data source in cognitive linguistics, in Gonzalez-Marquez, M., Mittelberg, I., Coulson, S. and Spivey, M. J. (eds.), Methods in Cognitive Linguistics, John Benjamins, Amsterdam, The Netherlands, 201-224. (In English)

Talmy, L. (1978). Figure and ground in complex sentences, in Greenberg, J. (ed.), Universals of Human Language, Stanford University Press, Stanford, USA, 625-649. (In English)

Tellier, M., Stam, G. and Ghio, A. (2021). Handling language: How future language teachers adapt their gestures to their interlocutor, Gesture, 20 (1), 30-62. https://doi.org/10.1075/gest.19031.tel (In English)

Wagner, P., Malisz, Z. and Kopp, S. (2014). Gesture and speech in interaction: An overview, Speech Communication, 57, 209-232. https://doi.org/10.1016/j.specom.2013.09.008 (In English)