Cognitive Engagement in Scientific Writing: Empirical Evidence on Variability in the Perception of Scientific Data

Abstract

This study examines the effect of active and passive voice on the comprehension of research articles by readers with varying levels of language proficiency. The work addresses a gap in knowledge about how language style can influence the interpretation of scientific data. The methodology included a pre-test survey, a reading comprehension task (multiple-choice, open-ended, and short-answer recall questions), and a post-test survey. Fifty participants were evenly divided between two groups: respondents in the first group read texts in the active voice (AV), and those in the second group read texts in the passive voice (PV). The analysis used descriptive statistics and covered actual comprehension of the text, perceived understanding, satisfaction with the reading experience, perceived credibility of the material, and preferences regarding writing style. According to the results, participants in the AV group showed higher overall comprehension, particularly on short-answer recall questions, where their scores were significantly higher. They also reported higher perceived understanding and greater satisfaction with the reading experience. Participants in the PV group, by contrast, rated the content of the article as more credible. Based on these findings, the authors formulate three key recommendations: use the active voice to improve comprehension of complex descriptions; use the active voice to improve retention of specific, fact-based information; and use the passive voice to increase perceived credibility. The study is limited by its small sample size and by the use of only one article per participant group, which may affect how far the results apply to other texts.
The findings are of interest to researchers, editors, and the scientific community at large, underscoring the importance of balancing active and passive voice in scientific texts to ensure their clarity and accessibility.

Keywords: active voice, passive voice, research article, scientific text, scientific writing, cognitive engagement


1. INTRODUCTION

Scientific communication is an essential aspect of advancing knowledge in various fields, enabling researchers to share their findings with peers, policymakers, and the general public. The effective communication of research results is therefore indispensable for the advancement of knowledge and the formulation of evidence-based decisions and policies.

One of the primary components of scientific writing, and a subject of ongoing debate, is the use of voice – whether active or passive. As discussed in Wanner’s (2009) study, the use of voice in scientific writing is far more than just a grammatical choice. It directly influences the clarity and overall comprehensibility of the text, which has led academia to debate the use of active versus passive voice in scientific texts, with proponents on both sides.

Ferreira (2021) asserts that passive voice has been widely criticized for resulting in dense, indirect, and evasive writing, but contends that this voice is actually a valuable and grammatically correct tool that writers should use, debunking several of the misconceptions associated with it. On the other hand, Leong (2020) and Inzunza (2020) support the use of the active voice, claiming it offers clarity and conciseness in scientific writing. They point out the preference towards it on the part of major scientific journals and claim that the active voice trend is now pervasive in scientific literature. Notwithstanding, they also reflect on its weaknesses, such as its potential to sound colloquial and unsophisticated. Meanwhile, Minton (2015) and Hudson (2013) call for a more balanced use of both voices. Minton (2015) argues that while the passive voice may be less clear, less direct, and less concise, it has its own utility and appropriateness in certain contexts. Hudson (2013) similarly suggests that both voices have their place in scientific writing, underlining the ongoing dispute in the scientific community regarding the role of voice in technical writing.

Previous studies have predominantly focused on scrutinizing the use of voices in different academic disciplines (e.g., Solomon et al., 2022), as part of readability formulas (e.g., Plavén-Sigray et al., 2017; Bailin and Grafstein, 2001), or in terms of the diachronic assessment of their application in scientific writing (e.g., Leong, 2020). To add to this conversation, the present study assesses the specific effects of active versus passive voice on research article comprehension among readers with varying language proficiency levels. To this end, it draws on a participant pool with a range of linguistic skills, which offers a new viewpoint on the voice debate. Additionally, the study advances the systematization of findings pertaining to understanding, recall, and retention of both general concepts and specific information.

In order to achieve these goals, the current study also takes into account how various grammatical decisions may affect cognitive engagement, which is a term used to describe the mental effort a person puts into processing, interpreting, and integrating information. We interpret engagement as distinct forms of involvement with the text, such as attention, elaboration, and recall, rather than regarding it as a single, observable outcome. These aspects are pertinent to the study’s investigation since they are incorporated into the comprehension tasks’ design.

To ensure that the results could be applied more broadly, the study concentrated exclusively on English-language research. Since English is widely used in scientific research worldwide, the study’s conclusions are more likely to be relevant to a wide range of researchers, academic institutions, and publishers. Examining how active and passive voice affect comprehension and memory in English-language research articles is especially important for non-native speakers, who might have more difficulty understanding unfamiliar or complex language.

2. THEORETICAL BACKGROUND

The discourse surrounding the active versus passive voice in scientific writing requires a close examination of arguments that drive preferences for one or the other.

The utility of active voice in scientific writing is widely supported by numerous studies with the active voice trend attributed partially to an emerging interdisciplinary field known as “plain language studies” that focuses on making written and spoken communication accessible and understandable to the general public. The field is characterized by a collaborative approach, where individuals from diverse fields such as linguistics, psychology, law, education, and communication come together to promote plain language. The goal of plain language studies is to eliminate language barriers that prevent people from accessing information they need to make informed decisions. This includes documents such as legal contracts, government forms, medical instructions, and financial disclosures, which, if made easier to comprehend, can help reduce confusion, misunderstandings, and errors. Plain language studies involve the development of plain language guidelines, professional standards, and accreditation programs providing a framework for writers and editors to create clear and understandable documents that meet the needs of their readership.

Research on plain language in scientific communication typically focuses on four key areas.

1. The favorable impact of plain language on public engagement with science, with studies indicating that people are more willing to read and share a science-related news article written in plain language than one written in technical language (see Kerwer et al., 2021).

2. The favorable impact of plain language on health literacy, in that it makes health information more accessible and understandable to patients. In support of this idea, Zarcadoolas (2011) reported that using plain language in patient education materials improves patients’ understanding of their health conditions and treatment options.

3. The important role of plain language in science communication during crisis events, such as natural disasters or disease outbreaks, which call for quick and accurate communication of information to the public. Studies in this area have found that using plain language in crisis communication is associated with increased trust and understanding among the public (see Temnikova et al., 2015).

4. The impact of cultural and linguistic differences on plain language communication, with considerations suggesting that the effectiveness of plain language communication may vary across different cultural and linguistic contexts (Tamimy et al., 2022). For example, a study by Yousef et al. (2014) was able to show that cultural background was a factor in reading comprehension, with some groups benefiting more than others.

In the context of scientific communication, the trend towards the use of active voice as a criterion of plain language in scientific writing has gained momentum over the years, with various studies advocating for its use owing to its directness, clarity, brevity, and evidenced propensity to increase comprehension of research findings. For example, a study by Stoll et al. (2022) found that plain language summaries, which rely on active rather than passive voice among other features, were more effective in promoting comprehension than abstracts written in even slightly more technical language. The same was found to be true for the retention and recall of information: Kaphingst et al. (2012) singled out the use of active voice as a key element of plain language summaries of cancer-related research articles and showed that these were more effective in promoting retention among cancer patients.

Tarone et al. (1998) explored logical argument papers, whose unique rhetorical structures give the active voice a central role. The authors proposed that within this structure, the use of “we” indicates the author’s procedural choice, distinguishing it from the established or standard procedures usually conveyed through the passive voice. “We” plus an active verb is also used to describe the author’s own work, providing a contrast to the work of others, which is typically described in the passive voice. However, when the work of others is not being contrasted with the author’s work, the active voice is used. The study suggests that these uses of active voice extend to papers in the majority of fields, particularly those where the subject matter does not lend itself to experimentation. The authors propose that fields that frame their papers as logical arguments can find the active voice just as applicable and beneficial. Additionally, they review evidence suggesting that the use of active voice in scientific papers is not limited to English, noting that papers written in Russian appear to use the equivalent of active and passive voice in a similar way. This indicates a potential universality in the application of active voice in scientific writing across languages.

Cheung and Lau (2020) examine the use of active voice in scientific writing across various disciplines and focus on the deployment of first-person pronouns, a prominent feature of the active voice, in establishing an authorial voice and bolstering arguments. Examining expert writers from the fields of Literature and Computer Science, Cheung and Lau (2020) hypothesize a varying degree of first-person pronoun use. They posit that Literature writers, in the absence of objective facts, frequently use first-person pronouns and assume stronger authorial roles to build credibility and persuade readers. Meanwhile, they suggest that Computer Science writers conventionally shun the use of first-person pronouns, aligning with traditional norms in the hard sciences. The researchers’ findings challenge this general dichotomy in pronoun usage between hard and soft sciences and suggest that the conventional wisdom of avoiding first-person pronouns in hard sciences like Computer Science may not apply universally. In essence, the use of active voice, characterized by first-person pronouns, is not strictly confined to a specific scientific genre or discipline. These findings testify to the importance of the active voice in scientific writing, not as a matter of stylistic preference, but as a vital tool for building credibility and persuading readers (Tikhonova and Mezentseva, 2024).

In the study titled “How passive voice weakens your scholarly argument”, Sigel (2009) provides compelling arguments on how the use of active voice strengthens scholarly argumentation and contributes to clarity in scientific writing. Drawing on his 12 years of experience in academic publishing, Sigel (2009) suggests that by avoiding passive constructions in scientific writing, scholars can demonstrate a more comprehensive understanding of the material, with the underlined focus on precision. The author emphasizes the need for scholars to use active voice in their scientific writing while acknowledging that there can be appropriate contexts for using its counterpart.

Thus, a host of research works lean in favor of the active voice in scientific writing. They provide evidence-based arguments that active voice enhances clarity, increases comprehension, promotes better retention of information, and even fosters a sense of engagement between authors and readers. While it is not a one-size-fits-all solution, these studies point to the potential benefits of using active voice strategically in research writing to improve the accessibility and impact of scientific findings.

Yet, despite the potential benefits of giving preference to active voice to support plainer language in scientific communication, there are barriers to its overwhelming adoption, including the perceived need for technical language to establish credibility and expertise, as well as a perception that simpler scientific narratives may oversimplify research findings, leading to misinterpretations. The role and place of complex language structures in scientific communication – such as complex syntax, use of passive voice, nominalization, and jargon – have been extensively studied to identify their contribution to varying degrees of complexity, as well as their implications (see Leskelä et al., 2022; Turfler, 2015; Bonsall et al., 2017; Schriver, 2014; Akopova, 2023; Balashov et al., 2021). Other topics of inquiry include lexical bundles and vocabulary, genre analysis, rhetorical moves, etc.

In this vein, scholars are coming up with arguments supporting the use of passive voice despite the increasing push for active voice. For example, Ferreira (2021) makes a strong defense for the passive voice, arguing that it provides a means to maintain topic continuity, accommodate accessible concepts, and avoid distorting the author’s message that might occur with active sentence paraphrases. The author also asserts that the guidelines discouraging passive sentences might lead to confusion, as many individuals struggle to correctly identify them.

The study by Leong (2020) indicates a historical prevalence of the passive voice, as it notes an increase in its use from the 17th to the 20th century. While this study found a decline in passive voice use in the modern era, the stability of its use from 1880 to 1980 demonstrates its long-standing relevance in scientific communication.

Inzunza (2020), though advocating for the active voice, acknowledges that the passive voice can contribute to a sense of objectivity in scientific writing, centering on the actions rather than the individuals. This demonstrates the role of passive voice in depersonalizing scientific discourse, putting an emphasis on the process or results over the actors.

Minton’s (2015) study refutes the common arguments against the passive voice, contending that in certain contexts passive voice usage is more appropriate than active voice. According to Minton (2015), decisions regarding voice selection often come down to the order of words in a sentence, with the “old” information typically taking the subject position and “new” information following, a pattern that often aligns with passive constructions.

Wanner’s (2009) book exposes the significant role of the passive voice in shaping scientific discourse. The work further explores how changes in scientific rhetoric have led to the emergence of active voice constructions that compete with the passive without having a more visible agent, which indicates the fluid nature of voice use in scientific writing.

Ding (2002) presents a compelling perspective that the use of the passive voice in scientific writing reflects the social values of the scientific community. As passive constructions focus on objects, methods, or results rather than individuals, they can de-emphasize the discrete nature of experiments and lay the ground for a cooperative enterprise among scientists. The author posits that the use of the passive voice is more than a personal stylistic choice, but rather a reflection of the professional practices of the scientific community.

The ongoing debate on the use of active and passive voice also logically encompasses arguments for a balanced approach. The evolution towards a balanced approach to active and passive voice usage in scientific writing is the focus of a study by Staples et al. (2016). In their extensive corpus-based analysis of scientific writing in different disciplines, the researchers contend that the traditional dichotomy between active and passive voice is oversimplified. They argue that the effective use of voice in scientific writing is not merely about choosing between active or passive, but rather about deploying a combination of active and passive voice purposefully depending on the rhetorical context and intent.

Hudson’s (2013) analysis provides an exploration of the “technical voice” in scientific writing, which appears to be a contentious term that embodies the persisting discord over the role of voice in technical writing, both grammatically and idiosyncratically. He states that many literary critics and English usage experts favor active voice due to its directness, vigor, and conciseness. This is also concurred upon by many proponents of concise writing in the scientific community. However, the consensus usually accompanies a caveat, suggesting authors should use passive voice in experimental sections to portray objectivity. In Hudson’s (2013) perspective, the “technical voice” seems to be an amalgamation of the active and passive voices, an “impossible combination” where the author strives for conciseness without employing the first-person pronouns. This hints at the complexities surrounding voice in scientific writing, where authors often juggle between the need for clarity (active voice) and the desire for objectivity (passive voice).

Erdemir (2013) provides a practical viewpoint on the use of voice in the materials and methods section of scientific articles, asserting that it can be written in either active or passive voice in the past tense, bringing to the fore the need for “reproducible results”. The need to balance active voice with passive voice, particularly in certain sections of scientific articles such as the materials and methods, attests to the contextual nature of voice in scientific writing.

Some of the works cited above address rhetorical structure and academic writing conventions. These serve primarily to contextualize the role of voice in scientific discourse. The present study, however, focuses on the cognitive perspective to show how grammatical voice influences reader comprehension and recall.

To summarize, the use of active voice in scientific writing is widely supported for its contribution to clarity and directness. However, the discussion surrounding “technical voice” and the balancing act between active and passive voice suggests that the use of voice in scientific writing is far from being monolithic. It instead entails a strategic use of both voices depending on the context, the section of the research article, and the aim of communication. As our study will further suggest, it is also imperative to consider the audience, their language proficiency, and probable reader perceptions when using simplified vs complex language in scientific communication, which we intend to address in detail.

In addition, the present study touches upon the cognitive plane of reading scientific prose. Specifically, it draws attention to the notion of cognitive engagement, which is understood here as a mode of processing evidenced through performance in comprehension, recall, and summarization tasks. Hence, cognitive engagement is viewed as part of the task structure. Our approach is informed by the ICAP framework (Chi & Wylie, 2014), which distinguishes between passive, active, constructive, and interactive forms of engagement depending on the reader’s behavioral and cognitive involvement with the material. In this typology, multiple-choice questions typically correspond to passive or minimally active processing, whereas open-ended summaries and short-answer recall tasks engage higher-order operations such as synthesis, reorganization, and targeted retrieval. By distributing task types, we aim to observe indirectly how voice construction may affect the level of engagement with scientific texts, particularly among readers with varying linguistic backgrounds.

3. MATERIALS AND METHODS

3.1. Participants

The methodology for this study centered on a respondent survey. Participants aged 18 to 30 were recruited from among students of a large higher education institution and included respondents with a range of English language proficiency levels, both native and non-native English speakers, to improve the applicability of the results to a broader audience. Group composition was balanced with respect to age range, gender, and academic specialization. This research design aimed to represent a diverse population and a variety of language backgrounds, reflecting the readership of research articles.

Fifty respondents were randomly assigned to either the active voice (AV) or passive voice (PV) group and provided with access to their assigned version of a research article. To be eligible for the study, participants had to have a knowledge of English, have no history of language or cognitive impairments, and be at least 18 years old.

3.2. Materials

The study employed two versions (AV vs PV) of a research article, each focusing on the effects of caffeine on cognitive performance, a topic of frequent investigation in the fields of cognitive and nutritional science. The articles were approximately 500 words long, with similar content and structure.

The article used as stimulus material in this study was adapted from existing literature and rewritten to control for length, structure, and comparability between the active and passive voice versions. The resulting texts were standardized in terms of topic, vocabulary, and syntactic complexity, and were not taken verbatim from any single published source.

The passive voice version of the article was initially drafted, after which an active voice version was produced by systematically converting passive constructions into active ones, while preserving semantic content, clause structure, and information sequence.

The complexity of the articles, both in terms of vocabulary and sentence structure, was intentional. Since scientific literature routinely demands a certain level of technical language and complex structures to precisely convey experimental methodology, data interpretation, and subsequent conclusions, these articles were designed to reflect the kind of texts that individuals often encounter in real-world scientific literature. This approach aimed to provide a more accurate measurement of the effects of active and passive voice on understanding in an applied context.

Moreover, the complexity level of the articles was carefully managed. Both articles were designed to be of similar difficulty, utilizing scientific terminology and complex structures common in such texts, without becoming excessively convoluted or inaccessible. This was confirmed through pre-tests with a small group of individuals, to ensure equivalent difficulty and readability between the two versions. The assessment of complexity during the pre-test phase relied on participant feedback regarding perceived difficulty as well as checks for equivalent comprehension scores across both versions.

3.3. Procedure

Pre-test survey. Participants completed a pre-test survey that measured their level of language proficiency and general reading ability. Participants were asked to self-report their English proficiency using standard categories (beginner, intermediate, advanced, native), and these self-assessments were verified against institutional academic records, specifically participants’ most recent English language course grades.

Reading. Participants were given access to their assigned version of the article and were instructed to read the article carefully. To ensure a fair protocol, where all participants are given equal opportunities as well as placed under equal constraints, a time limit was set for this part of the procedure. Since the optimal reading speed for comprehension is about 200-300 words per minute (Brysbaert, 2019), a time limit of 2 to 3 minutes could be appropriate for participants to read and comprehend a 500-word article fully. However, individual reading speeds may vary, and some participants may require more or less time to complete the task, especially considering the different levels of language proficiency among the participants. To account for this and to provide the participants with the opportunity to re-read the article for clarity, the time limit was set at 8 minutes. The participants were offered the option to stop reading once they felt they had fully comprehended the article. This helped ensure that they were not rushed and could take their time to fully understand the content.
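The reading-time reasoning above can be checked with a short calculation. The following is a minimal sketch rather than part of the study's procedure; the function name `reading_time_minutes` is hypothetical, and the only inputs are the figures stated in the text (a 500-word article, 200-300 words per minute, an 8-minute limit).

```python
def reading_time_minutes(word_count: int, words_per_minute: float) -> float:
    """Return the time in minutes needed to read word_count words at a given speed."""
    if words_per_minute <= 0:
        raise ValueError("words_per_minute must be positive")
    return word_count / words_per_minute


ARTICLE_WORDS = 500             # approximate article length stated in the text
SLOW_WPM, FAST_WPM = 200, 300   # reading-speed range cited from Brysbaert (2019)
TIME_LIMIT_MIN = 8              # time limit set in the study

# Time for a single pass at the slow and fast ends of the range.
slowest_pass = reading_time_minutes(ARTICLE_WORDS, SLOW_WPM)
fastest_pass = reading_time_minutes(ARTICLE_WORDS, FAST_WPM)

# Number of passes that fit into the time limit at the slow end of the range.
passes_at_slow_speed = TIME_LIMIT_MIN / slowest_pass

print(f"One pass: {fastest_pass:.2f} to {slowest_pass:.2f} minutes")
print(f"Passes within the limit at {SLOW_WPM} wpm: {passes_at_slow_speed:.1f}")
```

Under these figures a single pass takes roughly 1.7 to 2.5 minutes, which the text rounds to 2 to 3 minutes, so the 8-minute limit leaves room for about three passes even at the slower speed.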

Comprehension tasks. After reading the article, participants were asked to complete a series of tasks related to the content of the article. These tasks included multiple-choice comprehension questions, open-ended questions requiring them to summarize the main points of the article, and short-answer recall questions.

The purpose of multiple-choice questions in this study is to provide a standardized and structured way to assess participants’ general comprehension of article content. The benefit of multiple-choice questions is that they provide a more objective way of evaluating comprehension and can be scored more easily and efficiently than open-ended or short-answer recall questions. Additionally, multiple-choice questions served as a warm-up for participants, allowing them to engage with the article’s content and assess their level of comprehension before moving on to more complex tasks such as open-ended or short-answer recall questions.

Open-ended questions were designed to test participants’ ability to summarize the main points of the article in their own words. These questions were broader and didn’t have a specific answer. The purpose of these questions was to measure participants’ ability to understand and retain the key concepts presented in the article.

Short-answer recall questions, on the other hand, were designed to test participants’ memory of specific details from the article. These questions were more focused and required a specific answer, such as a name, a date, a figure, or a fact. The purpose of these questions was to measure participants’ ability to recall specific information from the article.

Post-test survey. Participants completed a post-test survey that measured their perceived level of understanding of the article, their overall satisfaction with the reading experience, the perceived credibility of article content, and their preference for the language style in scientific writing in general. This type of data was gathered to complement the objective measures of comprehension and retention. The questions in the post-test survey can illuminate relevant perceptions, which can help contextualize the results of the comprehension tasks.

3.4. Data analysis

Following from the established study procedure, three sets of data were eligible for the analysis: (1) pre-test survey data – language proficiency level and general reading ability; (2) comprehension task data – scores on multiple-choice comprehension questions, open-ended questions, and short-answer recall questions; (3) post-test survey data – responses to questions on perceived level of understanding, overall satisfaction with the reading experience, perceived credibility of article content, and preference for language style.

Pre-test survey data on language proficiency level were analyzed using descriptive statistics to describe the participant pool in terms of the distribution of proficiency levels in each group, expressed as counts per category (beginner N, intermediate N, advanced N, native N). General reading ability was assessed using the Nelson-Denny Reading Test, which measures vocabulary and comprehension skills and has established norms for different age groups.

To analyze the answers to the ten multiple-choice comprehension questions, we calculated the percentages, means and standard deviations of correct responses for each group followed by a t-test analysis to determine if there was a statistically significant difference.
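The comparison described above can be sketched as follows. The text does not say which t-test variant was used, so this example assumes an independent two-sample Student's t-test with pooled variance; the function names and the score lists are hypothetical placeholders, not study data.

```python
from math import sqrt
from statistics import mean, stdev, variance


def summarize(scores, max_score=10):
    """Return percentage correct, mean, and sample standard deviation for one group."""
    return {
        "percent_correct": 100 * sum(scores) / (len(scores) * max_score),
        "mean": mean(scores),
        "sd": stdev(scores),
    }


def students_t(group_a, group_b):
    """Return (t, degrees_of_freedom) for a pooled-variance two-sample t-test."""
    n_a, n_b = len(group_a), len(group_b)
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / std_err, n_a + n_b - 2


# Hypothetical scores out of 10 (illustrative only, NOT data from the study).
av_scores = [8, 7, 9, 6, 8]
pv_scores = [6, 7, 5, 7, 6]

av_summary = summarize(av_scores)
pv_summary = summarize(pv_scores)
t_stat, dof = students_t(av_scores, pv_scores)
print(av_summary, pv_summary, round(t_stat, 3), dof)
```

The t statistic and degrees of freedom would then be compared against the t distribution to obtain a p-value; a statistics library such as SciPy (`scipy.stats.ttest_ind`) performs both steps in one call.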

For the six open-ended questions, we used a coding system to sort the responses into categories. Two independent coders were assigned to each response and coded the responses based on pre-identified categories. Any disagreements were resolved through discussion and consensus. Once the coding was completed, the data were analyzed using descriptive statistics to identify the most frequently occurring categories in the responses, and a t-test was used to compare the two groups. The following codes were used: (1) accurate understanding – the response accurately reflects the main points and ideas presented in the article; (2) partial understanding – the response reflects some but not all of the main points and ideas presented in the article; (3) misunderstanding – the response misinterprets or misrepresents the main points and ideas presented in the article; (4) personal reflection – the response shares a personal opinion or reaction to the content of the article, but does not necessarily demonstrate comprehension of the article itself; (5) off-topic – the response does not address the content of the article at all; (6) other – any other category that may emerge from the data and reflects a distinct type of response.
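The double-coding workflow described above might be organized as in the sketch below. It is only an illustration: the helper names and the example responses are hypothetical, and the consensus step is represented by a manually supplied resolution because the discussion itself cannot be automated.

```python
from collections import Counter

# The six codes listed in the text.
CODES = (
    "accurate understanding",
    "partial understanding",
    "misunderstanding",
    "personal reflection",
    "off-topic",
    "other",
)


def find_disagreements(coder_1, coder_2):
    """Return the indices of responses to which the two coders assigned different codes."""
    if len(coder_1) != len(coder_2):
        raise ValueError("Both coders must code the same set of responses")
    return [i for i, (a, b) in enumerate(zip(coder_1, coder_2)) if a != b]


def code_frequencies(final_codes):
    """Count how often each code occurs, including codes that were never used."""
    counts = Counter(final_codes)
    return {code: counts.get(code, 0) for code in CODES}


# Hypothetical codes for four responses (illustrative only, NOT study data).
coder_1 = ["accurate understanding", "partial understanding", "off-topic", "misunderstanding"]
coder_2 = ["accurate understanding", "misunderstanding", "off-topic", "misunderstanding"]

disagreements = find_disagreements(coder_1, coder_2)

# Consensus codes agreed in discussion, keyed by response index (hypothetical).
resolutions = {1: "partial understanding"}
final_codes = [resolutions.get(i, code) for i, code in enumerate(coder_1)]

frequencies = code_frequencies(final_codes)
print(disagreements, frequencies)
```

Keeping unused codes in the frequency table with a count of zero makes the two groups' tables directly comparable.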

For the ten short-answer recall questions, we analyzed the responses by scoring each answer as either correct or incorrect. The percentages, means and standard deviations of correct answers for each group were then calculated, followed by a t-test analysis to reveal statistically significant difference, if any.

To analyze the post-test survey data, we summarized the responses to the Likert scale questions. Each question was analyzed separately, and the results were reported in terms of the frequency of responses for each scale point.

To measure the perceived level of understanding, we asked participants to rate their level of understanding of the article on a 5-point Likert scale (1 = very poor, 2 = poor, 3 = fair, 4 = good, 5 = very good).

To measure overall satisfaction with the reading experience, we asked participants to rate their level of satisfaction with the article on a 5-point Likert scale (1 = very dissatisfied, 2 = dissatisfied, 3 = neutral, 4 = satisfied, 5 = very satisfied).

To measure perceived credibility of article content, we asked participants to rate the level of its credibility on a 5-point Likert scale (1 = highly lacking in credibility, 2 = lacking in credibility, 3 = fairly credible, 4 = credible, 5 = very credible).

To measure preference for language style, we asked participants to rate their preference for either the active voice or passive voice on a 5-point Likert scale (1 = strongly prefer active voice, 2 = prefer active voice, 3 = no preference, 4 = prefer passive voice, 5 = strongly prefer passive voice). This question was not necessarily linked to the respondents’ experience participating in the present study, but rather to their general personal experience of reading scientific research.

4.RESULTS

4.1.Pre-test survey data results

The pre-test survey provided data on the language proficiency levels and general reading abilities of the participants in the study. A total of 50 participants were recruited and assigned to either the AV or PV group, with 25 participants in each group.

Language proficiency levels were self-reported by the participants and verified against their English class academic records. The distribution of language proficiency levels among the 50 participants was as follows: beginner N=8 (16%), intermediate N=26 (52%), advanced N=14 (28%), native N=2 (4%). The participants at each proficiency level were then split equally between the two voice groups (beginner N=4/4, intermediate N=13/13, advanced N=7/7, native N=1/1), ensuring a balanced distribution of language proficiency levels between the two groups. This balance allows for a fair comparison of the potential influence of language proficiency on the comprehension of research articles for both groups.
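The balanced allocation can be sketched as a stratified split. The text does not state how participants were allocated within each proficiency level, so the random shuffle below is an assumption, and the participant identifiers and function name are hypothetical. Only the level sizes come from the study.

```python
import random


def assign_balanced(participants_by_level, seed=0):
    """Split every proficiency level evenly between the AV and PV groups."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    groups = {"AV": [], "PV": []}
    for level, ids in participants_by_level.items():
        shuffled = list(ids)
        rng.shuffle(shuffled)  # assumption: allocation within a level is random
        half = len(shuffled) // 2
        groups["AV"] += [(pid, level) for pid in shuffled[:half]]
        groups["PV"] += [(pid, level) for pid in shuffled[half:]]
    return groups


# Level sizes as reported in the text; the IDs themselves are made up.
level_sizes = {"beginner": 8, "intermediate": 26, "advanced": 14, "native": 2}
pool = {
    level: [f"{level}-{i}" for i in range(1, n + 1)]
    for level, n in level_sizes.items()
}

assignment = assign_balanced(pool)
for group_name, members in assignment.items():
    per_level = {lvl: sum(1 for _, l in members if l == lvl) for lvl in level_sizes}
    print(group_name, len(members), per_level)
```

Because every level has an even number of participants, each group receives exactly half of each level, which reproduces the 4/13/7/1 split.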

For general reading ability, based on the pre-test survey data using the Nelson-Denny Reading Test, the mean score for the 50 participants in the study was 63.4, with a standard deviation of 6.5. The scores ranged from 50.1 to 90.1, with two native speakers scoring above 80. Since scores on the Nelson-Denny Reading Test are typically standardized to a mean of 50 and a standard deviation of 10, an average score of 63.4 indicates that the participants in this study scored above average on the reading test, with some variability in scores among the group.

4.2.Comprehension task data results

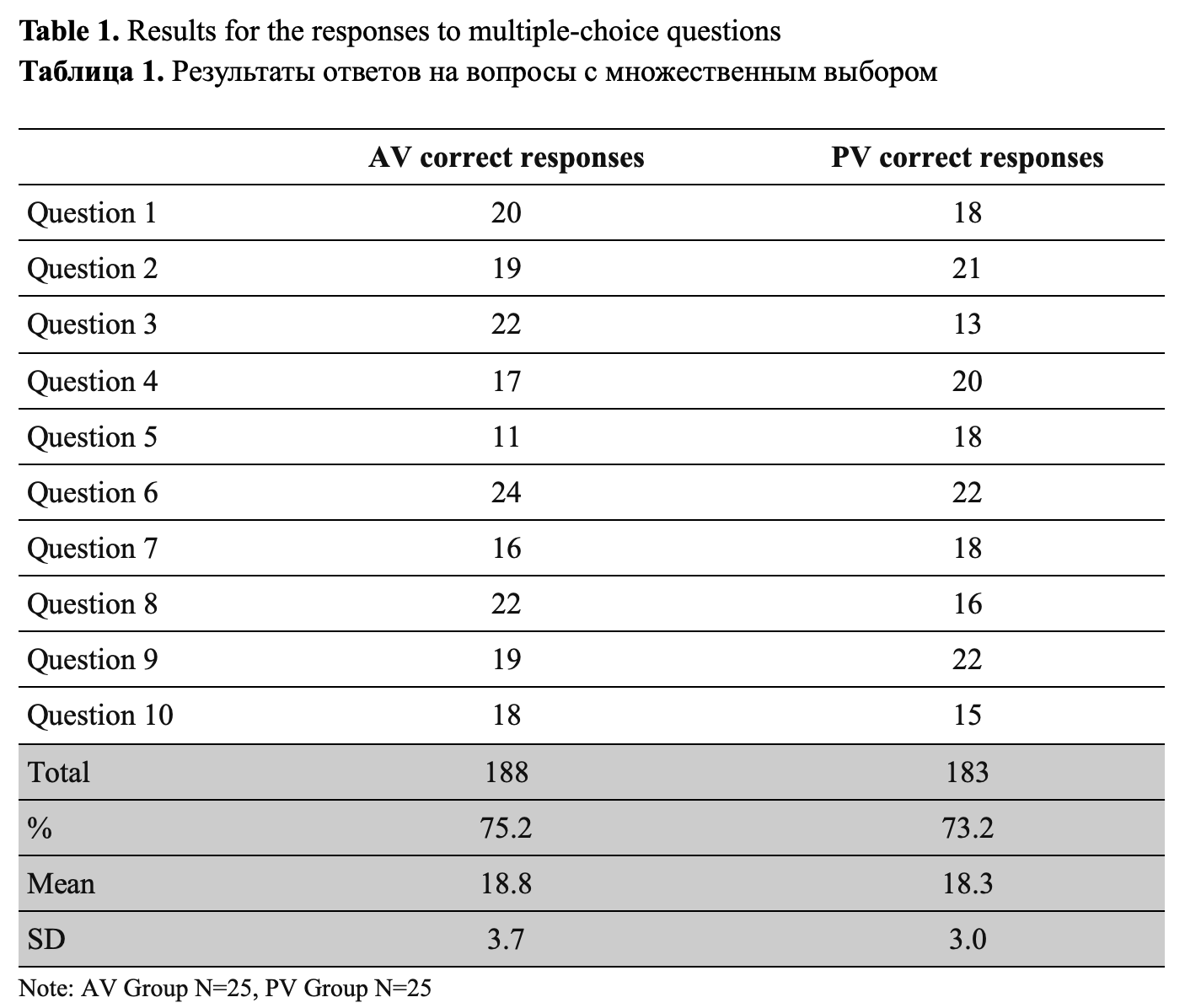

To analyze the multiple-choice comprehension questions, the percentage of correct responses was calculated for each group. The AV group answered 75.2% of the questions correctly (M = 18.8, SD = 3.7), while the PV group answered 73.2% correctly (M = 18.3, SD = 3.0). A t-test was conducted to determine whether the two groups differed significantly in the percentage of correct responses. The results revealed no significant difference (p = 0.5962), indicating that performance on the multiple-choice questions was similar in the two groups (Table 1).

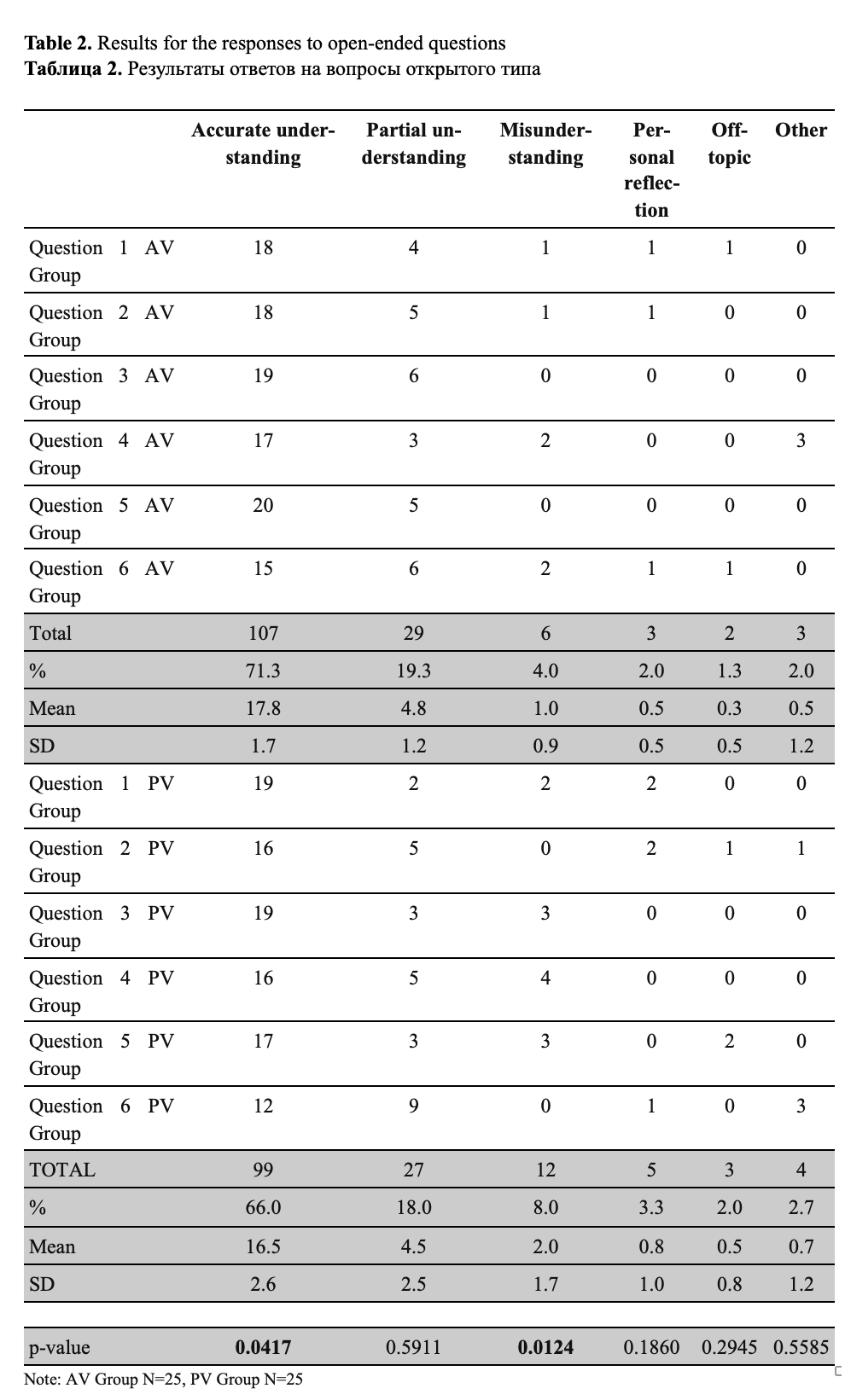

After applying the coding system to the open-ended questions, we categorized the responses for both groups and calculated the percentage, mean and standard deviation for each category. To determine whether the two groups differed significantly in any category, independent samples t-tests were conducted. The t-tests compared the AV and PV groups in each category, using their means, standard deviations and sample sizes (Table 2).

Using a significance level of 0.05, the results show statistically significant differences between the AV and PV groups in the categories of Accurate Understanding and Misunderstanding. In these categories, the p-values (0.0417 and 0.0124, respectively) are less than the significance level, suggesting that the differences between the two groups are unlikely to be due to random chance.

For the remaining categories (Partial Understanding, Personal Reflection, Off-Topic, and Other), the p-values are greater than the significance level, indicating no significant differences between the AV and PV groups in these categories.

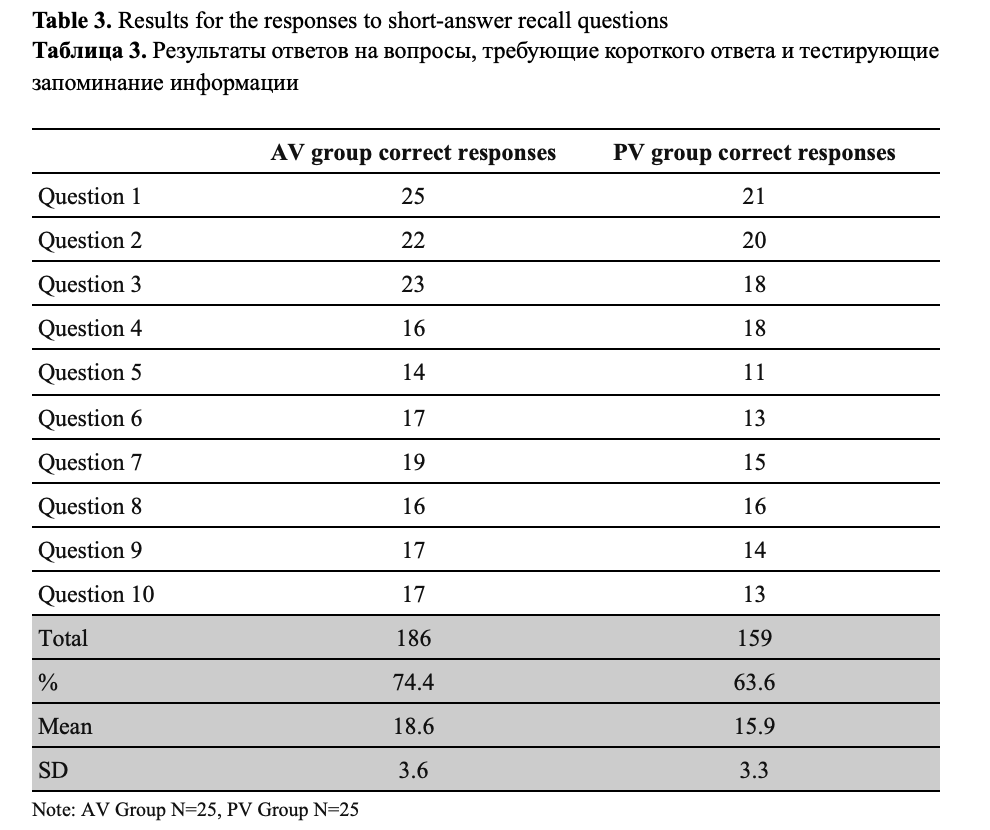

For the short-answer recall questions, the percentage of correct answers for the AV group was 74.4% (M = 18.6, SD = 3.6), while the percentage of correct answers for the PV group was 63.6% (M = 15.9, SD = 3.3).

A t-test was conducted to compare the percentage of correct answers between the two groups (significance level α = 0.05). The results showed a statistically significant difference between the AV and PV groups (p = 0.0081), suggesting that the AV group respondents were better able to recall specific details from the article (Table 3).
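As a rough check, the comparison can be recomputed from the reported summary statistics alone. The sketch below is not the authors' procedure: it assumes a pooled-variance (Student's) independent samples t-test with n = 25 per group, which the text does not state explicitly, and it obtains the two-sided p-value by integrating the t density numerically so that only the standard library is needed. All function names are hypothetical.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value via Simpson's rule on [0, |t|] (steps must be even)."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3  # probability mass between 0 and |t|
    return max(0.0, 1.0 - 2.0 * area)


def pooled_t_test(m1, s1, n1, m2, s2, n2):
    """Independent samples t-test from means, SDs and sample sizes."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (m1 - m2) / se
    return t, df, two_sided_p(t, df)


N_PER_GROUP = 25  # assumption: the test unit is the participant

# Short-answer recall: AV (M = 18.6, SD = 3.6) vs PV (M = 15.9, SD = 3.3).
recall_t, recall_df, recall_p = pooled_t_test(18.6, 3.6, N_PER_GROUP,
                                              15.9, 3.3, N_PER_GROUP)
# Multiple choice: AV (M = 18.8, SD = 3.7) vs PV (M = 18.3, SD = 3.0).
mc_t, mc_df, mc_p = pooled_t_test(18.8, 3.7, N_PER_GROUP,
                                  18.3, 3.0, N_PER_GROUP)

print(f"recall: t({recall_df}) = {recall_t:.3f}, p = {recall_p:.4f}")
print(f"multiple choice: t({mc_df}) = {mc_t:.3f}, p = {mc_p:.4f}")
```

Under these assumptions the recall comparison gives a p-value of roughly 0.008, in line with the reported value, and the multiple-choice comparison gives roughly 0.60. Small deviations from the published figures are to be expected because the means and standard deviations are rounded.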

4.3.Post-test survey data results

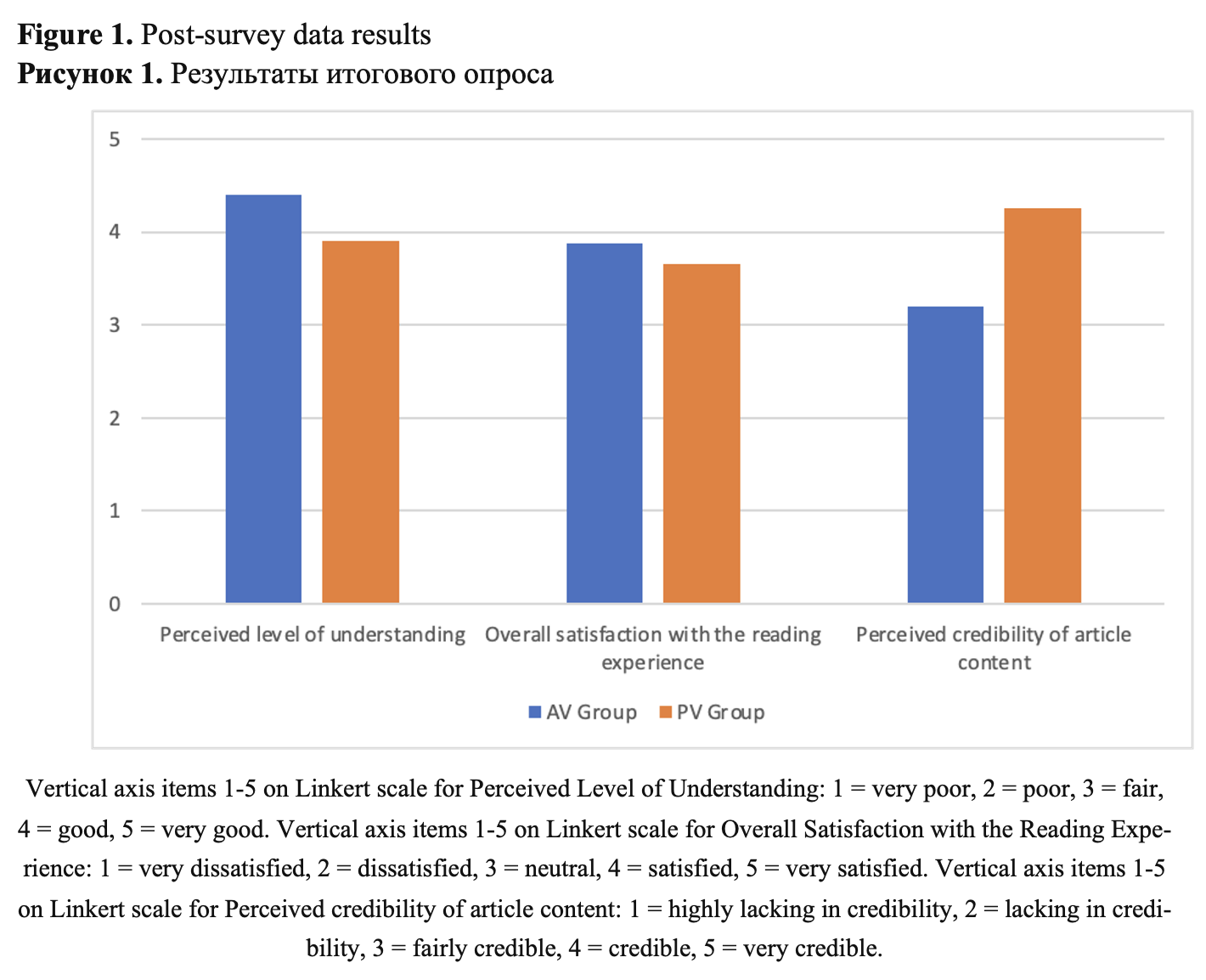

The post-test survey provided data on participants’ perceived level of understanding, overall satisfaction with the reading experience, perceived credibility of the article content, and general preference for language style. The responses to the Likert scale questions are summarized below (Figure 1).

Participants rated their level of understanding of the article on a 5-point Likert scale. The AV group had a mean score of 4.4, indicating a relatively high level of understanding. In contrast, the PV group had a mean score of 3.9, which also indicates a relatively good level of understanding, but lower than that of the AV group.

The overall satisfaction with the reading experience was rated by participants on a 5-point Likert scale. The AV group had a mean score of 3.88, suggesting a generally satisfying reading experience. The PV group had a mean score of 3.66, indicating a slightly lower, but still relatively satisfying, reading experience compared to the AV group.

Participants rated the perceived credibility of the article content on a 5-point Likert scale. The PV group had a mean score of 4.26, indicating a relatively high perceived credibility. In contrast, the AV group had a mean score of 3.2, a markedly lower level of perceived credibility than that of the PV group.

Participants’ preferences for language style were not rated group-wise, since it was a general inquiry that looked into respondents’ general preferences outside of this study. Participants predominantly preferred the active voice (42%), with 34% expressing no preference, and 24% preferring the passive voice.

5.DISCUSSION

The study aimed to investigate the influence of active voice and passive voice on the comprehension and recall of information in research articles among readers with varying language proficiency. The findings provide evidence that the choice of voice can indeed affect readers’ comprehension and retention of information.

In the multiple-choice comprehension questions, the results revealed no significant difference in the average percentage of correct responses between the AV and PV groups. This suggests that both active and passive voice structures were similarly effective in conveying the meaning of the text when assessed through multiple-choice questions. It is, however, essential to consider that the nature of multiple-choice questions may inherently limit the depth of comprehension being assessed, as these questions tend to focus more on overall understanding rather than specific details, which might explain the lack of significant differences between the groups in this aspect.

The open-ended questions provided more in-depth data on participants’ comprehension of the research articles. The AV group demonstrated slightly higher scores for accurate understanding than the PV group, and the difference was statistically significant, indicating that the active voice may facilitate better comprehension of the material. Providing additional evidence for the same conclusion, the PV group demonstrated significantly higher scores for misunderstanding. Although the difference in comprehension between the groups was modest, it still suggests that the use of active voice in research articles may lead to improved understanding of the content.

The most pronounced difference between the AV and PV groups was found in the short-answer recall questions, with the AV group scoring significantly higher than the PV group. This finding suggests that the use of active voice in research articles is associated with improved retention of specific details. The active voice may be more effective in facilitating recall due to its simpler and more direct sentence structure, which allows readers to focus on the content (in particular, specific details such as names, dates, figures, or facts) rather than on the sentence construction.

The post-test survey data revealed that the AV group reported a higher perceived level of understanding compared to the PV group. This result aligns with the comprehension and recall task findings, further supporting the notion that the active voice may facilitate better comprehension.

The post-test survey data also indicated that participants in the AV group reported slightly higher overall satisfaction with their reading experience compared to the PV group. This finding may be related to the increased understanding and recall observed in the AV group, as well as the general preference for the active voice.

Interestingly, the passive voice was associated with higher perceived credibility of the article content, despite the lower scores in comprehension, recall, and satisfaction. This observation suggests that the passive voice may still hold some perceived authority or prestige in the context of research articles, potentially due to its historical prevalence in scientific writing.

Regarding the preference for language style, the active voice was generally preferred by the pool of participants. This preference may be attributed to the clearer and more direct nature of the active voice, which is often considered more engaging and easier to understand, particularly for non-native speakers. However, it is important to note that preferences varied among participants, and some still preferred the passive voice, while others claimed they had no preference in this regard.

To sum up the key findings, the active voice was associated with higher perceived understanding and a slightly more satisfying reading experience, while the passive voice was associated with markedly higher perceived credibility of the article content. The most pronounced difference between the AV and PV groups was found in the short-answer recall questions, with the AV group scoring significantly higher than the PV group. The preference for language style showed some variability, but the active voice was generally preferred by study participants.

Based on the study results, we propose three key recommendations for the use of active and passive voice in research articles to enhance comprehension and accessibility.

1. Proposing active voice for enhanced comprehension in complex narratives. In view of the observed findings, and in harmony with previous research that associates active voice with better comprehension (e.g., Tarone et al., 1998; Sigel, 2009), using it may be recommended in instances of complex narratives within research articles. The conceptual complexity of scientific articles can often pose a formidable barrier to comprehension. The dense narratives presented in the form of data analysis, results interpretation, and the drawing of conclusions often necessitate substantial cognitive engagement from the readers.

Our study indicates that the application of active voice can ease the processing of such intricate narratives, promoting comprehension and making scientific content more accessible to readers of varying language proficiency. This observation echoes prior research that underscored the efficacy of active voice in enhancing readability and comprehension due to its inherent alignment with our cognitive processing patterns. For instance, research in cognitive narratology, the study of cognitive processes invoked by narratives, emphasizes the natural human tendency to organize experiences into a story format, typically characterized by an “agent-action-object” structure (Tucan, 2013). This structure is inherently aligned with the active voice, suggesting that its use might support intuitive absorption of information by adhering to our cognitive sequencing of events, thereby facilitating better comprehension, especially when dealing with complex narratives (Grishechko, 2023; Zuljan et al., 2021). This is also in line with the “ease of processing” principle in cognitive psychology (Sweller et al., 2019), suggesting that readers are more likely to absorb and retain content that is presented in a manner that minimizes cognitive load.

The proposed recommendation to “lighten” this load by using active voice in complex narratives deserves particular emphasis given the increasingly global nature of scientific research, in which clarity of communication is paramount. Other studies have also corroborated that non-native English speakers, who comprise a significant portion of the scientific community, find active voice easier to understand and translate (Kotz et al., 2008; Malyuga and McCarthy, 2021). This implies that the use of active voice could increase the global accessibility of complex scientific narratives.

Therefore, we strongly advocate for a deliberate application of active voice in the presentation of complex narratives and conclusions within research articles. This practice, as corroborated by study findings and supporting literature, can significantly enhance the comprehensibility and accessibility of complex scientific content without sacrificing the stylistic nuances and structural requirements of scientific writing. This approach takes into account the balance between complexity of content and readability to offer a more inclusive way of knowledge dissemination.

2. Utilizing active voice for enhanced recall of specific data-driven information. The presentation and interpretation of data-driven information is crucial in scientific writing. This is because scientific research aims to disclose facts about the natural world through observation and experiment, and these observations and experiments are often expressed as data. In order to effectively communicate these facts and interpretations, it is important to present data in a clear, concise, and accurate manner.

Building on the findings of this study and correlating with prior research emphasizing the benefits of simpler syntax in information recall (see e.g., Perham et al., 2009), targeted use of active voice can be advised in presenting specific data, numerical figures, and data-driven details within research articles. This recommendation is predicated on the observed data where participants in the AV group demonstrated a superior capacity in short-answer recall questions, thereby implying a better retention of specific, data-centric information.

This finding is consistent with the established emphasis in scientific writing on presenting text and data unambiguously. Specifically, Dunleavy (2003: 114) asserts that the active voice is instrumental in circumventing “avoidable ambiguities”, increasing the clarity of the conveyed information, and thus facilitating better recall. The clarity and directness inherent to active voice become crucial in such contexts, offering a straightforward, unambiguous narrative of the data and findings. As active voice reduces the cognitive load needed to understand the conveyed information, it can support the reader’s retrieval of these specific details, which facilitates superior recall, as evidenced in our study.

3. Implementing passive voice to enhance perceived credibility. Our study findings echo the sentiment of previous research indicating that the utilization of passive voice in scientific articles is often associated with a heightened sense of credibility. Many advocates of the impersonal form consider objectivity a crucial aspect of academia, and this necessitates the use of passive voice, third person, and other impersonal structures (White, 2000). Macmillan and Weyes (2007a) support this argument, emphasizing the importance of maintaining an impersonal tone in scientific writing.

Although some might argue that the active voice is clearer, there is a counterargument that the use of personal pronouns shifts the attention away from the action itself (Macmillan and Weyes, 2007b; Kirilenko, 2024). Moreover, scientific discourse often utilizes the passive voice more than standard English, allowing the focus of the sentence to dictate the appropriate voice (Bailey, 2025).

From a historical perspective, the passive voice has been predominant in scientific writing, as a conventional tool in the rhetoric of science. This is mostly attributed to the third person or passive voice imparting an aura of objectivity and emotional distance, minimizing the appearance of personal bias (Brown, 2006). As a result, the passive voice often enhances the perceived credibility of research articles, not least by enabling authors to distance themselves from their work, focusing on the processes and findings rather than the researchers themselves. This detachment conveys objectivity and impartiality, essential traits for establishing credibility in scientific communication.

We contend that these arguments, coupled with the results of this study, warrant a judicious use of passive voice in sections where credibility is crucial, including the statement of research aims and questions, procedural descriptions in the Materials and Methods section, and the recapitulation of findings in the Discussion and Conclusion sections. In the case of research aims and questions, this mainly has to do with traditional scientific writing conventions, particularly in the natural and social sciences. By conforming to these conventions, researchers can ensure their work is taken seriously and accepted by their peers. In the case of Materials and Methods, the general expectation that scientific procedures should be reproducible speaks to the advantage of using passive voice in this section, emphasizing the universal applicability of the methods over the particular actions of the researchers. In relation to the Discussion and Conclusion sections, the use of passive voice can contribute to a sober and reflective tone, encouraging a dispassionate interpretation of the findings. This approach attests to the nature of science as a collective, cumulative endeavor, downplaying individual contributions and ego.

We therefore propose the contextual use of passive voice in enhancing the credibility of scientific articles. While this must be balanced against the need for clear and accessible prose, the strategic use of passive voice can effectively bring to the fore the scientific rigor and credibility of the presented research.

In light of the research findings, we have outlined three principal recommendations pertaining to the use of active and passive voice in research articles to increase comprehension and accessibility. It is important to note that these recommendations should not lead to an exclusive preference for one voice over the other. Indeed, a balanced use of both voices can be valuable, with the choice between them being driven by the context and the particular needs of the intended audience. For example, using active voice to describe the overall study design and passive voice to detail specific procedures can combine the strengths of both voices.

Furthermore, recognizing the variability in language style preferences among our study participants, we advocate tailoring the use of active and passive voice based on the audience’s characteristics and needs. When the target audience is broad or includes non-native English speakers, using more active voice can improve clarity and ease of understanding. Conversely, for a specialized audience, passive voice may better convey authority and objectivity.

By incorporating these recommendations into the writing and editing of research articles, authors and editors can help make scientific content more accessible, engaging, and comprehensible for a diverse audience, including non-native English speakers. This, in turn, will help foster greater inclusivity and collaboration within the global scientific community.

In addition to the issue of grammatical voice, the study offers some initial understanding of how stylistic form could affect the reader’s degree of cognitive engagement with scientific writing. Although no specific psychometric measures or observational procedures were used to gauge cognitive engagement, the design of the comprehension tasks was purposefully aligned with accepted engagement typologies. According to the ICAP model (Chi and Wylie, 2014), tasks requiring little effort, like multiple-choice questions, are typically linked to passive or surface-level involvement, where information is absorbed but not transformed. On the other hand, because they require readers to recover, rebuild, or restate content, open-ended and recall-based tasks promote active and constructive types of involvement.

The AV group’s performance indicates that using active voice may promote deeper engagement with the text in addition to improved memory, especially in the open-ended and short-answer recall parts. This points to the mental effort readers invest in navigating sentence structure, agency, and information flow, rather than to processing ease alone. Active formulations tend to map more clearly onto mental representations, which facilitates retention, whereas passive constructions may reduce syntactic transparency or obscure the actor. This deserves particular attention in the case of non-native speakers, whose comprehension may be more sensitive to departures from the standard clause structure.

Without requiring introspective reporting, the study offers an indirect way to observe reader engagement by embedding tasks along a continuum of cognitive effort. This method provides a means of evaluating how language characteristics influence both what is understood and how that understanding is cognitively constructed. In this way, the study addresses the question of how different linguistic forms demand distinct kinds of mental work during reading, rather than looking only at comprehension results.

While the present study provides valuable observations concerning the influence of voice on comprehension and recall among readers of various levels of language proficiency, some limitations should be acknowledged. The sample size was relatively small (N = 50), and future research could benefit from recruiting larger samples. Additionally, the study only included one research article for each voice group, which may not fully capture the range of potential effects. Future research could include multiple research articles with varying topics and writing styles to assess the consistency of the observed effects. It could also explore other factors that may influence comprehension and perception of research articles, such as content familiarity or the role of visuals. Longitudinal studies might also investigate the long-term effects of exposure to active and passive voice in research articles on language development and understanding of research content among readers of different language proficiency levels. Individual cognitive abilities such as working memory, which may have affected recall results independently of grammatical voice, were not taken into account in this study. To account for individual heterogeneity, future studies would benefit from mixed-effects models and cognitive tests.

Although the observed differences between the AV and PV groups in the “Accurate understanding” (original p = 0.0417) and “Misunderstanding” (original p = 0.0124) categories initially reached conventional significance thresholds (p < 0.05), these effects did not remain statistically significant after applying the Benjamini-Hochberg False Discovery Rate (FDR) correction for multiple comparisons. The adjusted p-values were 0.1251 and 0.0744, respectively – values that, while not meeting the strict cutoff, remain relatively close to the conventional α = 0.05 threshold. Given the limited sample size and the exploratory scope of this study, these results should be interpreted with caution. However, the consistent pattern of group differences across categories suggests potentially meaningful trends that merit further investigation in a study with greater statistical power and a more targeted design.
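The adjustment can be illustrated with a minimal sketch of the Benjamini-Hochberg procedure, assuming the correction was applied across the six response categories. The two p-values for “Accurate understanding” and “Misunderstanding” are the ones reported above; the other four are hypothetical placeholders, not values from Table 2, chosen only to be larger than the two reported ones. The function name is likewise an assumption.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


categories = [
    "Accurate understanding",
    "Partial understanding",
    "Misunderstanding",
    "Personal reflection",
    "Off-topic",
    "Other",
]
# 0.0417 and 0.0124 are reported in the text; the rest are HYPOTHETICAL.
raw_p = [0.0417, 0.30, 0.0124, 0.45, 0.60, 0.80]

adjusted_p = dict(zip(categories, benjamini_hochberg(raw_p)))
for name, value in adjusted_p.items():
    print(f"{name}: {value:.4f}")
```

With six tests, the smallest p-value is multiplied by 6/1 and the second smallest by 6/2, which yields 0.0744 and 0.1251 as long as the remaining p-values are sufficiently larger.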

Despite the limitations, the results of this study have important implications for researchers, editors, and educators. Encouraging thoughtful use of active and passive voice in research articles may improve comprehension and accessibility for non-native English speakers, while maintaining credibility of research findings, thus promoting a more inclusive scientific community.

6.CONCLUSION

The study aimed to explore the impact of active and passive voice on the comprehension of research articles among readers with varying language proficiency levels. This investigation is particularly relevant in the context of increasing globalization and the growing importance of accessible scientific communication, as it seeks to explore how language style can influence the understanding and interpretation of research findings.

To address this aim, the study employed a pre-test survey, a reading comprehension task consisting of multiple-choice questions, open-ended questions, and short-answer recall questions, as well as a post-test survey. Participants were divided into two groups, one exposed to an article written in active voice (AV group) and the other exposed to the same article in passive voice (PV group). The methodology allowed for an in-depth analysis of comprehension, perceived understanding, satisfaction with the reading experience, perceived credibility of the article content, and preference for language style.

The key findings of the study can be summarized as follows.

1. The AV group demonstrated better overall comprehension, particularly in the short-answer recall questions, where they scored significantly higher than the PV group.

2. The AV group reported higher perceived understanding and a more satisfying reading experience, suggesting that active voice contributes to a clearer and more engaging presentation of research content.

3. The PV group perceived the article content as more credible, indicating that passive voice may convey a sense of authority and objectivity in certain contexts.

4. The active voice was generally preferred by participants, although a third of the respondents claimed they had no preference in this matter.

Based on these data, the study proposed three key recommendations for the use of active and passive voice in research articles for better comprehension and accessibility: (1) using active voice for enhanced comprehension in complex narratives; (2) using active voice for enhanced recall of specific data-driven information; and (3) using passive voice to enhance perceived credibility. These findings have significant implications for researchers, editors, and the broader scientific community. First, they point to the importance of striking a balance between the use of active and passive voice in research articles to optimize comprehension and accessibility for diverse readers, including non-native English speakers and researchers from various disciplinary backgrounds. Second, the study highlights the need for strategic use of language style, with active voice enhancing comprehension of data-centric information, and passive voice conveying authority and objectivity when necessary. The findings also emphasize the role of the target audience in shaping language style choices, as authors should consider tailoring their use of active and passive voice based on the intended readership.

Although evaluating the effect of voice on textual comprehension is the study’s primary goal, the results also suggest more general cognitive implications. The study addresses the question of how linguistic form influences the depth of cognitive processing by designing tasks to elicit varying degrees of reader effort, from recognition to recall and synthesis. Active voice usage seems to encourage more effortful forms of engagement while making it easier for readers to extract, remember, and reassemble information. These task-based indicators align with what learning theory defines as active and constructive engagement. This multi-layered approach, which combines cognitive function with linguistic form, paves the way for future research into how language choices in scientific writing can either enhance or limit the reader’s ability to interact meaningfully with difficult content.

This study does rely conceptually on the plain language movement. Importantly, however, it does so not in terms of general-public outreach, but as a framework for improving cognitive accessibility of scientific writing among readers with varying levels of language proficiency – particularly non-native speakers and early-career researchers.

In summary, this study has examined the complex interplay between language style and comprehension in research articles. Its findings contribute to a better understanding of how active and passive voice can influence reader engagement, understanding, and perceptions of credibility, offering actionable recommendations for authors and editors seeking to increase the clarity and impact of their scientific communication. By applying the proposed recommendations, the scientific community can make research more accessible and inclusive, which will ultimately promote the exchange of ideas and the advancement of knowledge across disciplines and borders.

Acknowledgements

This publication was prepared within the framework of Project No. 061011-0-000 of the RUDN University Scientific Projects Grant System.

References

Akopova A. S. English for Specific Purposes: tailoring English language instruction for history majors // Training, Language and Culture. 2023. Vol. 7(3). Pp. 31–40. https://dx.doi.org/10.22363/2521-442X-2023-7-3-31-40

Bailey S. Academic writing: a handbook for international students. London: Routledge, 2025. 320 p. https://doi.org/10.4324/9781003509264

Bailin A., Grafstein A. The linguistic assumptions underlying readability formulae: A critique // Language & Communication. 2001. Vol. 21(3). Pp. 285–301. https://dx.doi.org/10.1016/S0271-5309(01)00005-2

Balashov E., Pasichnyk I., Kalamazh R. Metacognitive awareness and academic self-regulation of HEI students // International Journal of Cognitive Research in Science, Engineering and Education (IJCRSEE). 2021. Vol. 9(2). Pp. 161–172. https://doi.org/10.23947/2334-8496-2021-9-2-161-172

Bonsall S. B., Leone A. J., Miller B. P., Rennekamp K. A plain English measure of financial reporting readability // Journal of Accounting and Economics. 2017. Vol. 63(2–3). Pp. 329–357. https://dx.doi.org/10.1016/j.jacceco.2017.03.002

Brown R. B. Doing your dissertation in business and management: the reality of researching and writing. London: SAGE Publications, 2006. 121 p.

Brysbaert M. How many words do we read per minute? A review and meta-analysis of reading rate // Journal of Memory and Language. 2019. Vol. 109. P. 104047. https://dx.doi.org/10.1016/j.jml.2019.104047

Cheung Y. L., Lau L. Authorial voice in academic writing // Ibérica. 2020. Vol. 39. Pp. 215–242. https://dx.doi.org/10.17398/2340-2784.39.215

Chi M. T., Wylie R. The ICAP framework: linking cognitive engagement to active learning outcomes // Educational Psychologist. 2014. Vol. 49. Pp. 219–243. http://dx.doi.org/10.1080/00461520.2014.965823

Ding D. D. The passive voice and social values in science // Journal of Technical Writing and Communication. 2002. Vol. 32(2). Pp. 137–154. https://dx.doi.org/10.2190/EFMR-BJF3-CE41-84KK

Dunleavy P. Authoring a PhD: How to plan, draft, write and finish a doctoral thesis or dissertation. London: Palgrave, 2003. 297 p.

Erdemir F. How to write a materials and methods section of a scientific article? // Turkish Journal of Urology. 2013. Vol. 39(Suppl 1). Pp. 10–15. https://dx.doi.org/10.5152/tud.2013.047

Ferreira F. In defense of the passive voice // American Psychologist. 2021. Vol. 76(1). Pp. 145–153. https://dx.doi.org/10.1037/amp0000620

Grishechko E. G. Language and cognition behind simile construction: a Python-powered corpus research // Training, Language and Culture. 2023. Vol. 7(2). Pp. 80–92. https://dx.doi.org/10.22363/2521-442X-2023-7-2-80-92

Hudson R. The struggle with voice in scientific writing // Journal of Chemical Education. 2013. Vol. 90(12). P. 1580. https://dx.doi.org/10.1021/ed400243b

Inzunza E. R. Reconsidering the use of the passive voice in scientific writing // The American Biology Teacher. 2020. Vol. 82(8). Pp. 563–565. https://dx.doi.org/10.1525/abt.2020.82.8.563

Kaphingst K. A., Kreuter M. W., Casey C., Leme L., Thompson T., Cheng M. R., Jacobsen H., Sterling R., Oguntimein J., Filler C., Culbert A., Rooney M., Lapka C. Health Literacy INDEX: development, reliability, and validity of a new tool for evaluating the health literacy demands of health information materials // Journal of Health Communication. 2012. Vol. 17(sup3). Pp. 203–221. https://dx.doi.org/10.1080/10810730.2012.712612

Kerwer M., Chasiotis A., Stricker J., Günther A., Rosman T. Straight from the scientist’s mouth: plain language summaries promote laypeople’s comprehension and knowledge acquisition when reading about individual research findings in psychology // Collabra: Psychology. 2021. Vol. 7(1). P. 18898. https://dx.doi.org/10.1525/collabra.18898

Kotz S. A., Holcomb P. J., Osterhout L. ERPs reveal comparable syntactic sentence processing in native and non-native readers of English // Acta Psychologica. 2008. Vol. 128(3). Pp. 514–527. https://dx.doi.org/10.1016/j.actpsy.2007.10.003

Leong A. P. The passive voice in scientific writing through the ages: a diachronic study // Text & Talk. 2020. Vol. 40(4). Pp. 467–489. https://dx.doi.org/10.1515/text-2020-2066

Leskelä L., Mustajoki A., Piehl A. Easy and plain languages as special cases of linguistic tailoring and standard language varieties // Nordic Journal of Linguistics. 2022. Vol. 45(2). Pp. 194–213. https://dx.doi.org/10.1017/S0332586522000142

Macmillan K., Weyes J. How to write essays and assignments. London: Pearson Education, 2007a. 246 p.

Macmillan K., Weyes J. How to write dissertations and project reports. London: Pearson Education/Prentice Hall, 2007b. 286 p.

Malyuga E. N., McCarthy M. “No” and “net” as response tokens in English and Russian business discourse: in search of a functional equivalence // Russian Journal of Linguistics. 2021. Vol. 25(2). Pp. 391–416. https://dx.doi.org/10.22363/2687-0088-2021-25-2-391-416

Minton T. D. In defense of the passive voice in medical writing // The Keio Journal of Medicine. 2015. Vol. 64(1). Pp. 1–10. https://dx.doi.org/10.2302/kjm.2014-0009-RE

Perham N., Marsh J. E., Jones D. M. Short article: syntax and serial recall: How language supports short-term memory for order // Quarterly Journal of Experimental Psychology. 2009. Vol. 62(7). Pp. 1285–1293. https://dx.doi.org/10.1080/17470210802635599

Plavén-Sigray P., Matheson G. J., Schiffler B. C., Thompson W. H. The readability of scientific texts is decreasing over time // eLife. 2017. Vol. 6. P. e27725. https://dx.doi.org/10.1101/119370

Schriver K. A. On developing plain language principles and guidelines / Ed. Hallik K., Harrison Whiteside K. // Clear communication: a brief overview. Tallinn: Institute of the Estonian Language, 2014. Pp. 55–69.

Sigel T. How passive voice weakens your scholarly argument // Journal of Management Development. 2009. Vol. 28(5). Pp. 478–480. https://dx.doi.org/10.1108/02621710910955994

Solomon E. D., Mozersky J., Wroblewski M. P., Baldwin K., Parsons M. V., Goodman M., DuBois J. M. Understanding the use of optimal formatting and plain language when presenting key information in clinical trials // Journal of Empirical Research on Human Research Ethics. 2022. Vol. 17(1–2). Pp. 177–192. https://dx.doi.org/10.1177/15562646211037546

Staples S., Egbert J., Biber D., Gray B. Academic writing development at the university level: phrasal and clausal complexity across level of study, discipline, and genre // Written Communication. 2016. Vol. 33(2). Pp. 149–183. https://dx.doi.org/10.1177/0741088316631527

Stoll M., Kerwer M., Lieb K., Chasiotis A. Plain language summaries: a systematic review of theory, guidelines and empirical research // PLOS ONE. 2022. Vol. 17(6). P. e0268789. https://dx.doi.org/10.1371/journal.pone.0268789

Sweller J., van Merriënboer J. J., Paas F. Cognitive architecture and instructional design: 20 years later // Educational Psychology Review. 2019. Vol. 31. Pp. 261–292. https://dx.doi.org/10.1023/a:1022193728205

Tamimy M., Setayesh Zarei L., Khaghaninejad M. S. Collectivism and individualism in US culture: an analysis of attitudes to group work // Training, Language and Culture. 2022. Vol. 6(2). Pp. 20–34. https://doi.org/10.22363/2521-442X-2022-6-2-20-34

Tarone E., Dwyer S., Gillette S., Icke V. On the use of the passive and active voice in astrophysics journal papers: with extensions to other languages and other fields // English for Specific Purposes. 1998. Vol. 17(1). Pp. 113–132. https://dx.doi.org/10.1016/S0889-4906(97)00032-X

Temnikova I., Vieweg S., Castillo C. The case for readability of crisis communications in social media // Proceedings of the 24th International Conference on World Wide Web. Florence, Italy: Association for Computing Machinery, 2015. Pp. 1245–1250. https://dx.doi.org/10.1145/2740908.2741718

Tucan G. The reader’s mind beyond the text: the science of cognitive narratology // Romanian Journal of English Studies. 2013. Vol. 10(1). Pp. 302–311. https://dx.doi.org/10.2478/rjes-2013-0029

Turfler S. Language ideology and the plain-language movement: how straight-talkers sell linguistic myths // Legal Communication & Rhetoric. 2015. Vol. 12. Pp. 195–218.

Wanner A. Deconstructing the English passive. Berlin: Mouton de Gruyter, 2009. 230 p.

White B. Dissertation skills for business and management students. London: Thomson Learning, 2000. 168 p.

Yousef H., Karimi L., Janfeshan K. The relationship between cultural background and reading comprehension // Theory & Practice in Language Studies. 2014. Vol. 4(4). Pp. 707–714. https://dx.doi.org/10.4304/tpls.4.4.707-714

Zarcadoolas C. The simplicity complex: exploring simplified health messages in a complex world // Health Promotion International. 2011. Vol. 26(3). Pp. 338–350. https://dx.doi.org/10.1093/heapro/daq075

Zuljan D., Valenčič Zuljan M., Pejić Papak P. Cognitive constructivist way of teaching scientific and technical contents // International Journal of Cognitive Research in Science, Engineering and Education (IJCRSEE). 2021. Vol. 9(1). Pp. 23–36. https://doi.org/10.23947/2334-8496-2021-9-1-23-36