A deep learning method based on language models for processing natural language Russian commands in human robot interaction

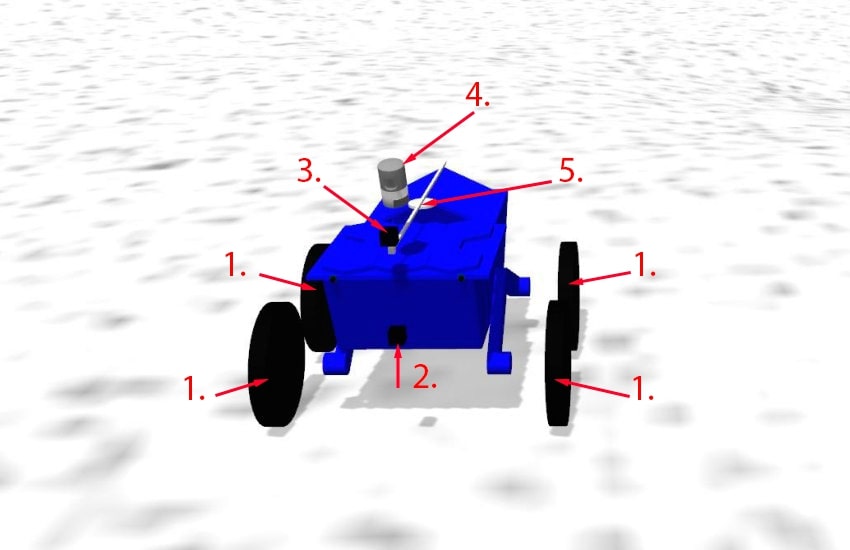

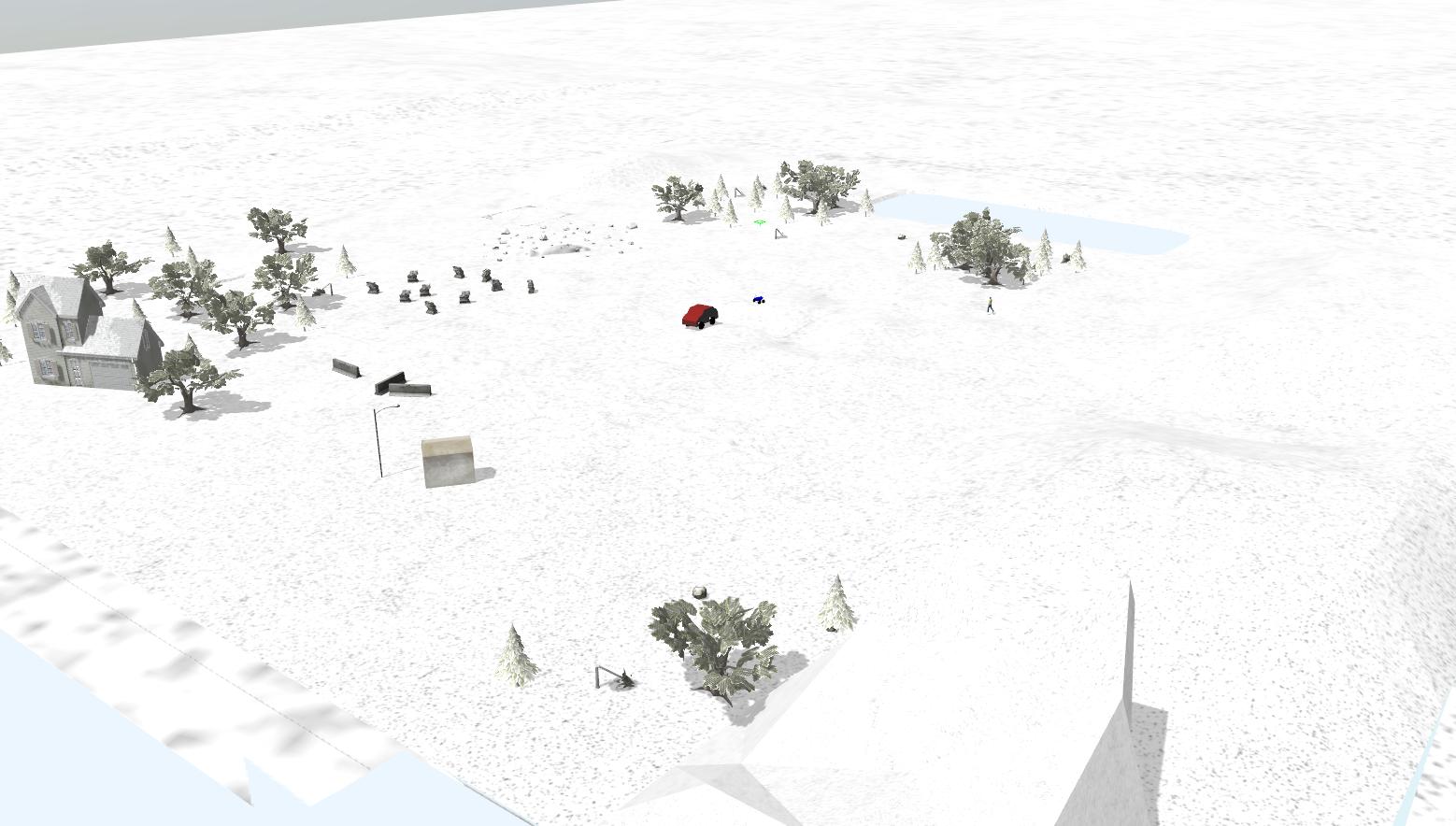

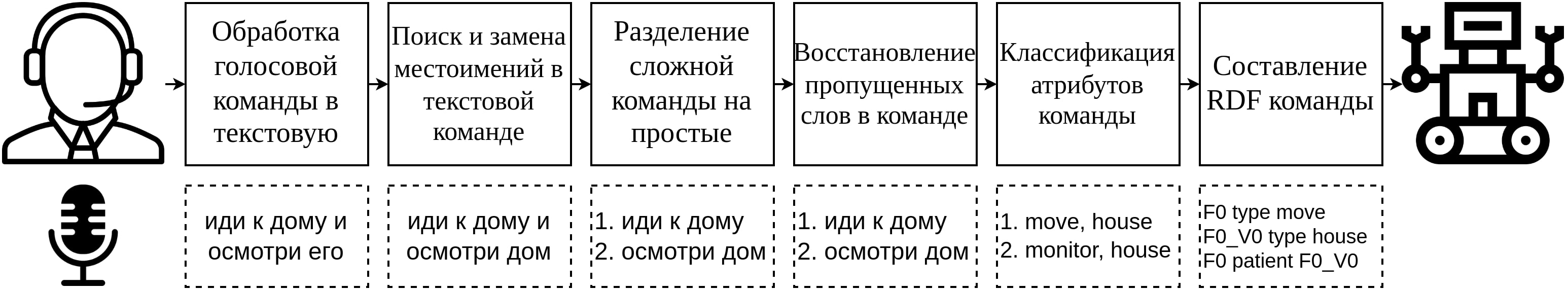

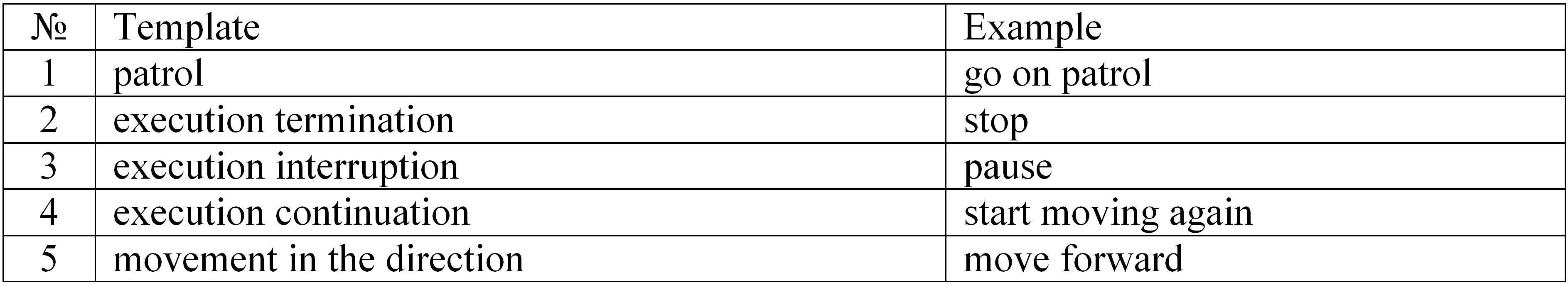

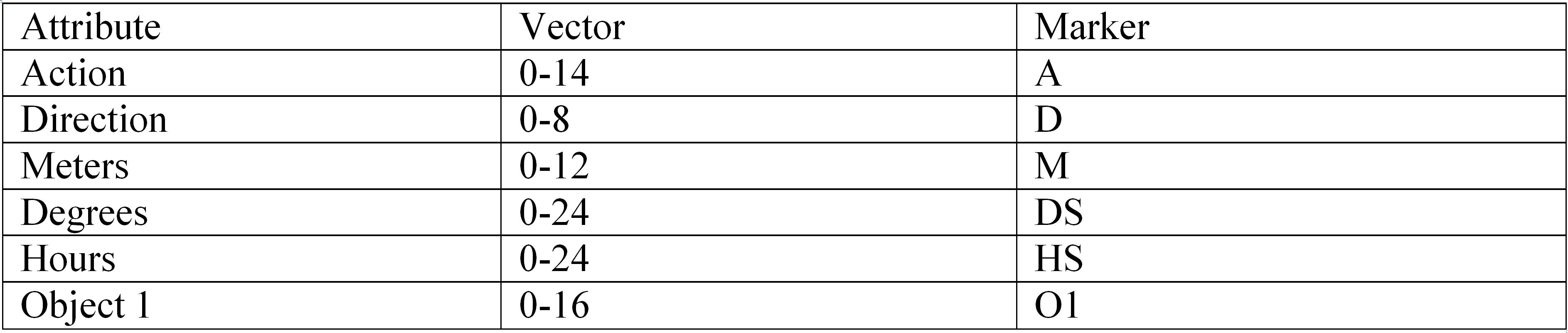

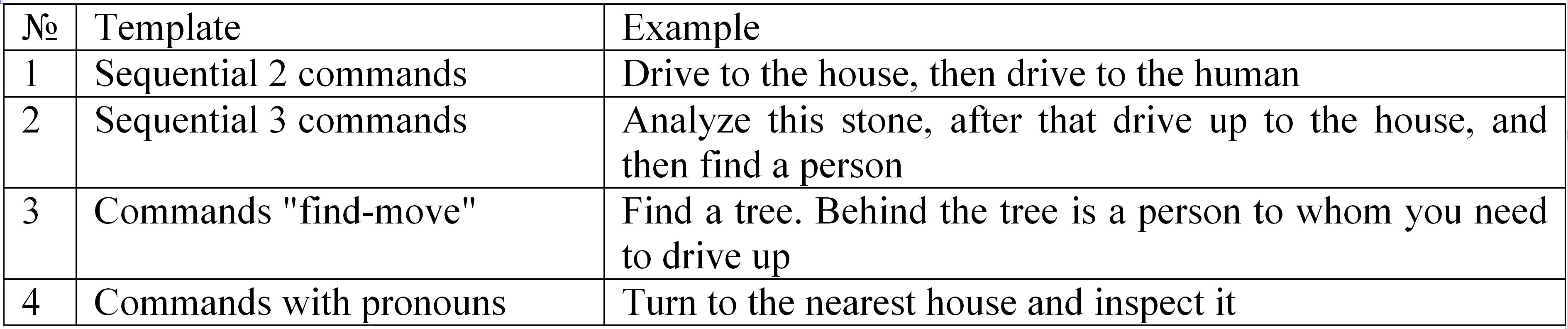

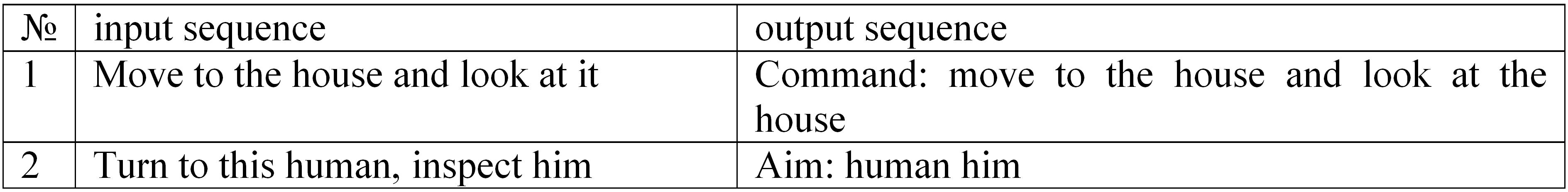

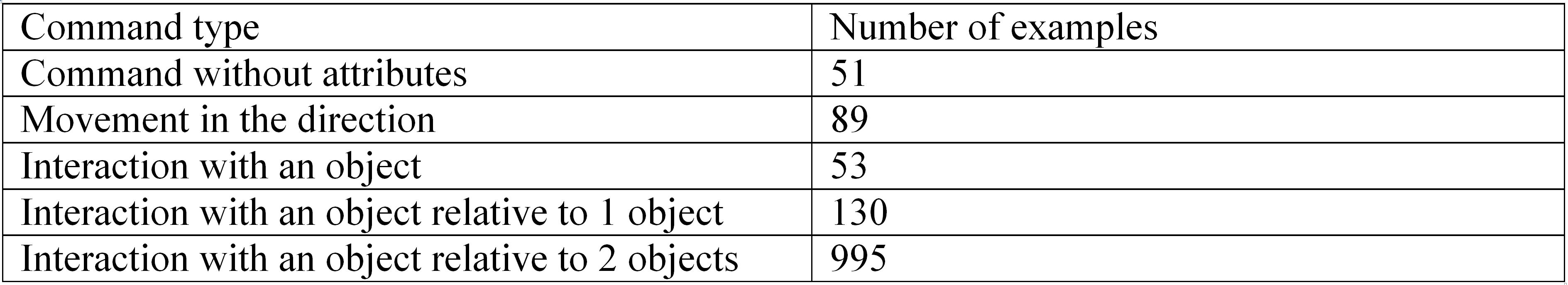

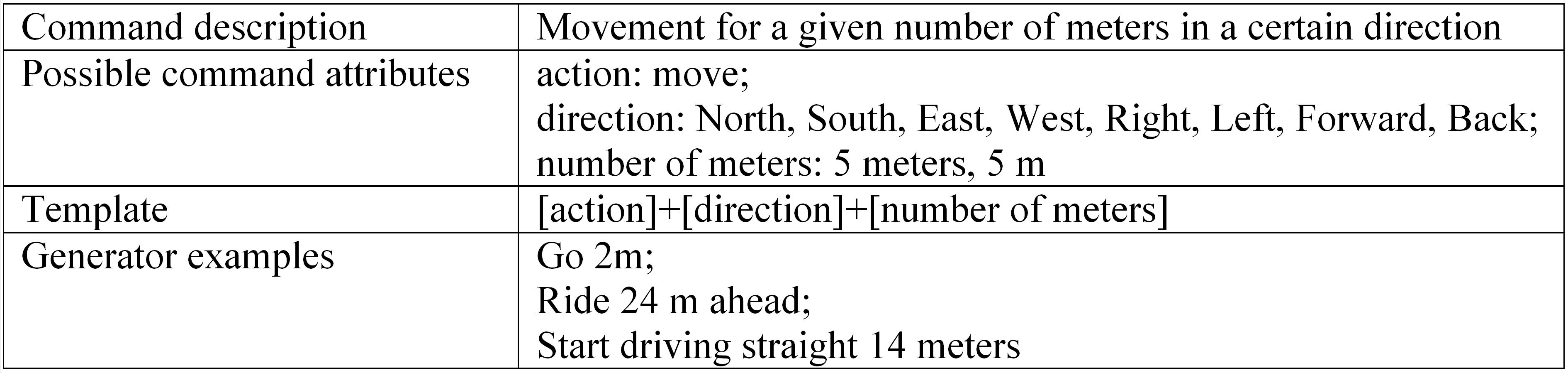

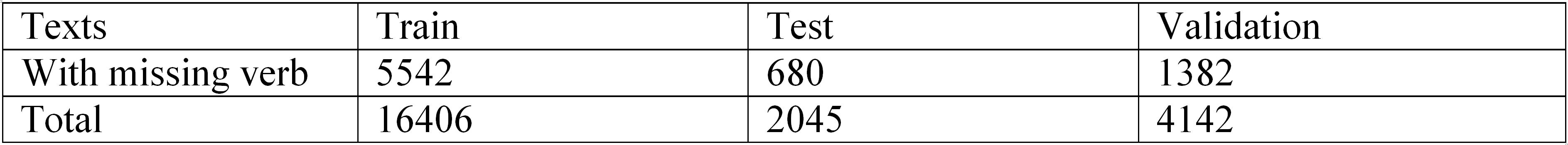

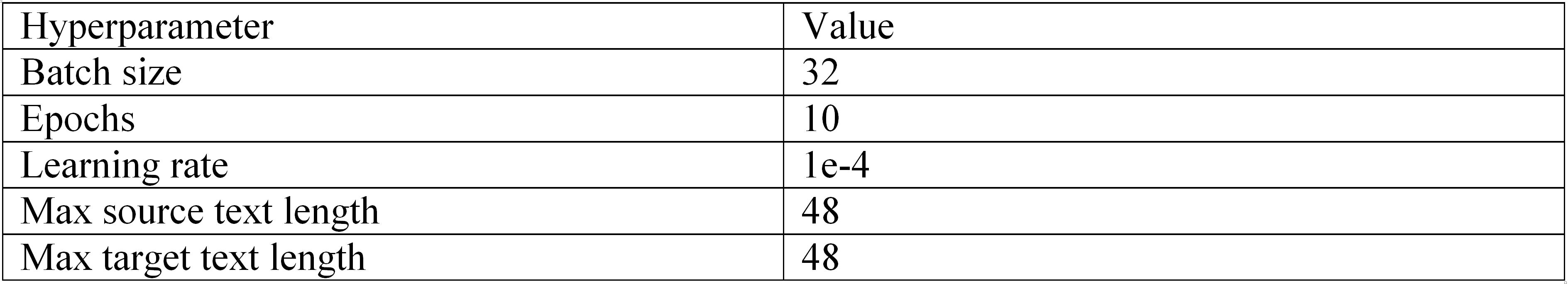

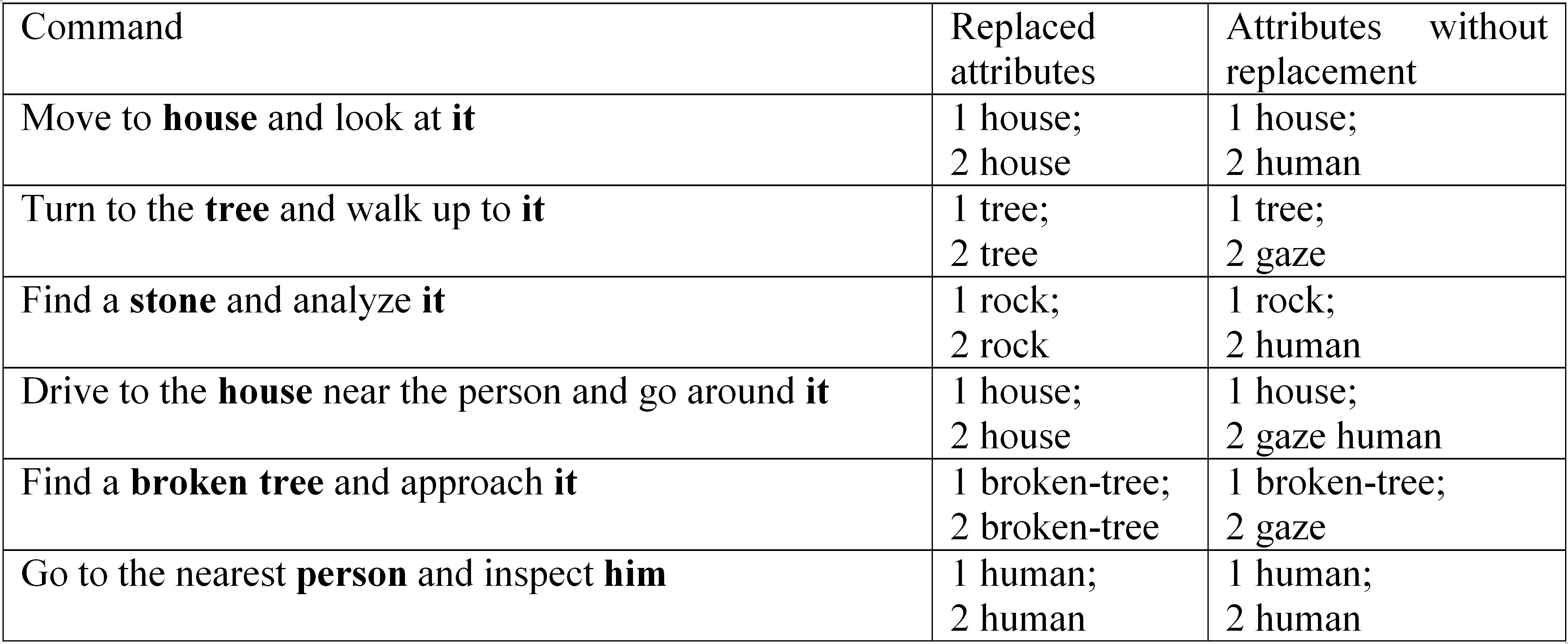

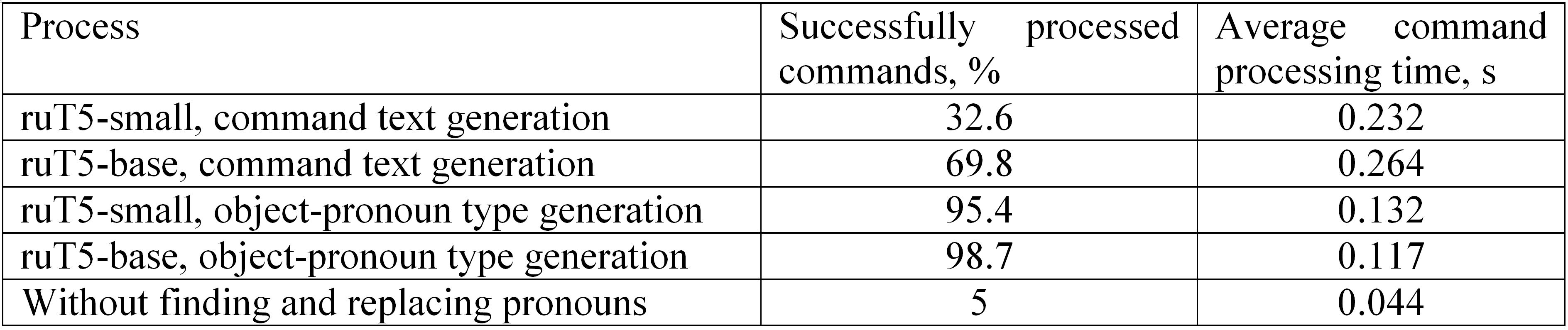

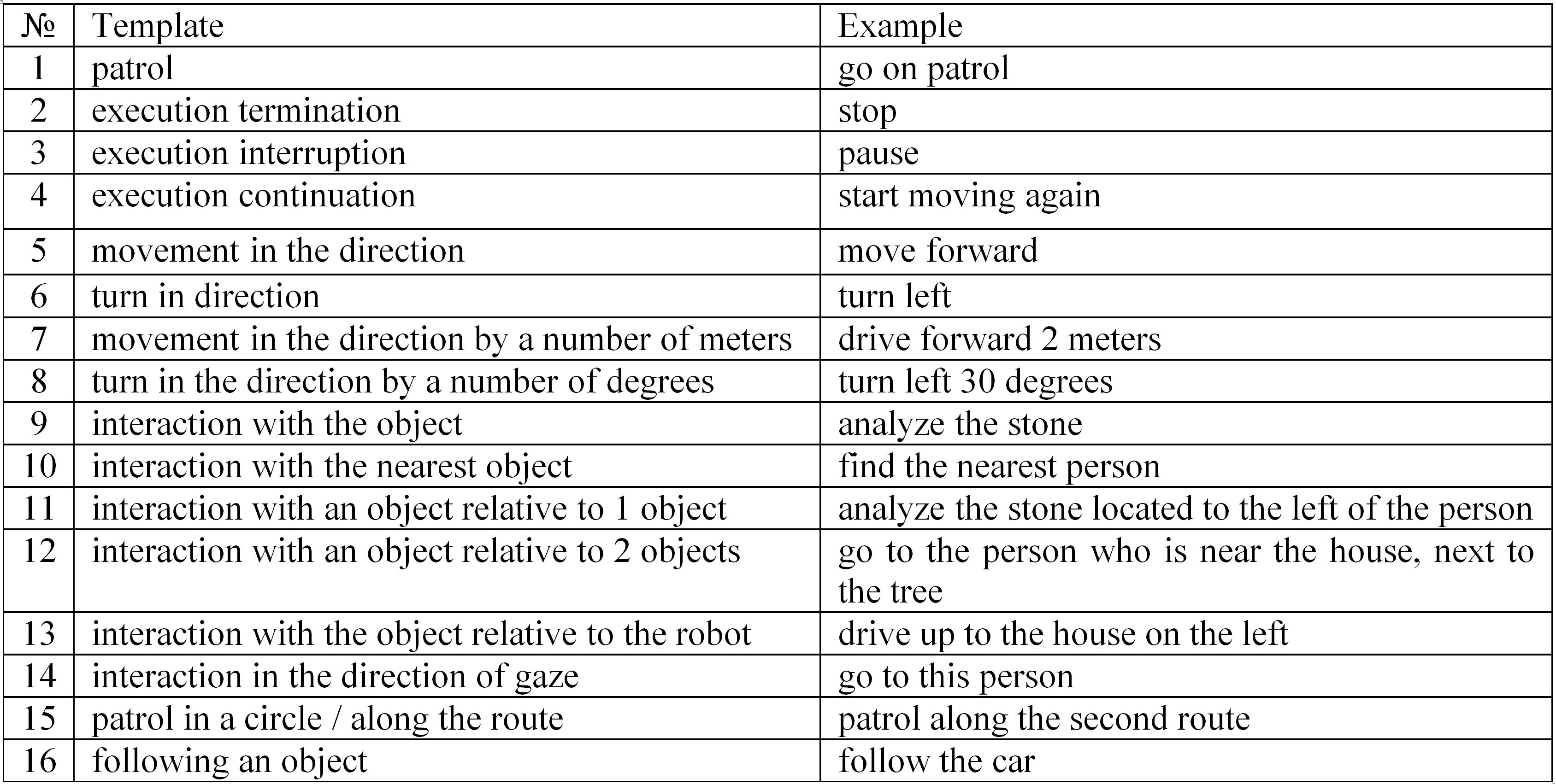

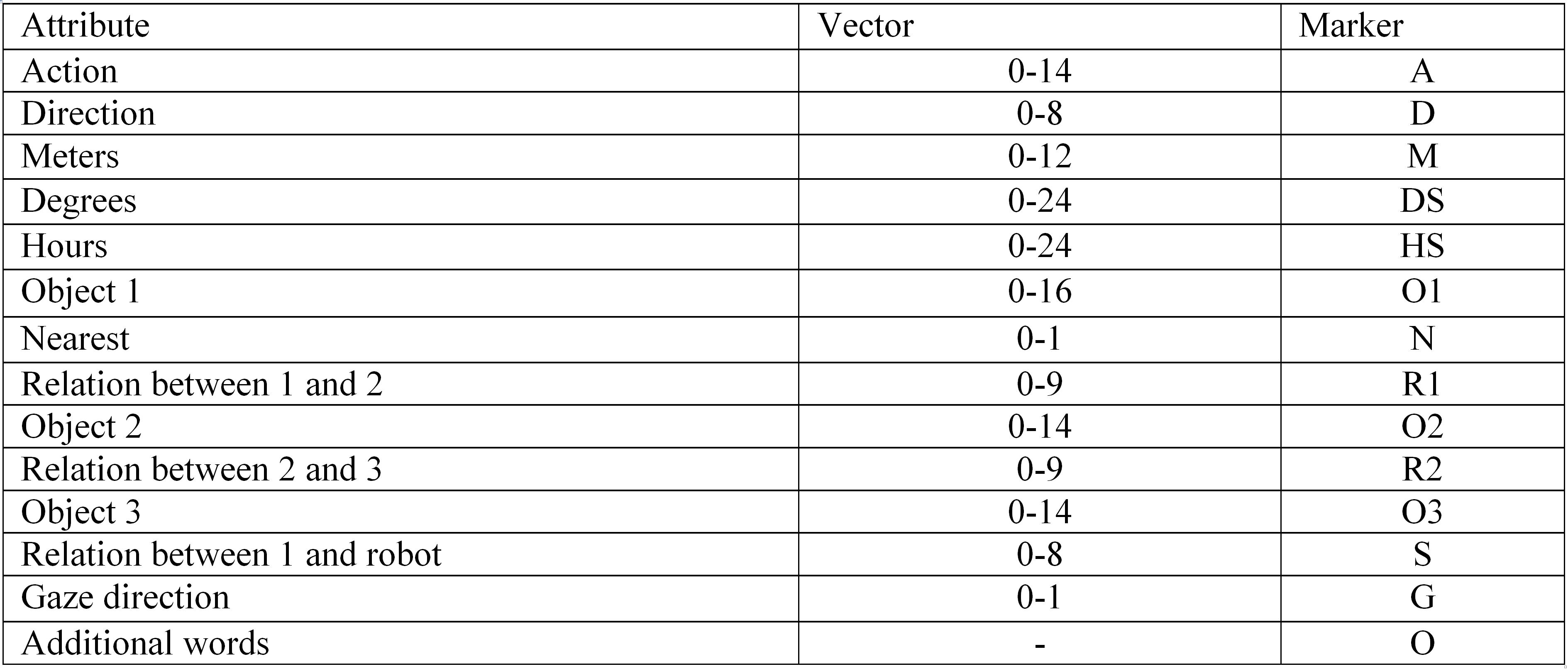

Developing high-performance human-machine interfaces for controlling robotic platforms in natural language is an important task in the interdisciplinary field of human-robot interaction. Such interfaces are especially needed when the operator lacks the skills required to use specialized control tools. This paper describes a method for converting complex Russian-language commands into a formalized RDF graph representation used to control a robotic platform. Neural network models are applied in sequence to find and replace pronouns in a command, restore omitted action verbs, decompose a complex command containing several actions into simple commands with one action each, and classify the attributes of each simple command. The models are state-of-the-art language models based on the deep transformer architecture. Our previous papers presented the datasets for each of these processing stages: synthetic data produced by a purpose-built generator of Russian-language text commands, data collected through crowdsourcing, and data from open sources. These datasets were used to fine-tune the language models. In this work, the fine-tuned models are integrated into the interface, and the effect of the pronoun search-and-replace stage on the efficiency of command conversion is evaluated. Experiments with the virtual three-dimensional robotic platform simulator created at the National Research Center «Kurchatov Institute» demonstrate the high efficiency of complex Russian-language command processing as part of a human-machine interface system.
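The order of the four stages can be illustrated with a minimal Python sketch. It is not the authors' implementation: each fine-tuned transformer model is replaced by a toy rule, English strings stand in for Russian commands, and the vocabularies, function names, and the `command_N` / `action` / `object` triple schema are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object), RDF-like

# Hypothetical toy vocabularies standing in for what the fine-tuned models learn.
KNOWN_ACTIONS = {"go", "find", "circle"}
KNOWN_OBJECTS = {"tree", "house", "rock"}


def replace_pronouns(command: str) -> str:
    """Stage 1 stand-in: replace 'it' with the most recently mentioned object."""
    last_object = None
    words = []
    for word in command.split():
        if word in KNOWN_OBJECTS:
            last_object = word
        if word == "it" and last_object is not None:
            words.append(f"the {last_object}")
        else:
            words.append(word)
    return " ".join(words)


def restore_verbs(command: str) -> str:
    """Stage 2 stand-in: copy the previous action into clauses that lack one."""
    last_action = None
    clauses = []
    for clause in command.split(" and "):
        actions = [w for w in clause.split() if w in KNOWN_ACTIONS]
        if actions:
            last_action = actions[0]
        elif last_action is not None:
            clause = f"{last_action} {clause}"
        clauses.append(clause)
    return " and ".join(clauses)


def decompose(command: str) -> List[str]:
    """Stage 3 stand-in: split a complex command into single-action commands."""
    return [clause.strip() for clause in command.split(" and ") if clause.strip()]


def classify_attributes(simple_command: str) -> Dict[str, Optional[str]]:
    """Stage 4 stand-in: pick the action and target object of a simple command."""
    words = simple_command.split()
    action = next((w for w in words if w in KNOWN_ACTIONS), None)
    target = next((w for w in words if w in KNOWN_OBJECTS), None)
    return {"action": action, "object": target}


def to_triples(command: str) -> List[Triple]:
    """Run the four stages in order and emit RDF-like triples."""
    command = restore_verbs(replace_pronouns(command.lower()))
    triples: List[Triple] = []
    for index, simple in enumerate(decompose(command), start=1):
        node = f"command_{index}"  # hypothetical node naming
        for attribute, value in classify_attributes(simple).items():
            if value is not None:
                triples.append((node, attribute, value))
    return triples


if __name__ == "__main__":
    for triple in to_triples("Go to the tree and to the house and circle it"):
        print(triple)
```

In this toy run the pronoun "it" is resolved to "house", the verb "go" is restored in the second clause, and the command is split into three single-action commands before the triples are built. In the described system each of these rules would be a separate fine-tuned language model.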


Sboev, A. G., Gryaznov, A. V., Rybka, R. B., Skorokhodov, M. S. and Moloshnikov, I. A. (2023). A deep learning method based on language models for processing natural language Russian commands in human robot interaction, Research Result. Theoretical and Applied Linguistics, 9 (1), 174-191. DOI: 10.18413/2313-8912-2023-9-1-1-1

