Using neural network technologies in determining the emotional state of a person in oral communication

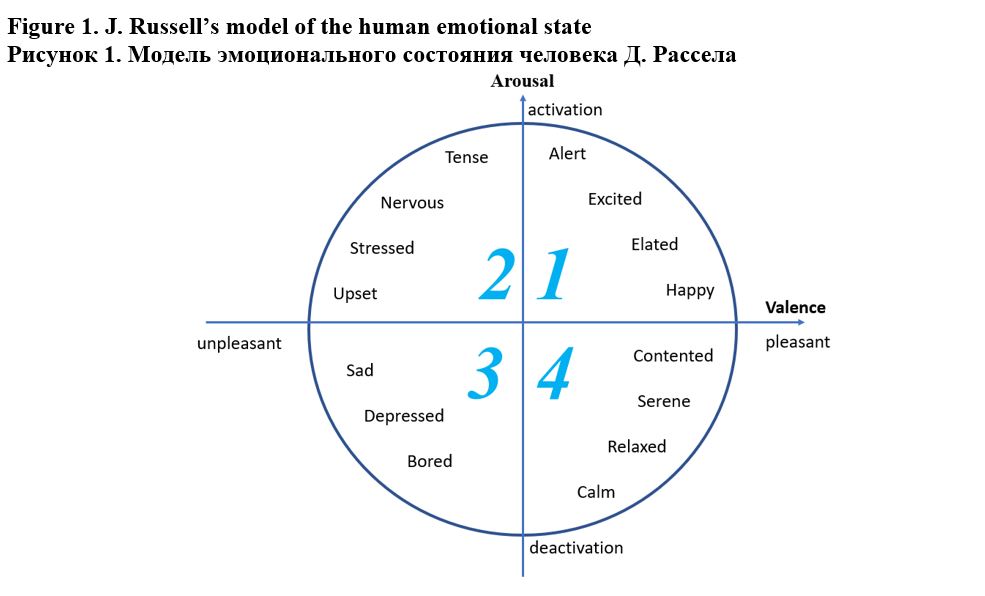

Human speech often carries emotional coloring, because emotions and mood affect the physiology of the vocal tract and, as a result, speech itself. When a person is happy, worried, sad or angry, this is reflected in the characteristics of the voice, the pace of speech and its intonation. Assessing a person’s emotional state from speech can therefore benefit many areas of life, including medicine, psychology, criminology, marketing and education. In medicine, speech-based emotion assessment can help in the diagnosis and treatment of mental disorders, in monitoring the emotional state of the patient, in identifying diseases such as Alzheimer’s in their early stages, and in diagnosing autism. In psychology, the method can be useful for studying emotional reactions to various stimuli and situations. In criminology, speech analysis and emotion detection can be used to detect false statements and deception. In marketing and advertising, it can help in understanding consumer reactions to a product or advertising campaign. In education, it can be used to analyze the emotional state of students and optimize the educational process.

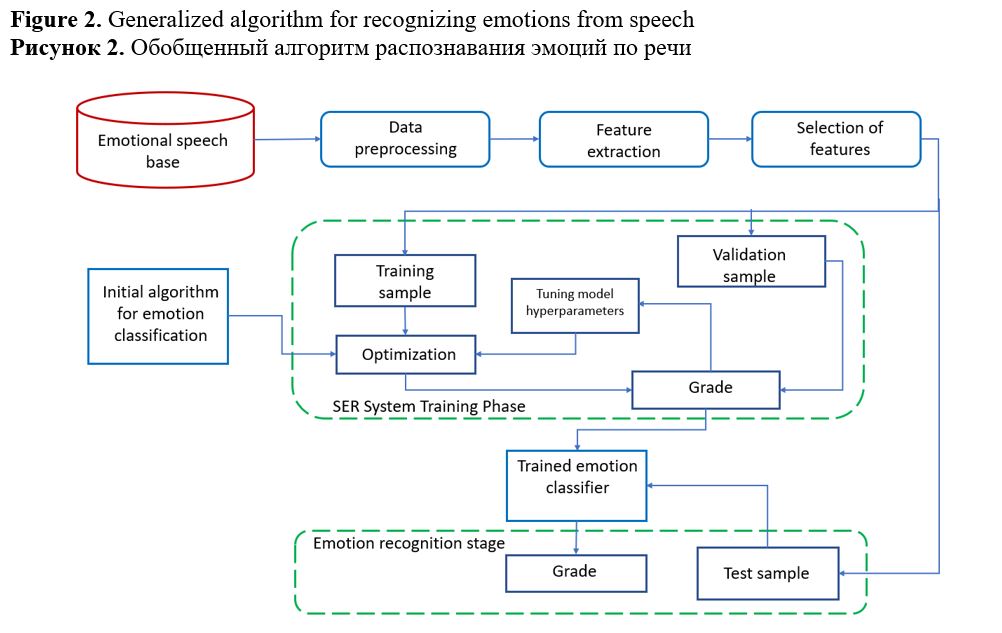

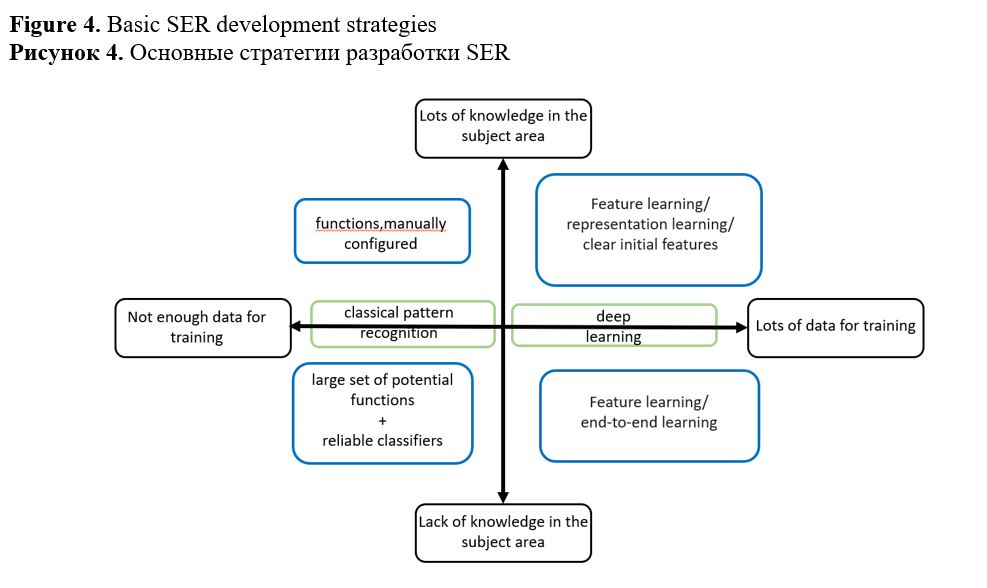

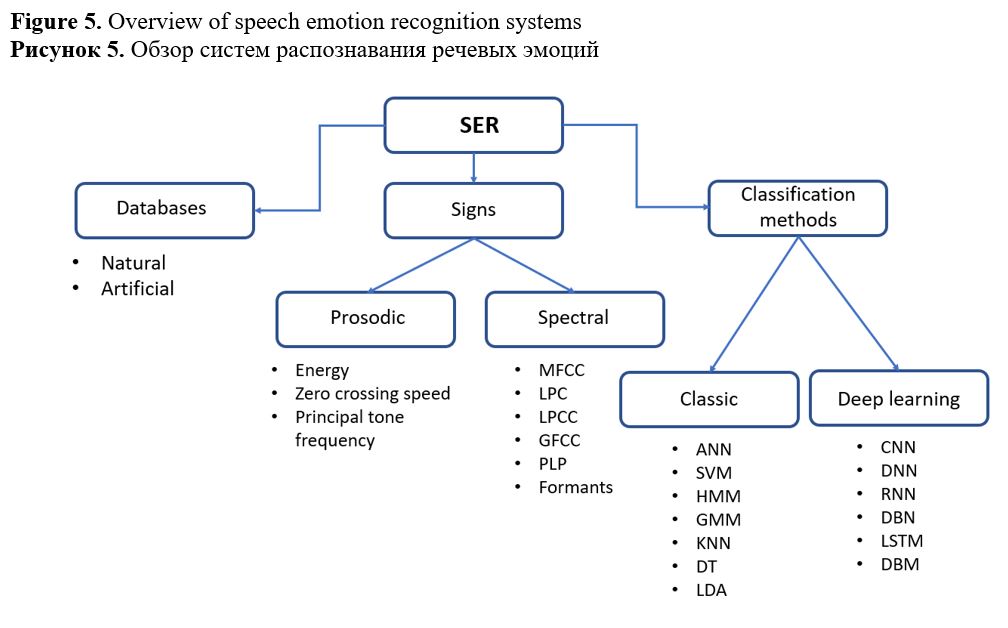

Thus, automating emotion recognition is a promising area of research, and machine learning methods and image recognition algorithms can make the process more accurate and efficient.

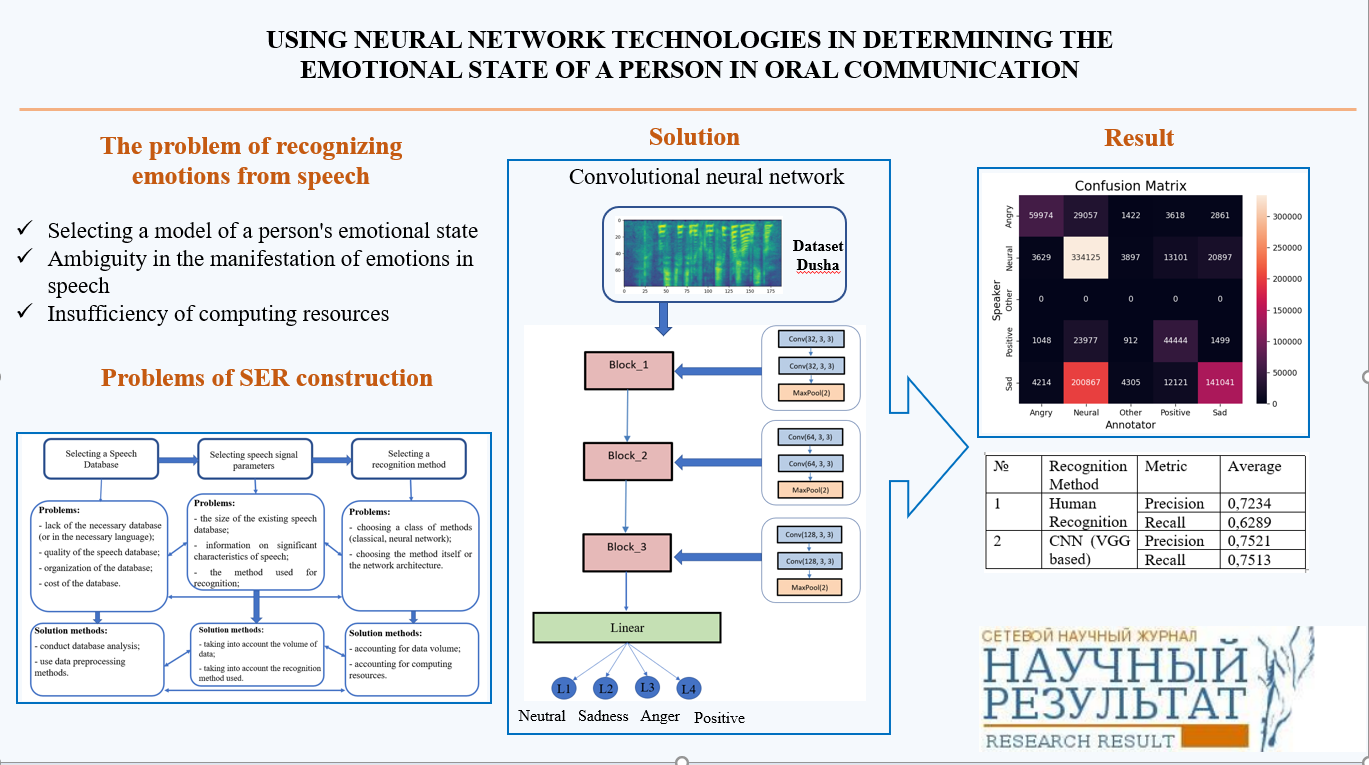

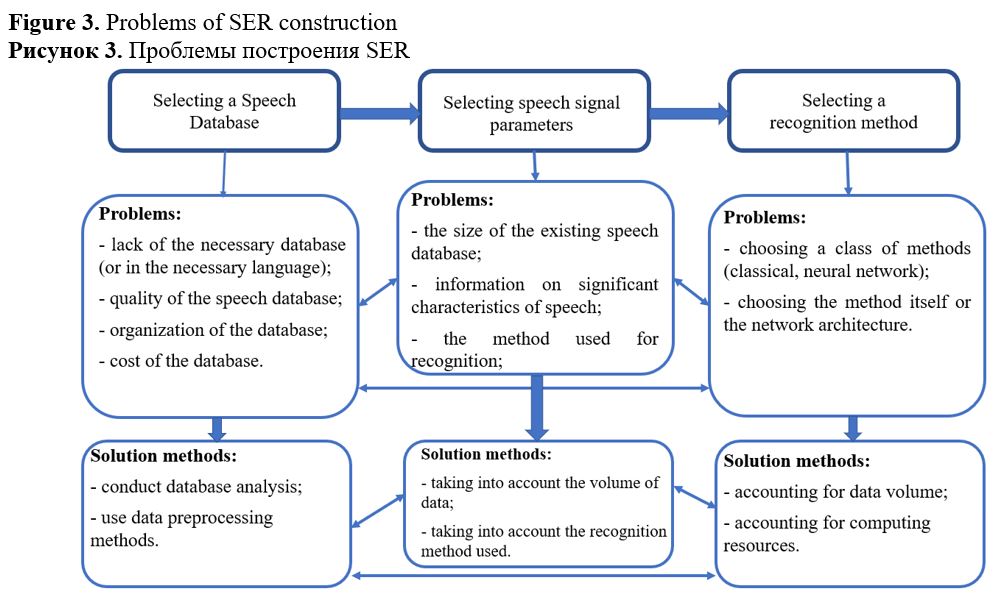

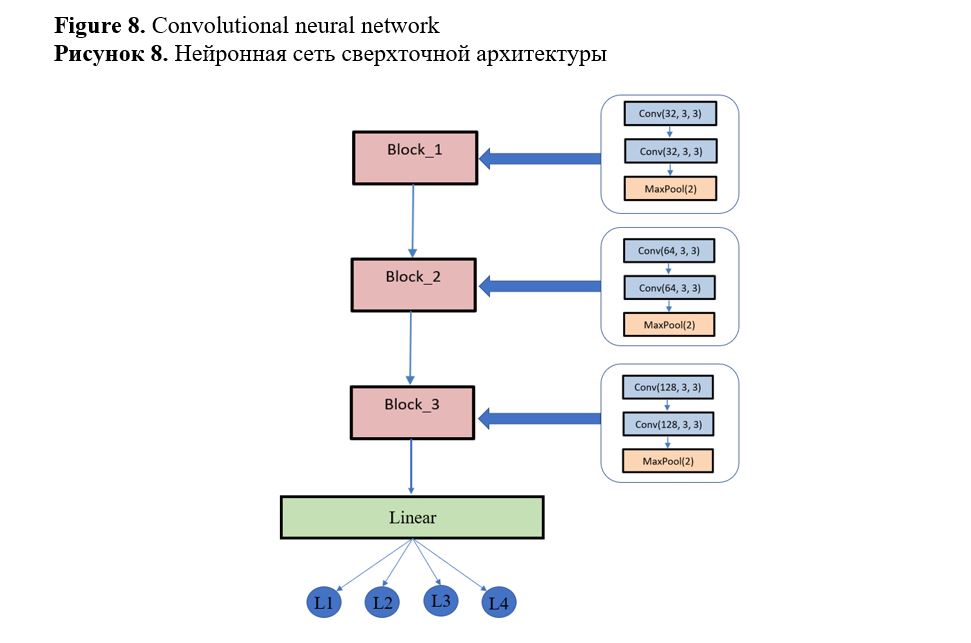

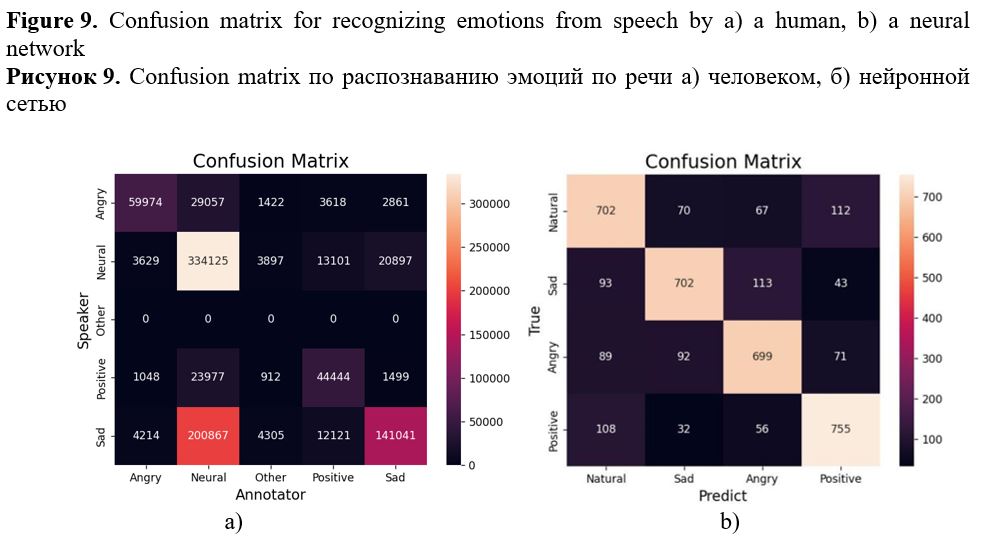

To identify paralinguistic expressions of emotion in human speech, a neural network approach is proposed. Such methods have proved effective on complex problems for which an exact solution is elusive. The work presents a convolutional neural network that recognizes four emotional states (sadness, joy, anger, neutral) from spoken speech. Particular attention is paid to forming the dataset for training and testing the model, since at present there are almost no open speech databases for studying paralinguistic phenomena, especially in Russian. This study uses the Dusha emotional speech database.
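The abstract does not describe the layers of the network, so the following is only a minimal sketch of what a forward pass through a convolutional classifier with four output classes can look like. The layer sizes, the random (untrained) weights, the input shape and the names `conv2d_valid`, `forward` and `params` are all assumptions made for illustration; they are not the architecture used in the study.

```python
import numpy as np

EMOTIONS = ["sadness", "joy", "anger", "neutral"]  # the four classes named in the text


def conv2d_valid(x, kernels):
    """Valid 2-D cross-correlation of one (H, W) input with (K, kh, kw) kernels."""
    _, kh, kw = kernels.shape
    # windows has shape (H - kh + 1, W - kw + 1, kh, kw)
    windows = np.lib.stride_tricks.sliding_window_view(x, (kh, kw))
    return np.einsum("hwij,kij->khw", windows, kernels)


def softmax(z):
    z = z - z.max()  # subtract the maximum for numerical stability
    e = np.exp(z)
    return e / e.sum()


def forward(spec, params):
    """Conv + ReLU, global average pooling, then a dense layer with softmax."""
    feat = np.maximum(conv2d_valid(spec, params["kernels"]), 0.0)
    pooled = feat.mean(axis=(1, 2))  # one value per kernel
    logits = params["W"] @ pooled + params["b"]
    return softmax(logits)


# Hypothetical, untrained parameters: 8 kernels of size 3x3, 4 output classes.
rng = np.random.default_rng(0)
params = {
    "kernels": rng.normal(scale=0.1, size=(8, 3, 3)),
    "W": rng.normal(scale=0.1, size=(4, 8)),
    "b": np.zeros(4),
}

spec = rng.normal(size=(64, 100))  # random stand-in for a spectrogram-like input
probs = forward(spec, params)
predicted = EMOTIONS[int(np.argmax(probs))]
```

With untrained weights the predicted label is meaningless; the sketch only shows how a two-dimensional input is mapped to a probability for each of the four emotions.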

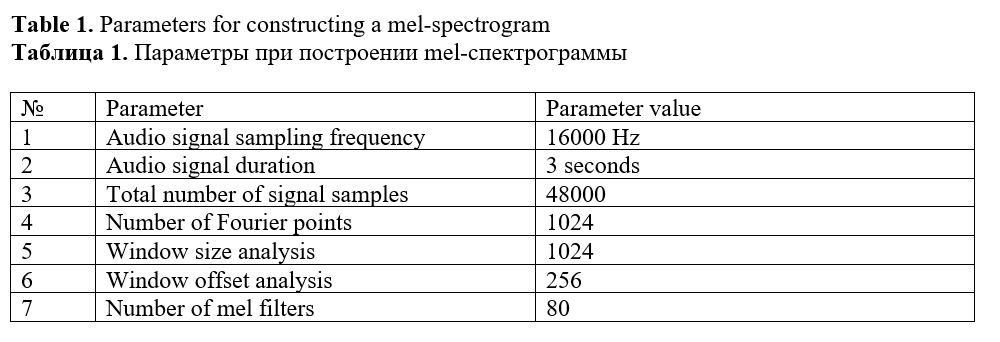

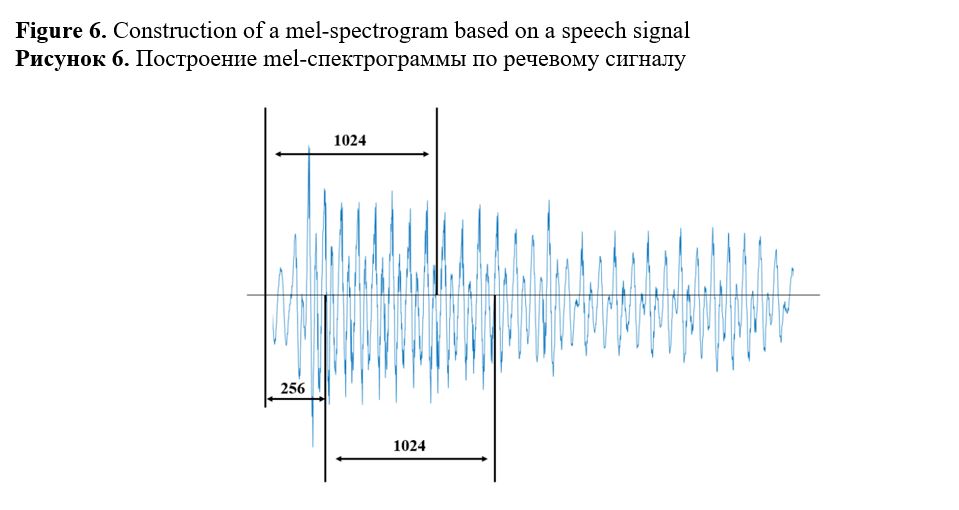

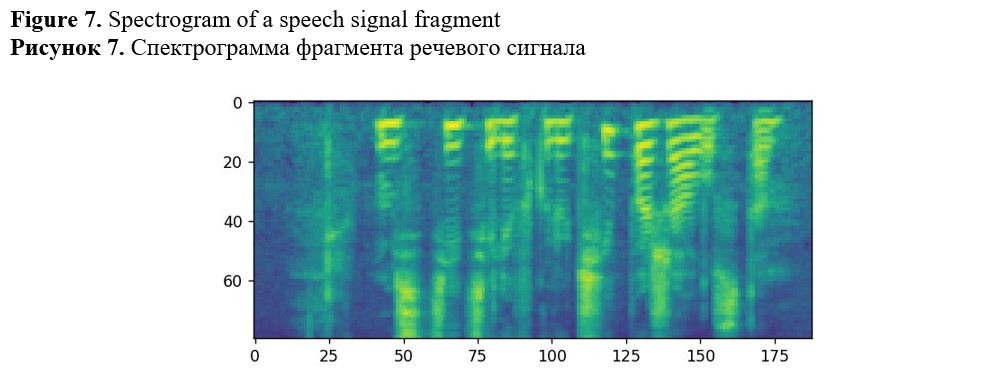

Mel-spectrograms of the speech signal are used as features for emotion recognition, which increased both the recognition rate and the operating speed of the neural network compared with the use of low-level descriptors.
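As an illustration of the feature mentioned above, a log mel-spectrogram can be computed roughly as follows. This is a sketch under assumed settings (16 kHz sampling rate, 400-sample Hann window, 160-sample hop, 64 mel bands, the common `2595 * log10(1 + f / 700)` mel formula); the abstract does not state the parameters actually used, and the test tone stands in for real speech.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sr):
    """Triangular filters whose centres are equally spaced on the mel scale."""
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    edges = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fb = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        lo, mid, hi = edges[i], edges[i + 1], edges[i + 2]
        rising = (fft_freqs - lo) / (mid - lo)
        falling = (hi - fft_freqs) / (hi - mid)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel_spectrogram(signal, sr, n_fft=400, hop=160, n_mels=64):
    """Frame the signal, take the power spectrum, apply mel filters, convert to dB."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2  # (n_frames, n_fft // 2 + 1)
    mel = mel_filterbank(n_mels, n_fft, sr) @ power.T  # (n_mels, n_frames)
    return 10.0 * np.log10(mel + 1e-10)  # small offset avoids log(0)


# One second of a 440 Hz tone as a stand-in for a speech recording.
sr = 16000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 440.0 * t)
spec = log_mel_spectrogram(tone, sr)  # shape: (64 mel bands, 98 frames)
```

The resulting two-dimensional array can be treated like an image, which is what makes it a natural input for a convolutional network.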

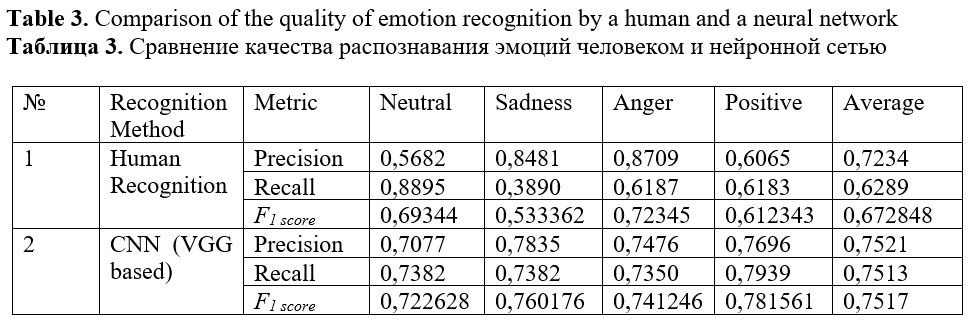

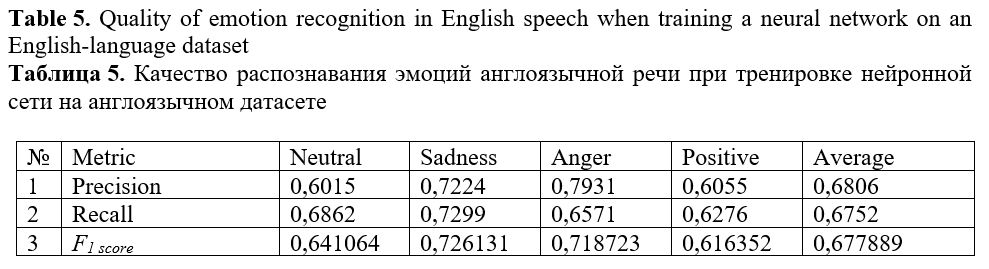

Experiments on the test sample showed that the presented neural network correctly recognizes human emotions from oral speech in 75% of cases, which the authors regard as a high result.
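A figure such as the 75% above is typically an accuracy: the share of test utterances whose predicted label matches the true one. The sketch below shows that calculation together with per-class recall. The label lists are made up for illustration and are not data from the study; the helper names `accuracy` and `per_class_recall` are likewise hypothetical.

```python
from collections import Counter

EMOTIONS = ["sadness", "joy", "anger", "neutral"]


def accuracy(y_true, y_pred):
    """Share of samples whose predicted label equals the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def per_class_recall(y_true, y_pred):
    """For each emotion, the share of its true samples that were recognized."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in EMOTIONS if totals[label]}


# Toy labels only, not results from the study.
y_true = ["sadness", "joy", "anger", "neutral", "sadness", "joy", "anger", "neutral"]
y_pred = ["sadness", "joy", "anger", "neutral", "neutral", "joy", "joy", "neutral"]

acc = accuracy(y_true, y_pred)             # 6 of 8 correct
recall = per_class_recall(y_true, y_pred)  # recall for each of the four classes
```

Per-class recall is worth reporting next to overall accuracy, since a single percentage can hide the fact that some emotions are recognized much better than others.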

Further research involves training and, if necessary, extending the presented neural network to recognize paralinguistic phenomena not covered in this study, such as lies, fatigue and depression.


Balabanova, T. N., Gaivoronskaya, D. I., Doborovich, A. N. (2024). Using neural network technologies in determining the emotional state of a person in oral communication, Research Result. Theoretical and Applied Linguistics, 10 (4), 17–34.

