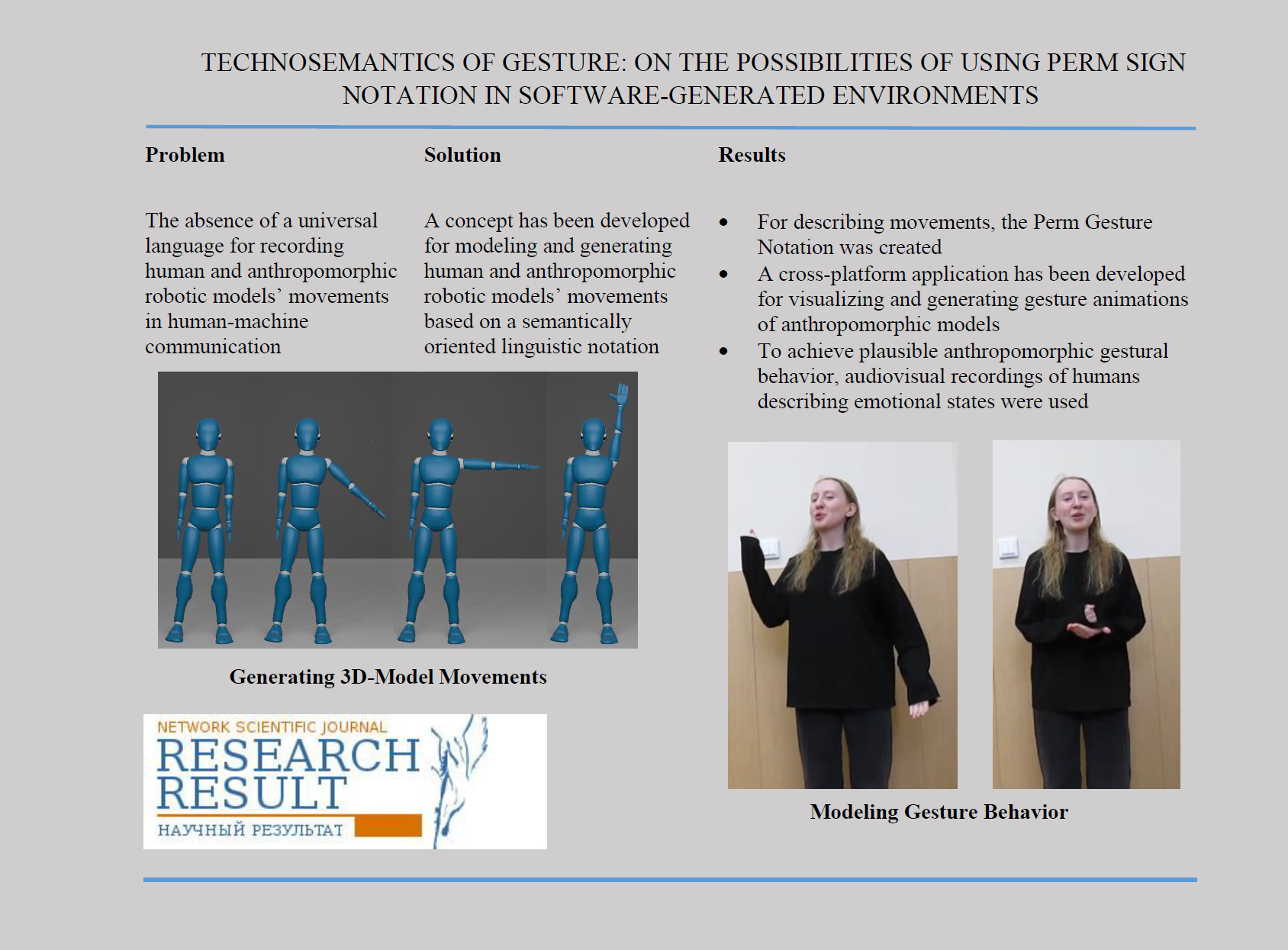

Technosemantics of gesture: on the possibilities of using Perm sign notation in software-generated environments

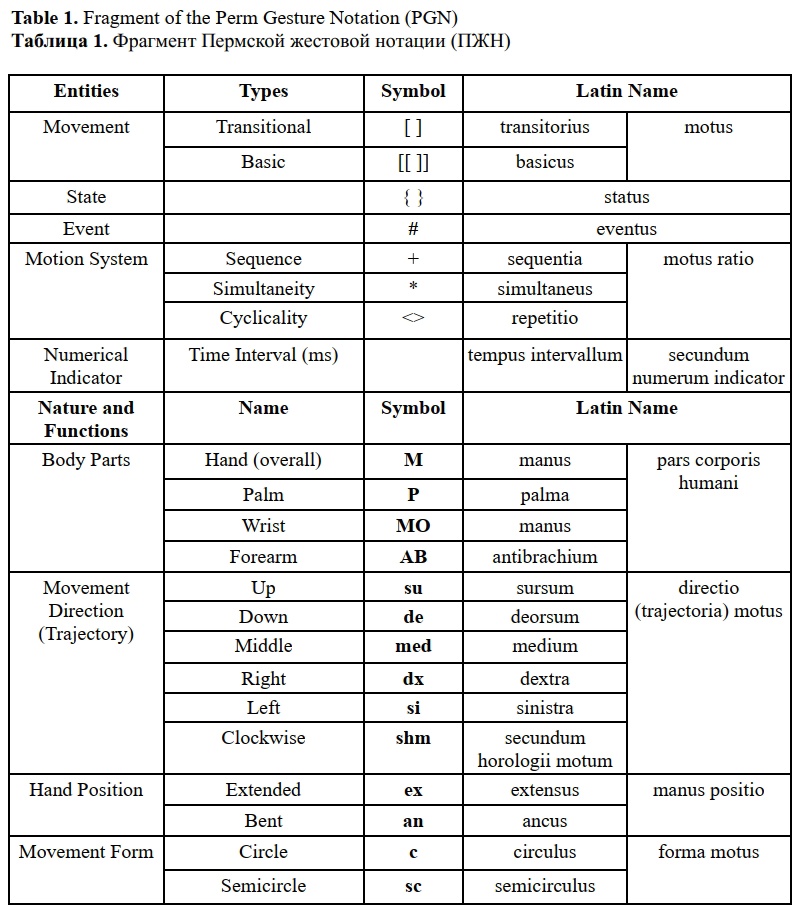

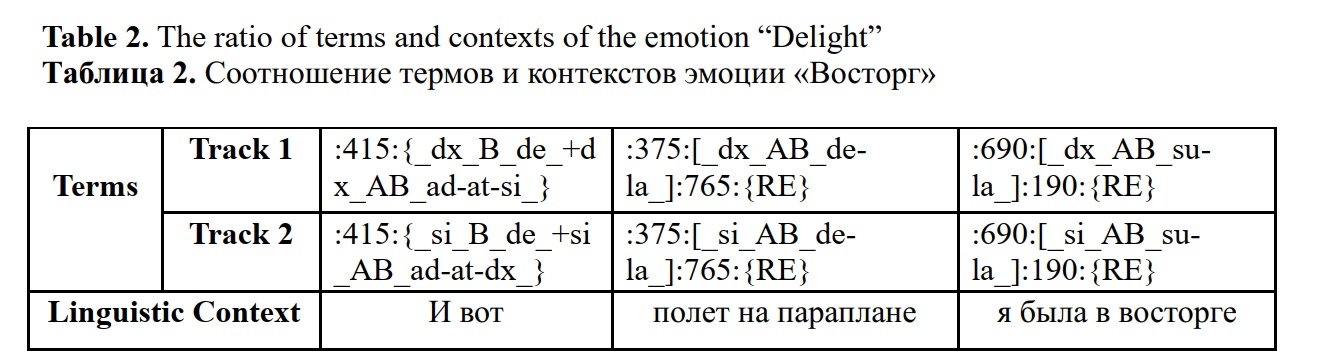

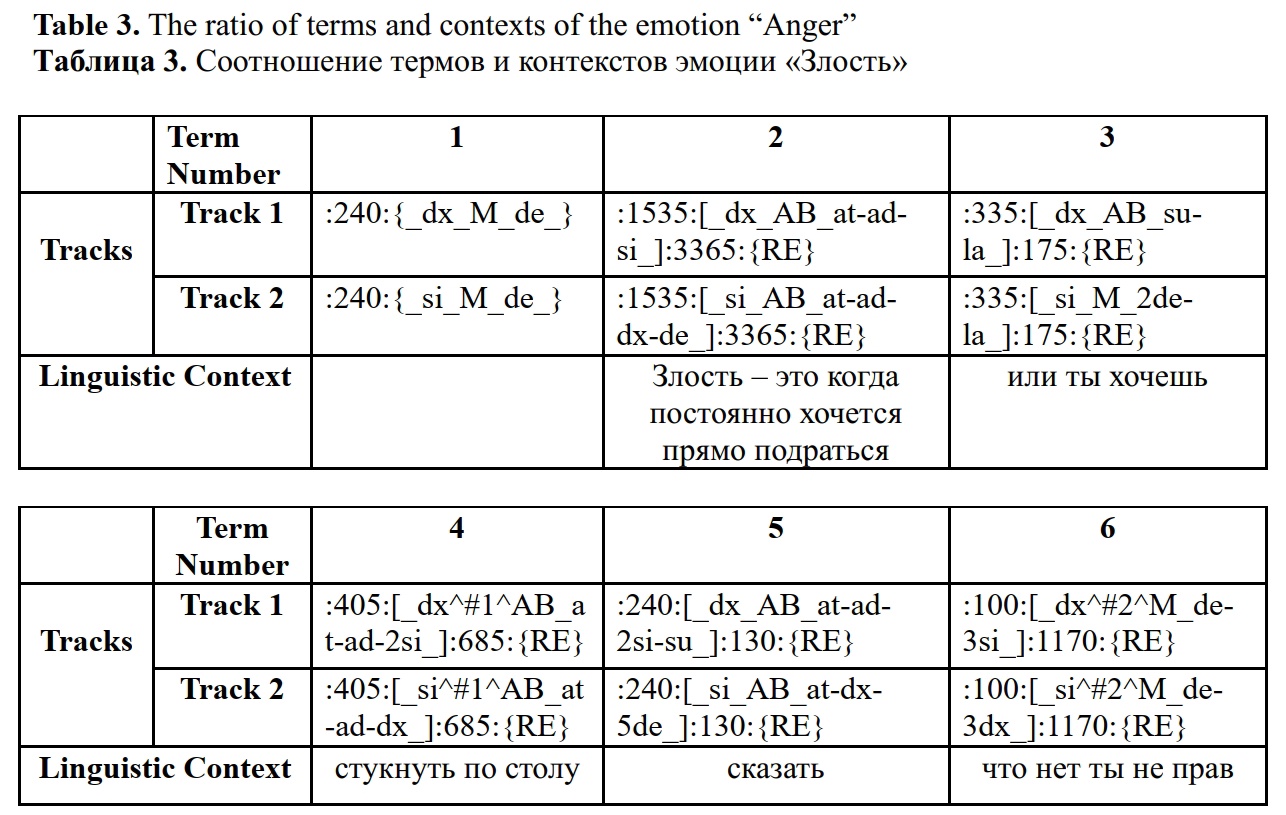

This paper presents a concept and a software solution for generating human movements from a semantically oriented language notation developed by the authors. The notation is expressed as a formula with a flexible structure of concepts and rules for their implementation, which makes it easy to adjust movement parameters to match an ideal or a real sample.
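The abstract does not give the syntax of the Perm sign notation, so the following is only a minimal Python sketch of the general idea of a formula whose parameters can be tuned toward a sample. Everything in it is an assumption: a gesture is modeled as a list of hypothetical `Component` records (body part, action, amplitude, duration), and a hypothetical `adapt` function moves each numeric parameter a fraction `weight` of the way from the "ideal" values toward a recorded sample by simple linear blending, which is not claimed to be the authors' method.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Component:
    """One hypothetical concept in a gesture formula (not the authors' schema)."""
    body_part: str      # e.g. "right_forearm"
    action: str         # e.g. "flex"
    amplitude: float    # assumed unit: degrees
    duration: float     # assumed unit: seconds


def adapt(formula, sample, weight):
    """Linearly blend each component's numeric parameters toward a sample.

    weight=0.0 keeps the original ("ideal") values, weight=1.0 copies the
    sample's values. Components are matched by position and must agree on
    body part and action, i.e. only parameters change, not structure.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    if len(formula) != len(sample):
        raise ValueError("formula and sample must have the same structure")
    adapted = []
    for ideal, real in zip(formula, sample):
        if (ideal.body_part, ideal.action) != (real.body_part, real.action):
            raise ValueError(f"mismatched component: {ideal} vs {real}")
        adapted.append(replace(
            ideal,
            amplitude=ideal.amplitude + weight * (real.amplitude - ideal.amplitude),
            duration=ideal.duration + weight * (real.duration - ideal.duration),
        ))
    return adapted


# Hypothetical "ideal" wave and a hypothetical recorded sample of the same gesture.
ideal_wave = [
    Component("right_upper_arm", "raise", amplitude=90.0, duration=0.5),
    Component("right_forearm", "flex", amplitude=45.0, duration=0.25),
]
recorded_wave = [
    Component("right_upper_arm", "raise", amplitude=70.0, duration=1.0),
    Component("right_forearm", "flex", amplitude=35.0, duration=0.75),
]

halfway = adapt(ideal_wave, recorded_wave, weight=0.5)
print(halfway[0].amplitude, halfway[0].duration)  # 80.0 0.75
```

Keeping the structure (which body parts do what) fixed while only the numeric parameters vary is one simple way to read "flexible structure" plus "adaptation of parameters"; a richer notation could also allow components to be added or removed.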

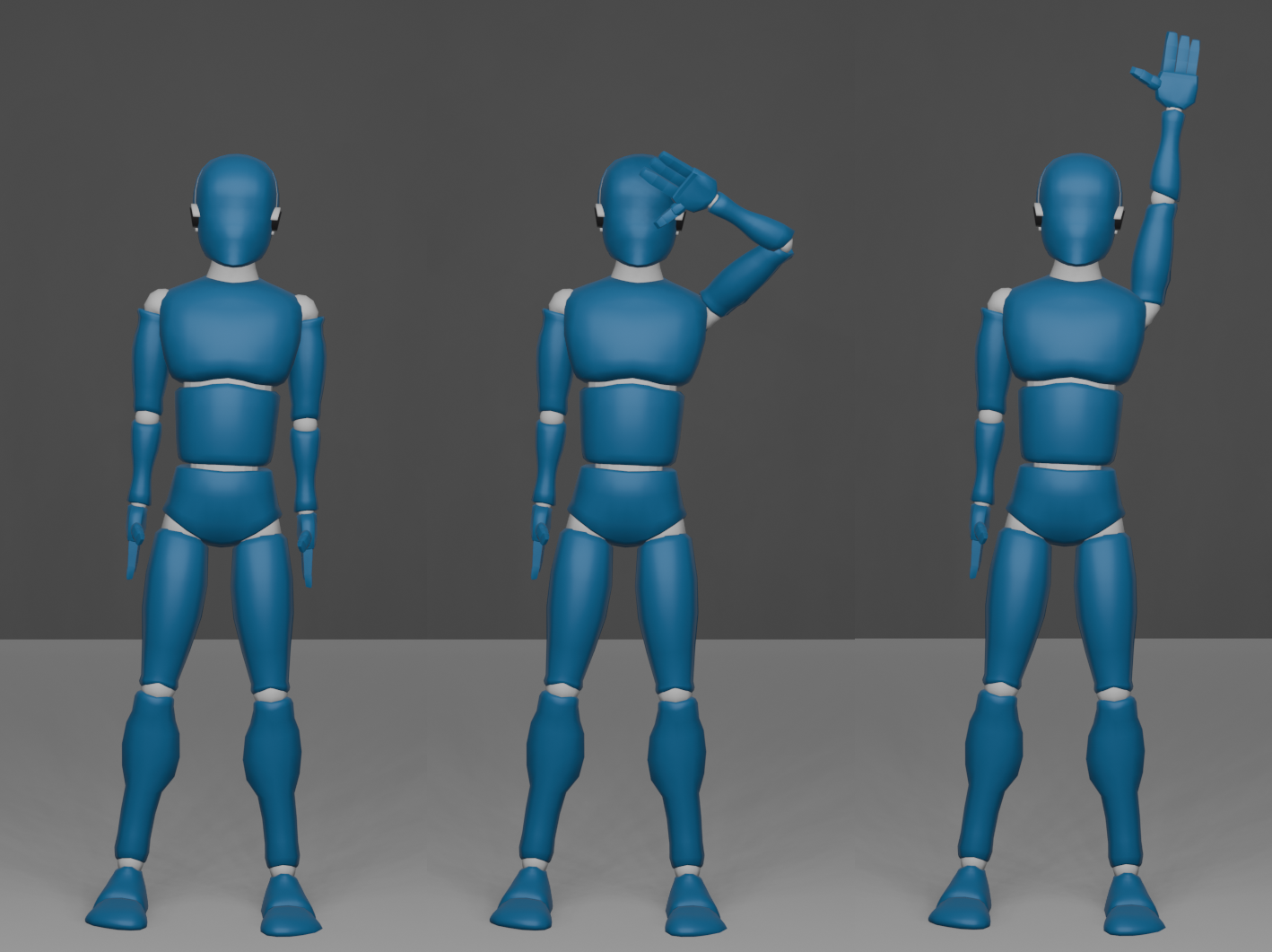

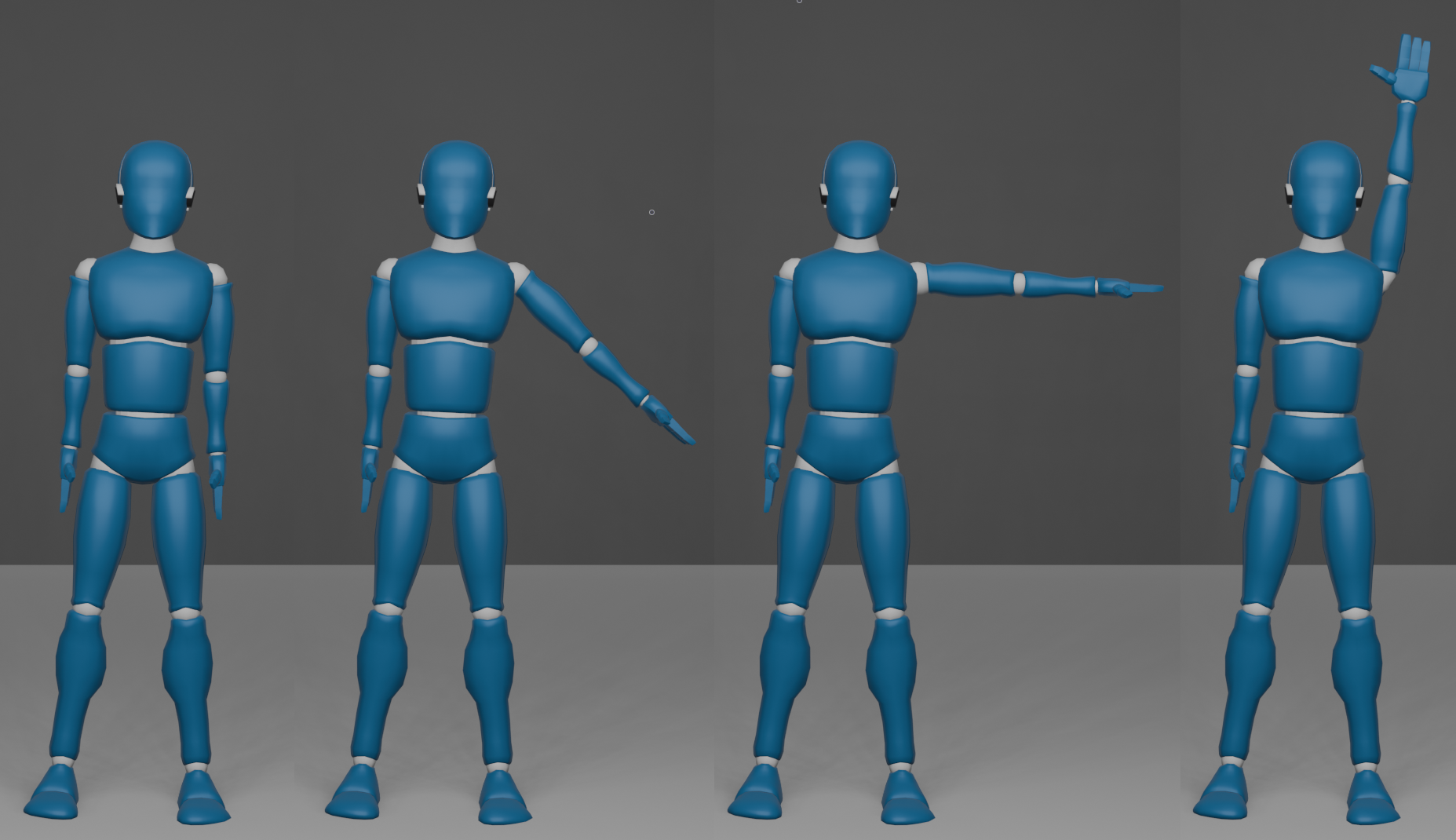

To model movements recorded in this notation, a cross-platform application was developed in Blender 4.2 for visualizing and generating gestures of anthropomorphic models. The movement control system works in stages: the movement is first translated into a gesture notation record; this record is then parsed; and an internal representation of the movement is constructed as a sequence of frames. Each frame specifies which bone (body part) of the performer it refers to, how it affects that bone's position, and at what time from the start of the movement it applies. In the final stage, the internal representation is converted into the movement of the performer, which can be either a virtual anthropomorphic 3D model or a physical hardware-software system such as an anthropomorphic robot.
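The parse-then-frames pipeline described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the record syntax `bone:channel=value@time; ...` is a placeholder invented here (the real Perm sign notation is not shown in the abstract), and the names `Frame`, `parse_record` and `pose_at` are hypothetical. Each frame carries the three pieces of information the text lists (bone, effect on its position, time from the start), and the pose at an arbitrary time is obtained by linear interpolation between a channel's neighbouring frames, which is an assumed choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """Hypothetical frame: which bone, which channel, target value, and when."""
    bone: str       # body part, e.g. "R_UpperArm"
    channel: str    # e.g. "rot_x" (assumed: rotation about x, in degrees)
    value: float
    time: float     # seconds from the start of the movement


def parse_record(record):
    """Parse 'bone:channel=value@time; ...' into frames sorted by time.

    The syntax is a placeholder, not the actual Perm sign notation.
    """
    frames = []
    for item in record.split(";"):
        item = item.strip()
        if not item:
            continue
        target, _, timing = item.partition("@")
        bone_channel, _, value = target.partition("=")
        bone, _, channel = bone_channel.partition(":")
        if not (bone and channel and value and timing):
            raise ValueError(f"malformed entry: {item!r}")
        frames.append(Frame(bone.strip(), channel.strip(), float(value), float(timing)))
    return sorted(frames, key=lambda f: f.time)


def pose_at(frames, t):
    """Return {(bone, channel): value} at time t.

    Values are interpolated linearly between the surrounding frames of each
    channel and held at the first/last value outside the channel's time range.
    """
    tracks = {}
    for f in frames:  # frames are time-sorted, so each track is too
        tracks.setdefault((f.bone, f.channel), []).append(f)
    pose = {}
    for key, track in tracks.items():
        if t <= track[0].time:
            pose[key] = track[0].value
        elif t >= track[-1].time:
            pose[key] = track[-1].value
        else:
            for a, b in zip(track, track[1:]):
                if a.time <= t <= b.time:
                    span = b.time - a.time
                    u = 0.0 if span == 0 else (t - a.time) / span
                    pose[key] = a.value + u * (b.value - a.value)
                    break
    return pose


record = ("R_UpperArm:rot_x=0@0.0; R_UpperArm:rot_x=90@0.5; "
          "R_Forearm:rot_x=0@0.0; R_Forearm:rot_x=45@1.0")
frames = parse_record(record)
print(pose_at(frames, 0.25))
# {('R_UpperArm', 'rot_x'): 45.0, ('R_Forearm', 'rot_x'): 11.25}
```

For the final stage in Blender, each frame could, for example, be written to an armature by setting a pose bone's rotation (converted to radians) and calling its `keyframe_insert(data_path="rotation_euler", frame=...)` method, with seconds converted to scene frames via the scene's frame rate; the abstract does not say how the authors' application performs this step.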

To make the generated movements more anthropomorphic, the authors used not only “ideal samples” of human movement but also models of human gesture behavior drawn from a multimodal corpus that the team created specifically for this purpose. The corpus consists of audiovisual recordings of spontaneous spoken texts in which respondents describe a wide range of their own emotional states. The data obtained in the experimental research confirmed the flexibility, enhanced controllability, and modularity of the notation, as well as its ability to model the continuous space of human motor activity.


Belousov, K. I., Taleski, A., Agaev, A. R. (2024). Technosemantics of gesture: on the possibilities of using Perm sign notation in software-generated environments, Research Result. Theoretical and Applied Linguistics, 10 (4), 126–146.

